Seeing People in Any Light: YOLOv7’s Multispectral Magic for Pedestrian Detection

Hey There, Let’s Talk About Spotting People!

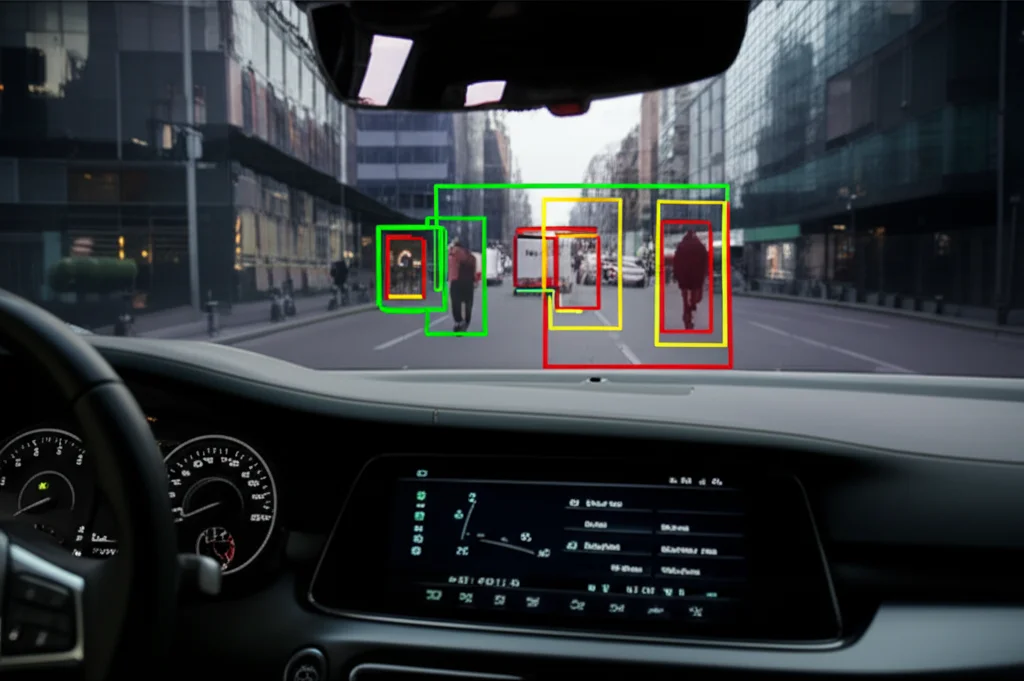

You know how sometimes you see those fancy self-driving cars or super-smart security cameras? A huge part of what makes them work is being able to spot people walking around – we call that pedestrian detection. Easy enough on a sunny day, right? But what happens when it’s dark, foggy, or someone’s partly hidden behind a lamppost? That’s where things get tricky!

For ages, folks in computer vision have been working on this. Back in the day, it was all about hand-crafted features: looking for edges and shapes. Pretty basic, and honestly, not great when the lighting changed or people showed up in different poses, sizes, or clothing. Then came the revolution: deep learning, especially Convolutional Neural Networks (CNNs). These networks learn the useful features directly from images instead of relying on hand-written rules, which boosted accuracy big time.

Even with awesome CNN-based methods like YOLO (You Only Look Once, how cool is that name?) and Faster R-CNN, those tough conditions – like poor light or obstructions – still caused headaches. Plus, the heavier detectors (two-stage designs like Faster R-CNN in particular) can be slow and need a lot of computing power. Not ideal if you need real-time detection, like in a moving car!

Giving Computers Night Vision: The Multispectral Idea

So, what’s the next step? Well, our regular cameras (the RGB ones, like on your phone) are great for color and detail when there’s light. But thermal cameras, which see heat, are fantastic in the dark or through fog. They’re *complementary* – they offer different but useful information. The smart idea is: why not use *both*?

That’s the core of multispectral pedestrian detection. It’s about combining data from these different “eyes” to get a more complete picture. This research dives deep into exactly *how* to best combine this RGB and thermal data using a powerful framework called YOLOv7.

The Big Question: How Do You Mix the Signals?

Think of it like mixing ingredients when you’re cooking. You could mix everything right at the start (early fusion), mix some things in the middle (halfway fusion), or cook everything separately and then just combine the finished dishes (late fusion). This study put these three main strategies to the test within the YOLOv7 network to see which one works best for spotting pedestrians using multispectral data.

- Early Fusion: This is like mixing the RGB and thermal images pixel-by-pixel right at the very beginning, before the network even starts really processing them. It’s simple, but it might struggle to understand the deeper meaning (semantic information) later on.

- Halfway Fusion: Here, the network processes the RGB and thermal data separately for a bit, extracting some initial features. Then, at the *middle* layers of the network, it combines these features. This seems promising because it gets some early details but also starts to understand the scene better before mixing.

- Late Fusion: In this approach, the network processes the RGB and thermal data almost completely independently, extracting high-level features from each. The fusion happens right at the end, combining the *results* or final features before making a detection. This can be very accurate because each modality gets its full processing, but it might be slower.
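
To make the difference concrete, here is a minimal sketch of *where* the mixing happens in each strategy. It is not the actual YOLOv7 code: the function names and the toy `double` and `total` stages are hypothetical stand-ins for real network layers, and images are reduced to short lists of numbers.

```python
from typing import Callable, List, Sequence

Features = List[float]
Stage = Callable[[Features], Features]


def run(stages: Sequence[Stage], x: Features) -> Features:
    """Apply a sequence of processing stages in order."""
    for stage in stages:
        x = stage(x)
    return x


def early_fusion(rgb: Features, thermal: Features,
                 backbone: Sequence[Stage]) -> Features:
    # Join the raw inputs first, then run one shared network over everything.
    return run(backbone, rgb + thermal)


def halfway_fusion(rgb: Features, thermal: Features,
                   rgb_stem: Sequence[Stage], thermal_stem: Sequence[Stage],
                   shared: Sequence[Stage]) -> Features:
    # Each modality gets its own early stages; features are joined mid-network.
    fused = run(rgb_stem, rgb) + run(thermal_stem, thermal)
    return run(shared, fused)


def late_fusion(rgb: Features, thermal: Features,
                rgb_branch: Sequence[Stage], thermal_branch: Sequence[Stage],
                head: Stage) -> Features:
    # Each modality runs through a full branch; only the outputs are joined.
    return head(run(rgb_branch, rgb) + run(thermal_branch, thermal))


# Toy stand-ins for network layers (hypothetical, for illustration only).
def double(x: Features) -> Features:
    return [2 * v for v in x]


def total(x: Features) -> Features:
    return [sum(x)]


rgb_pixels, thermal_pixels = [1.0, 2.0, 3.0], [4.0]
print(early_fusion(rgb_pixels, thermal_pixels, [double, total]))
print(halfway_fusion(rgb_pixels, thermal_pixels, [double], [total], [total]))
print(late_fusion(rgb_pixels, thermal_pixels,
                  [double, total], [double, total], total))
```

The only thing that changes between the three functions is the point at which the two lists are joined: before any processing, after the per-modality stems, or after two full branches.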

The researchers ran a bunch of experiments comparing these three strategies on multispectral pedestrian detection tasks. The goal was to figure out which fusion approach gives the best trade-off between accuracy and speed.

The Winner Is… Halfway Fusion!

After all the testing, the halfway fusion strategy came out on top! It achieved high detection accuracy while still maintaining a relatively fast speed. This suggests that, of the three strategies tested, blending the information from the two sensors at the intermediate layers of the network is the most effective way to leverage their complementary strengths.

This is a big deal because it means we can potentially build systems that are much better at spotting people in all sorts of challenging real-world conditions – not just on sunny days. It balances getting detailed information from both types of cameras with understanding what those details mean in the context of finding a person.

Adding Some Secret Sauce: CFCM and MCSF Modules

The researchers didn’t stop there. They also introduced some clever additions to their YOLOv7-based network to make the fusion even better. They call these the CFCM (Cross-Modal Feature Complementary Module) and the MCSF (Multi-Scale Cross-Spectrum Fusion) modules.

The CFCM is pretty neat. It lets features from one modality (say, thermal) fill in what the other (RGB) is missing, especially when one is struggling (like RGB in the dark). It’s like one camera whispering tips to the other: “Hey, I see a heat signature here, maybe look closer in your blurry image!” This helps recover information that might be lost in one sensor feed, reducing missed detections.
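
The article doesn’t spell out what happens inside the CFCM, so the snippet below is only a generic stand-in for the “whispering tips” idea: each modality’s features get a scaled copy of the other’s added on top. The function name `cross_modal_exchange` and the `alpha` mixing strength are assumptions made for illustration, not the authors’ design.

```python
from typing import List, Tuple


def cross_modal_exchange(rgb_feat: List[float], thermal_feat: List[float],
                         alpha: float = 0.5) -> Tuple[List[float], List[float]]:
    """Let each modality borrow a scaled copy of the other's features.

    Hypothetical stand-in for a cross-modal complementary step; `alpha`
    controls how strongly one modality informs the other.
    """
    if len(rgb_feat) != len(thermal_feat):
        raise ValueError("feature vectors must have the same length")
    rgb_out = [r + alpha * t for r, t in zip(rgb_feat, thermal_feat)]
    thermal_out = [t + alpha * r for r, t in zip(rgb_feat, thermal_feat)]
    return rgb_out, thermal_out


# A dark scene: RGB sees nothing at position 0, thermal sees a strong response.
rgb_out, thermal_out = cross_modal_exchange([0.0, 2.0], [4.0, 2.0])
print(rgb_out, thermal_out)
```

In the toy example, the RGB feature that started at zero picks up a non-zero value from the thermal side, which is the kind of recovery the paragraph above describes.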

The MCSF module is smart about lighting. It adaptively adjusts how much it trusts the RGB versus the thermal data based on how bright the scene is. During the day, it might lean more on the detailed RGB data. At night, it relies more heavily on the thermal data, which doesn’t depend on visible light. This adaptive weighting helps keep the fusion effective across lighting conditions.
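
Here is a rough sketch of what brightness-dependent weighting can look like. The article doesn’t say how the MCSF module computes its weights, so the sigmoid mapping, the `sharpness` parameter, and both function names are assumptions chosen to keep the example small.

```python
import math
from typing import List


def illumination_weight(mean_brightness: float, sharpness: float = 10.0) -> float:
    """Map a mean brightness in [0, 1] to an RGB weight in (0, 1).

    Assumed form: a sigmoid centred on 0.5, so bright scenes favour RGB
    and dark scenes favour thermal.
    """
    return 1.0 / (1.0 + math.exp(-sharpness * (mean_brightness - 0.5)))


def fuse_by_illumination(rgb_feat: List[float], thermal_feat: List[float],
                         mean_brightness: float) -> List[float]:
    """Blend the two feature vectors with a brightness-dependent weight."""
    w = illumination_weight(mean_brightness)
    return [w * r + (1.0 - w) * t for r, t in zip(rgb_feat, thermal_feat)]


day = fuse_by_illumination([1.0, 1.0], [0.0, 0.0], mean_brightness=0.9)
night = fuse_by_illumination([1.0, 1.0], [0.0, 0.0], mean_brightness=0.1)
print(day, night)
```

With RGB features set to 1 and thermal to 0, the fused value is simply the RGB weight, so the daytime result sits close to 1 and the nighttime result close to 0.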

By integrating these modules into the halfway fusion architecture, the network becomes even more robust and accurate. The ablation studies (experiments where they tested the network with and without these modules) showed that adding them significantly improved performance, especially in tough scenarios.

Putting It to the Test: Datasets and Results

To prove their point, they tested their method extensively on two well-known multispectral pedestrian datasets: KAIST and UTOKYO. These datasets include tons of images captured under various conditions, including different times of day, occlusions, and scales.

They measured performance using standard metrics like miss rate (the fraction of actual pedestrians that were missed), precision (the fraction of detections that were correct), recall (the fraction of actual pedestrians that were detected), F1 score (a balance of precision and recall), mAP (mean Average Precision, a common object detection metric), and FPS (frames per second, for speed).
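
If you want to see how the count-based metrics relate to each other, here is a small sketch that computes them from true positives, false positives, and false negatives. The function name and the example counts are made up for illustration. Note that this is the plain miss rate at a single operating point; pedestrian benchmarks often report a log-average miss rate over a range of false-positive rates, which is not computed here.

```python
from typing import Dict


def detection_metrics(tp: int, fp: int, fn: int) -> Dict[str, float]:
    """Compute precision, recall, F1, and miss rate from detection counts.

    tp: correct detections, fp: false alarms, fn: missed pedestrians.
    Ratios with an empty denominator are reported as 0.0.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    miss_rate = fn / (tp + fn) if (tp + fn) else 0.0
    return {"precision": precision, "recall": recall,
            "f1": f1, "miss_rate": miss_rate}


# Hypothetical counts: 80 pedestrians found, 20 false alarms, 20 missed.
print(detection_metrics(tp=80, fp=20, fn=20))
```

Miss rate and recall always add up to 1, which is why a detector with a low miss rate is, by definition, one with high recall.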

The results were impressive! Their YOLOv7-based method with halfway fusion and the special modules achieved the lowest miss rate on the KAIST dataset compared to many other state-of-the-art methods, including both faster single-stage detectors and more complex two-stage detectors. Crucially, it performed particularly well at night, confirming the benefit of using thermal data and the smart fusion strategy.

On the UTOKYO dataset, which also presents challenging conditions, their method again outperformed others, showing its effectiveness across different data sources.

Why This Matters for the Real World

Okay, so they built a better pedestrian detector. Why should you care? Because this kind of technology is crucial for making our world safer and smarter.

- Intelligent Transportation: Think self-driving cars that can reliably see people even in bad weather or at night, preventing accidents.

- Security and Surveillance: Cameras that can spot intruders or people in low-light areas more effectively.

- Robotics: Robots that need to navigate environments with people, regardless of the conditions.

The fact that their method is not only accurate but also relatively fast (achieving 12.5 FPS on their test setup, or about 80 milliseconds per frame) puts it within reach of real-time applications. It strikes that important balance between seeing well and seeing fast.

What’s Next?

Science never stops! The researchers mention that future work could involve exploring even more advanced ways to combine the features from the two modalities, maybe using different types of “attention” mechanisms to help the network focus on the most important information. They also want to make the network even lighter and faster, pushing the boundaries for real-time performance.

Wrapping It Up

So, there you have it. This research shows that by smartly combining the strengths of visible light and thermal cameras using a powerful network like YOLOv7, and specifically by fusing the information in the middle layers (halfway fusion), we can significantly improve pedestrian detection. It’s a big step towards making systems that can reliably see people anytime, anywhere, paving the way for safer and more intelligent technologies.

Source: Springer