Giving Robots a Soul? Diving into Personality and Cognitive Simulation

Hey there! Let’s chat about something pretty wild that’s happening in the world of robots. You know how sometimes interacting with technology can feel a bit… well, robotic? Flat, predictable, maybe even a little cold? What if we could change that? What if robots could have something akin to a *personality*?

That’s exactly what some brilliant minds have been digging into, and let me tell you, it’s fascinating. We’re talking about giving robots traits, quirks, maybe even a bit of ‘soul’ using the power of those big language models, like GPT-4, that everyone’s buzzing about. It’s not just about making them chat better; it’s about building a whole *cognitive system* that lets them think, feel (in a simulated way, of course!), remember, and even understand a bit about *us*.

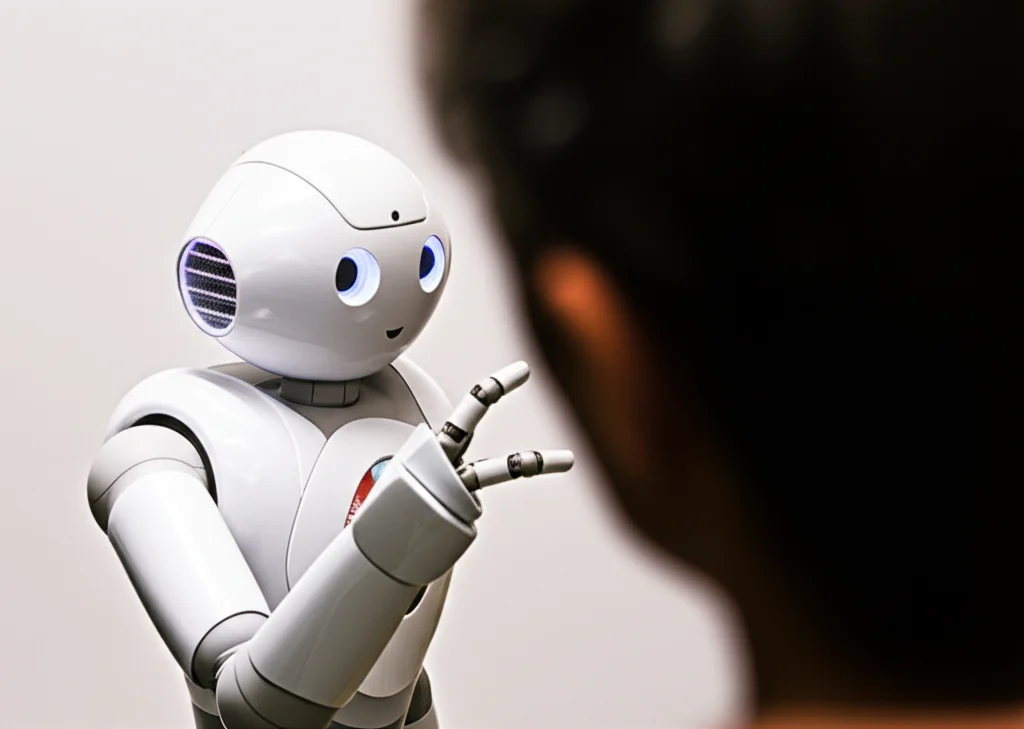

Think about it: human-robot interaction (HRI) is becoming a bigger deal every day. Robots are popping up everywhere, from factories to our homes. And while the idea of a super human-like robot might give some folks the creeps initially (that’s the famous *uncanny valley* effect!), studies show that once you actually *interact* with a robot that has a personality, things change. People actually *prefer* robots with distinct personalities! It makes the interaction smoother, more engaging, and honestly, just more enjoyable.

Now, figuring out the *right* personality for a robot isn’t a one-size-fits-all deal. It totally depends on what the robot is supposed to *do*. A robot helping in a hospital might need a different vibe than one serving coffee or helping kids learn. People can totally tell the difference between robot personalities, and their preferences shift based on whether the robot is focused on getting a job done or just hanging out and providing an experience. We’ve even seen robots designed with introverted or extroverted traits to help people recover from strokes! It turns out, tailoring a robot’s behavior to the user’s personality can really boost performance and make the user feel better about the interaction.

Past research often leaned heavily on the *Big Five* personality model (you know, Extraversion, Agreeableness, Conscientiousness, Neuroticism, Openness) to give robots a bit of character. And yeah, that’s a start. But the problem was, these robots were mostly just mimicking personality in their *conversation*. They didn’t really have the underlying *thoughts* or *decisions* that drive human personality. It was like a good actor reading lines but not truly *feeling* the role.

That’s where this new research comes in. The goal? To create a robot personality model and a *cognitive robot framework* that doesn’t just slap on a personality layer but actually *simulates* a comprehensive personality with built-in cognition. It’s about creating something closer to a *digital twin* of an individual’s personality, not just a chatbot with a predefined style.

Beyond the Big Five: Building a Deeper Personality

So, how do you build a personality that’s more than just five sliders? This research proposes a model that goes deeper than just the Big Five. It pulls from other psychological theories:

- Cattell’s 16 Personality Factors (16PF): This dives into more specific inner traits and attitudes.

- George Kelly’s Role Construct Repertory: This looks at how individuals interpret the world and others, focusing on observable attributes from a societal perspective.

- Preferences: Simple, but crucial – what the robot likes and dislikes!

By combining these, you get a richer, more nuanced personality than just the standard five dimensions. It’s about creating an agent that’s *anthropomorphized* (made to seem human-like) based on solid psychological ideas.
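To make the idea of a layered personality more concrete, here’s a minimal Python sketch of how such a profile might be stored and turned into prompt text for an LLM. The class name, field names, and the 1-10 scoring scale are all hypothetical; the paper’s actual data format isn’t described here.

```python
from dataclasses import dataclass, field

@dataclass
class PersonalityProfile:
    """Hypothetical container for a layered robot personality."""
    # Cattell-style 16PF factors, each scored on a 1-10 scale (e.g. "Warmth": 8)
    sixteen_pf: dict[str, int] = field(default_factory=dict)
    # Kelly-style personal constructs: (one pole, opposite pole, pole the robot leans toward)
    constructs: list[tuple[str, str, str]] = field(default_factory=list)
    likes: list[str] = field(default_factory=list)
    dislikes: list[str] = field(default_factory=list)

    def to_prompt(self) -> str:
        """Render the profile as plain text for an LLM system prompt."""
        lines = ["Inner traits (16PF, 1-10):"]
        for factor, score in sorted(self.sixteen_pf.items()):
            lines.append(f"- {factor}: {score}")
        lines.append("How others see you (personal constructs):")
        for pole, opposite, leaning in self.constructs:
            lines.append(f"- {pole} vs. {opposite}: leans {leaning}")
        lines.append("Likes: " + ", ".join(self.likes))
        lines.append("Dislikes: " + ", ".join(self.dislikes))
        return "\n".join(lines)


profile = PersonalityProfile(
    sixteen_pf={"Warmth": 8, "Dominance": 3},
    constructs=[("reserved", "outgoing", "outgoing")],
    likes=["strawberry macarons"],
    dislikes=["rudeness"],
)
print(profile.to_prompt())
```

Keeping the three layers in separate fields makes it easy to tweak one (say, bumping up agreeableness-related factors) without touching the others.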

But personality isn’t just a set of traits; it’s shaped by *experience* and driven by *internal processes*. That’s where the cognitive framework comes in.

The Robot’s Inner World: The Cognitive Framework

Imagine giving a robot a brain that can actually process information, form intentions, feel simulated emotions, and *remember* things. That’s the essence of this cognitive robot framework. It’s built using a state-space realization model, which is a fancy way of saying it tracks the robot’s internal state and the environment’s state over time.
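In control-theory terms, a discrete-time state-space model has the general form x(t+1) = f(x(t), u(t)) for the state update and y(t) = g(x(t), u(t)) for the output, where u is the input. Below is a toy Python instance of that general form, assuming the state is just an emotion label plus a turn counter and the input is the user’s message. The paper’s real state variables and functions are much richer and aren’t reproduced here; all names are hypothetical.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class RobotState:
    """Hypothetical internal state x(t): an emotion label and a turn counter."""
    emotion: str
    turn: int

def transition(x: RobotState, u: str) -> RobotState:
    """x(t+1) = f(x(t), u(t)): a toy rule that cheers up when thanked."""
    emotion = "happy" if "thank" in u.lower() else x.emotion
    return replace(x, emotion=emotion, turn=x.turn + 1)

def output(x: RobotState, u: str) -> str:
    """y(t) = g(x(t), u(t)): the reply depends on the current state and input."""
    return f"[{x.emotion}] reply to: {u}"

def run(x0: RobotState, inputs: list[str]) -> tuple[RobotState, list[str]]:
    """Step the model through a sequence of inputs, collecting the outputs."""
    x, ys = x0, []
    for u in inputs:
        ys.append(output(x, u))  # output uses the state before the update
        x = transition(x, u)
    return x, ys
```

Because the output is computed from the state *before* the update, a "thanks!" only changes the tone of the robot’s next reply, which is the standard state-space convention.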

The core idea is that the robot’s actions and responses aren’t just random or pre-programmed; they’re the result of complex internal processing, much like humans’. And guess what’s driving a lot of this processing? You guessed it: Large Language Models (LLMs), specifically GPT-4 in this case, powered by clever *prompting techniques*. LLMs are remarkably good at understanding and generating human-like text, which makes them well suited to simulating these cognitive functions.

Let’s peek inside this cognitive engine:

Memory: More Than Just Chat History

Humans have different kinds of memory, right? Short-term (remembering what someone just said) and long-term (remembering past events, experiences, knowledge). A truly cognitive robot needs this too.

- Short-Term Memory (STM): This is handled by a buffer that keeps track of the recent conversation turns. It’s like the robot’s working memory, helping it follow the immediate flow of dialogue.

- Long-Term Memory (LTM): This is where things get interesting. LTM isn’t just stored as raw text. It’s encoded using techniques like *document embedding*, which turn text into numerical vectors that capture meaning. Memories are also tagged with *timing* information. Retrieval uses algorithms such as Maximal Marginal Relevance (MMR), which pick memories relevant to the current conversation while penalizing ones that merely repeat each other, balancing relevance and diversity. This lets the robot recall past interactions or knowledge relevant to the current chat, making its responses more informed and consistent with its ‘experience’.
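A short-term buffer like this is easy to sketch. Here’s one hypothetical way to do it in Python with `collections.deque`, which discards the oldest turn automatically once the buffer is full. The class name and the six-turn default are assumptions, not values from the paper.

```python
from collections import deque

class ShortTermMemory:
    """Hypothetical rolling buffer of the most recent dialogue turns."""

    def __init__(self, max_turns: int = 6):
        # A deque with maxlen drops the oldest item when a new one is appended
        self._buffer = deque(maxlen=max_turns)

    def add(self, speaker: str, text: str) -> None:
        self._buffer.append((speaker, text))

    def as_context(self) -> str:
        """Format the remembered turns as text for the next LLM call."""
        return "\n".join(f"{speaker}: {text}" for speaker, text in self._buffer)
```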

Think about how your own past experiences shape your current conversations and reactions. That’s the kind of effect they’re trying to simulate here.
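For the long-term side, here’s a from-scratch Python sketch of Maximal Marginal Relevance over embedding vectors. Each step picks the memory that maximizes `lambda_mult * relevance - (1 - lambda_mult) * redundancy`, where relevance is cosine similarity to the query and redundancy is the highest similarity to anything already picked. The function names and the default `lambda_mult=0.5` are assumptions; in a real system the vectors would come from a document-embedding model, with timing tags stored alongside them.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def mmr(query, candidates, k=2, lambda_mult=0.5):
    """Return indices of k candidates chosen by Maximal Marginal Relevance."""
    selected = []
    remaining = list(range(len(candidates)))
    while remaining and len(selected) < k:
        def score(i):
            relevance = cosine(query, candidates[i])
            # Redundancy: similarity to the closest memory already selected
            redundancy = max((cosine(candidates[i], candidates[j]) for j in selected), default=0.0)
            return lambda_mult * relevance - (1 - lambda_mult) * redundancy
        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return selected
```

With `lambda_mult=1.0` this reduces to plain similarity search; lowering it trades some relevance for diversity, so two near-identical memories are less likely to both be recalled.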

Intention and Motivation: Why Does the Robot Say That?

Humans talk with purpose, right? We have intentions – we want to inform, persuade, ask, or just connect. A robot with personality needs intentions too. This framework includes a *motivation unit* driven by simulated ‘desires’ or objectives, somewhat inspired by things like Maslow’s hierarchy of needs.

The robot has a *planning function* that figures out the best strategy for its next response based on its current objective, short-term memory, environment, and even its simulated emotion. This ensures the robot’s dialogue isn’t just reactive but *intention-driven*, making it feel more purposeful and human-like.
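Here’s a hypothetical Python sketch of how a needs-driven objective and the planner’s inputs could be wired together. The need levels, the 0.5 threshold, and the prompt wording are illustrative assumptions; the paper’s actual motivation unit and planning prompts aren’t reproduced here.

```python
# Hypothetical need levels, most basic first (loosely Maslow-inspired, not the paper's list)
NEED_ORDER = ["energy", "safety", "belonging", "esteem", "self_actualization"]

def choose_objective(satisfaction: dict[str, float], threshold: float = 0.5) -> str:
    """Return the most basic need whose satisfaction (0..1) is below the threshold."""
    for need in NEED_ORDER:
        if satisfaction.get(need, 0.0) < threshold:
            return need
    return NEED_ORDER[-1]  # everything satisfied: aim for growth

def build_planning_prompt(objective: str, short_term: str, environment: str, emotion: str) -> str:
    """Bundle the planner's inputs into text for a (hypothetical) LLM planning call."""
    return (
        "Plan the robot's next reply.\n"
        f"Objective: {objective}\n"
        f"Emotion: {emotion}\n"
        f"Environment: {environment}\n"
        f"Recent dialogue:\n{short_term}\n"
        "Answer with a one-sentence strategy."
    )
```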

Emotion Generation: Feeling (Sort Of) the Vibe

Okay, robots don’t *feel* emotions like we do. But they can *simulate* emotional responses based on the situation. This framework has an *emotion generative function* that calculates an appropriate emotion based on factors like whether the robot feels ‘offended’ (leading to simulated anger or fear) or whether the current situation aligns with its objective or predictions about the future (leading to happiness, sadness, disappointment, surprise). These simulated emotions then influence the robot’s response, making it more dynamic and expressive.
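The kind of rules described above can be expressed as a tiny appraisal function. This Python sketch is hypothetical: the paper computes emotions with its own generative function, and the `can_push_back` flag used here to choose between anger and fear is an invented stand-in.

```python
def generate_emotion(offended: bool, can_push_back: bool,
                     goal_met: bool, expected_goal_met: bool) -> str:
    """Toy appraisal rules mapping a situation to a simulated emotion label.

    offended:          the user's last message was judged offensive
    can_push_back:     hypothetical flag: the robot judges it can safely object
    goal_met:          the situation currently serves the robot's objective
    expected_goal_met: the robot had predicted the objective would be met
    """
    if offended:
        return "anger" if can_push_back else "fear"
    if goal_met:
        # Good outcome: expected -> happiness, unexpected -> surprise
        return "happiness" if expected_goal_met else "surprise"
    # Bad outcome: predicted success -> disappointment, otherwise sadness
    return "disappointment" if expected_goal_met else "sadness"
```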

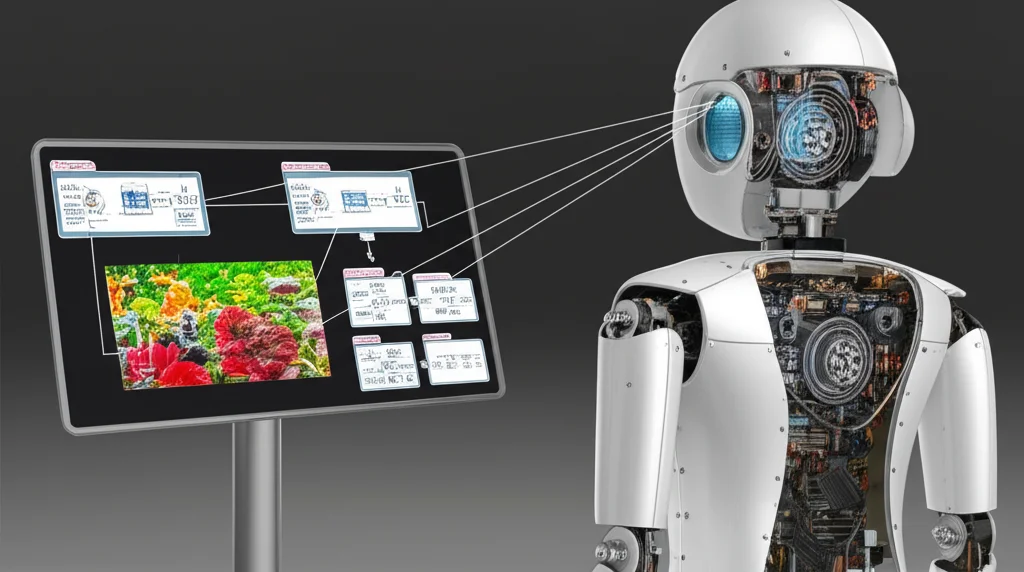

Visual Processing: Seeing the World

Beyond just text, the framework can process visual information. It takes an image and the user’s text query, generates a description of the image, and uses an *attention mechanism* to focus on relevant parts of the picture based on the conversation. This means the robot can actually ‘see’ and understand what you’re talking about when you show it a picture, integrating visual cues into its cognitive process and responses.
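The attention step can be illustrated with standard scaled dot-product attention: score each image region by its similarity to the query, then normalize the scores with a softmax. This Python sketch assumes the query and the regions already have embeddings in the same vector space (the toy vectors in any example would be made up); it shows the general technique, not the paper’s specific visual pipeline.

```python
import math

def attend(query, regions):
    """Scaled dot-product attention weights of a text query over image regions.

    query:   embedding of the user's question (list of floats)
    regions: list of (label, embedding) pairs for detected image regions
    Returns (label, weight) pairs sorted from most to least attended.
    """
    d = len(query)
    scores = [sum(q * r for q, r in zip(query, emb)) / math.sqrt(d) for _, emb in regions]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # subtract the max for numerical stability
    total = sum(exps)
    weighted = [(label, e / total) for (label, _), e in zip(regions, exps)]
    return sorted(weighted, key=lambda pair: pair[1], reverse=True)
```

The highest-weighted regions are the ones whose descriptions would be emphasized when the image is folded into the robot’s reasoning.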

Putting it All Together: The Inference Engine

All these cognitive pieces – memory, intention, emotion, visual input – feed into an *inference function*. This is where the magic happens. The inference unit takes all this internal information, combines it with the user’s input, and decides on the robot’s behavior – what it says, what action it takes. The core of the simulated personality lives here, guided by the prompting templates that define the robot’s rules, personality traits (internal and external), background, and speaking tone.
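Since the personality lives largely in the prompt, it can help to see how such a template might be assembled. The section names below mirror the ingredients listed above (rules, internal and external traits, background, tone, plus the cognitive context), but the function name, field names, and layout are hypothetical.

```python
PERSONA_FIELDS = ("rules", "internal_traits", "external_traits", "background", "tone")
CONTEXT_FIELDS = ("memories", "intention", "emotion", "visual_description")

def build_inference_prompt(persona: dict[str, str], context: dict[str, str], user_input: str) -> str:
    """Assemble a hypothetical inference prompt from persona and cognitive context."""
    missing = [name for name in PERSONA_FIELDS if name not in persona]
    if missing:
        raise ValueError(f"persona is missing fields: {missing}")
    # Persona sections are required; context sections fall back to "(none)"
    parts = [f"## {name.replace('_', ' ').title()}\n{persona[name]}" for name in PERSONA_FIELDS]
    parts += [f"## {name.replace('_', ' ').title()}\n{context.get(name, '(none)')}"
              for name in CONTEXT_FIELDS]
    parts.append(f"## User\n{user_input}\n## Robot")
    return "\n\n".join(parts)
```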

Meet Mobi: The Robot Test Subject

This whole framework was put to the test on a service robot named Mobi. Mobi isn’t just a chatbot on a screen; it’s a physical robot with a depth camera, touch panel, arms, and chassis. For this research, Mobi’s personality was configured to be similar to one of the researchers, but with enhanced agreeableness (because, you know, friendly robots are nice!).

Testing the Personality and the Mind

So, did it work? Could Mobi really simulate a personality and cognitive processes? They put it through some rigorous tests.

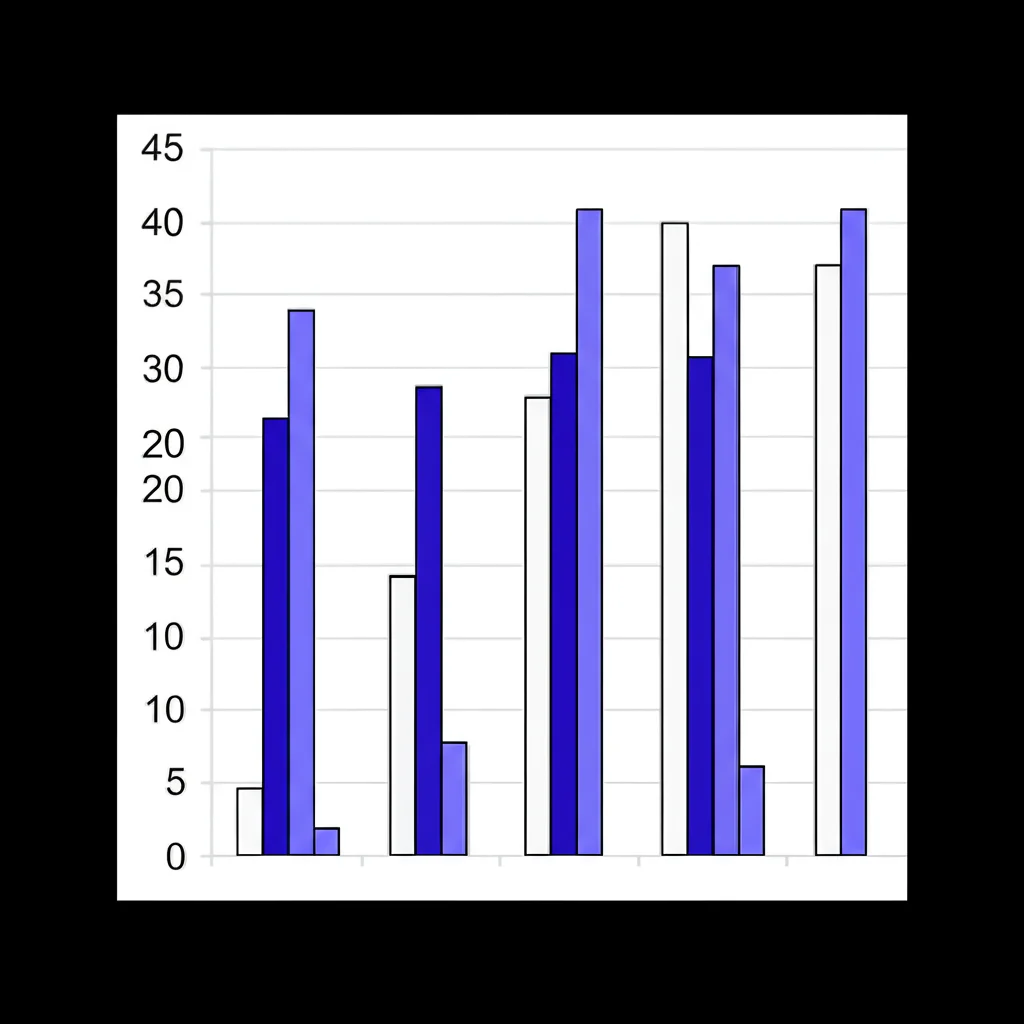

First, they used standard human personality assessments, the *IPIP-NEO* and the *Big Five Inventory (BFI)*, to measure Mobi’s configured personality traits. They compared Mobi’s results with the researcher’s own profile, and they also ran 30 personality simulations whose results were compared against those of 31 human subjects.

The results were pretty convincing!

- Constancy: Mobi’s personality scores were highly correlated between the two different assessment scales (IPIP-NEO and BFI), showing the simulation was consistent.

- Effectiveness: Mobi’s scores also correlated well with the researcher’s target profile, meaning it effectively simulated the desired personality.

- Construct Validity: Comparing the 30 simulations with the 31 human subjects confirmed that the personality model itself holds up, with reliability and validity similar to those of human responses on these tests. The model also covered all five Big Five traits, even though it was built on Cattell’s and Kelly’s theories rather than on the Big Five itself.
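Constancy and effectiveness both come down to correlating two sets of scores. Here’s a from-scratch Pearson correlation in Python; the score lists in the example are made up for illustration and are not the paper’s data.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of the same length (at least 2 items)")
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant list")
    return cov / (sx * sy)

# Made-up Big Five domain scores (O, C, E, A, N), for illustration only
ipip_neo = [3.9, 4.2, 3.5, 4.6, 2.1]
bfi = [3.7, 4.4, 3.6, 4.5, 2.3]
print(f"IPIP-NEO vs. BFI: r = {pearson(ipip_neo, bfi):.2f}")
```

A value close to 1 would mean the two instruments rank the traits the same way, which is what "constancy" is checking; the same function applied to Mobi’s scores and the target profile would check "effectiveness".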

Beyond just personality traits, they tested Mobi’s *Theory of Mind (ToM)*. ToM is that human ability to understand that others have their own thoughts, beliefs, and intentions, which might be different from our own. It’s crucial for social interaction.

They used classic tests like the *Sally-Anne test* and the more complex *ToMi dataset*, which was designed specifically for evaluating AI’s social cognition. Mobi passed the Sally-Anne test instance, showing it could understand false beliefs. On the ToMi dataset, which tests understanding of reality, memory, first-order beliefs (what A thinks), and second-order beliefs (what A thinks B thinks), Mobi performed really well, especially on memory and second-order beliefs, arguably the more complex aspects of ToM! This suggests the cognitive architecture does a good job of simulating how humans reason about others’ minds.
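To see what these four kinds of questions actually ask, here’s a hand-coded Python belief tracker for the Sally-Anne story. It’s purely illustrative: Mobi answers these questions through its LLM-based cognitive framework, not with a rule-based tracker like this one, and the class and method names are hypothetical.

```python
class BeliefTracker:
    """Hand-coded tracker for Sally-Anne/ToMi-style questions (illustration only)."""

    def __init__(self, agents, obj, location):
        self.present = set(agents)
        self.initial = {obj: location}           # answers "memory" questions
        self.location = {obj: location}          # answers "reality" questions
        # First-order: belief[a][obj] is where a thinks obj is
        self.belief = {a: {obj: location} for a in agents}
        # Second-order: belief2[(a, b)][obj] is where a thinks b thinks obj is
        self.belief2 = {(a, b): {obj: location}
                        for a in agents for b in agents if a != b}

    def leave(self, agent):
        self.present.discard(agent)

    def enter(self, agent):
        self.present.add(agent)

    def move(self, obj, new_location):
        self.location[obj] = new_location
        for a in self.present:                   # only witnesses update their beliefs
            self.belief[a][obj] = new_location
            for b in self.present:
                if a != b:                       # a saw that b saw it too
                    self.belief2[(a, b)][obj] = new_location


# The classic story: Sally leaves, Anne moves the marble, Sally comes back.
story = BeliefTracker(["Sally", "Anne"], "marble", "basket")
story.leave("Sally")
story.move("marble", "box")
story.enter("Sally")
print("Reality:", story.location["marble"])                      # box
print("Memory:", story.initial["marble"])                         # basket
print("Where will Sally look?", story.belief["Sally"]["marble"])  # basket
print("Where does Anne think Sally will look?",
      story.belief2[("Anne", "Sally")]["marble"])                 # basket
```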

They even demonstrated Mobi handling real-world-ish scenarios:

- Visual Conversation: Mobi could discuss an image, using its visual processing and memory (recalling a preference for strawberry macarons based on a pink one in the picture).

- Handling Conflict: Mobi could recognize a potentially provocative situation and devise a strategy to avoid conflict, reacting in a way consistent with its programmed personality and simulated emotions.

- Understanding Intent: Mobi could understand a user’s request to set a reminder, interpreting the underlying intention and taking an appropriate action.
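One common way to turn an understood intention into an action is to have the LLM emit structured output and dispatch on it. This Python sketch assumes a hypothetical JSON shape with `intent`, `time`, and `text` fields; the paper doesn’t specify Mobi’s action interface.

```python
import json
from datetime import datetime

def handle_intent(llm_output: str, reminders: list) -> str:
    """Dispatch a structured intent (assumed to be JSON produced by the LLM) to an action."""
    try:
        data = json.loads(llm_output)
    except json.JSONDecodeError:
        return "Sorry, I didn't catch that."
    if not isinstance(data, dict):
        return "Sorry, I didn't catch that."
    if data.get("intent") == "set_reminder":
        when = datetime.fromisoformat(data["time"])
        reminders.append((when, data["text"]))
        return f"OK, I'll remind you to {data['text']} at {when:%H:%M}."
    return "Sorry, I'm not sure what you'd like me to do."


reminders = []
reply = handle_intent(
    '{"intent": "set_reminder", "time": "2024-05-01T15:30:00", "text": "call mom"}',
    reminders,
)
print(reply)  # OK, I'll remind you to call mom at 15:30.
```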

What’s Next? The Road Ahead

This is all incredibly promising, but it’s still early days. One big challenge is *latency*. Right now, the processing takes 10-15 seconds, which is fine for texting but too slow for smooth verbal conversation. As LLMs get faster, this should improve.

Another piece is adding non-verbal communication – think tones of voice, facial expressions (if the robot has a face!), gestures. Personality isn’t just what you say, but *how* you say it.

And the big question: what happens over *long-term* interaction? Will the simulated personality hold up over days or months? Will the long-term memory start to dominate the programmed traits? How does this personality design really affect how users feel about the robot over time? These are all areas for future research.

Imagine dynamic personality models that can *evolve* as the robot interacts and learns! Or using this framework to create digital twins that can help predict human behavior based on their personality and experiences. The potential is huge.

Wrapping Up

So, there you have it. This research is a fantastic step towards creating robots that are more than just tools; they’re becoming agents with simulated personalities and cognitive functions that allow for much richer, more human-like interactions. By combining advanced LLMs with psychological theories of personality and cognition, we’re getting closer to a future where robots aren’t just smart, but genuinely engaging and perhaps, in their own way, a little bit soulful. It’s an exciting time in robotics and AI!

Source: Springer