Big Kernels, Better Diagnosis: Revolutionizing Plant Disease Detection with RepLKNet

Hey there, Future Farmers (and Tech Enthusiasts)!

Ever thought about the silent battles happening in fields and gardens around the world? I’m talking about plant diseases. They’re a huge headache, threatening our food supply and livelihoods. For ages, spotting a sick plant often relied on keen human eyes, which is tough work, especially across vast areas.

But guess what? Technology is stepping up! Deep learning, that fancy branch of Artificial Intelligence, has become a superstar at recognizing patterns in images. This is a game-changer for identifying plant diseases faster and more accurately than ever before. We’re talking about using powerful computer vision models to be like super-powered plant doctors!

The Problem with Tiny Magnifying Glasses

Now, a lot of the early deep learning models that got really good at image tasks, especially the classic Convolutional Neural Networks (CNNs), relied on using small “kernels.” Think of a kernel like a tiny magnifying glass that the network slides over an image, looking for features. These kernels are usually really small, like 3×3 or 5×5 pixels. They’re fantastic for picking up fine details – edges, textures, small spots.

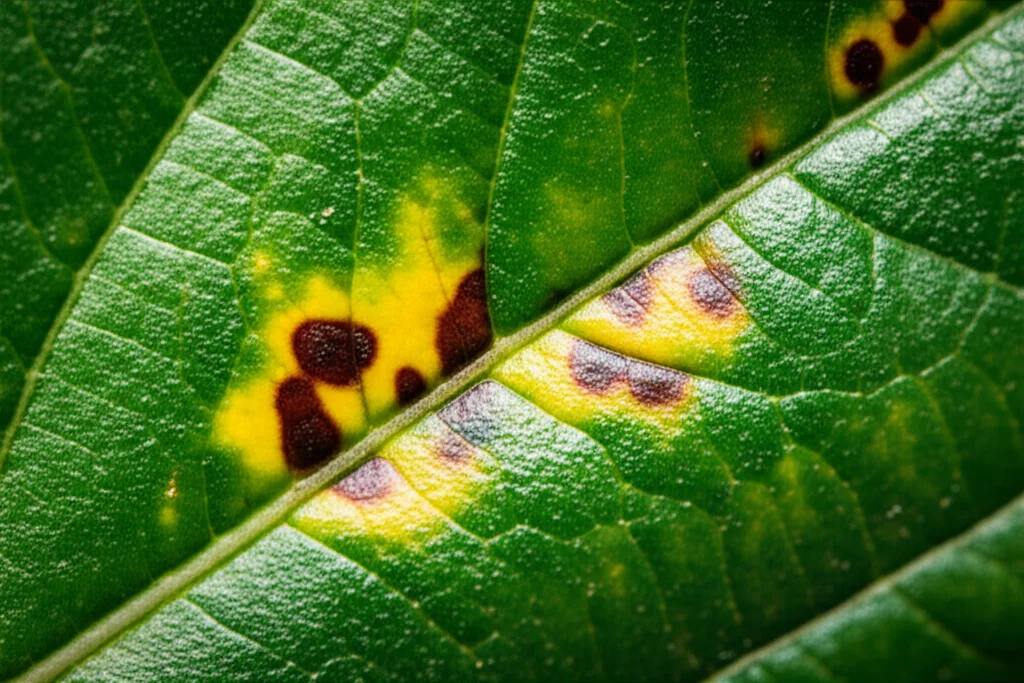

But here’s the catch: plant diseases often show up as large, irregular patches, blights, or patterns spread across a leaf or stem. Those tiny kernels struggle to see this “big picture.” To capture these larger patterns, traditional CNNs have to stack *many* layers of small kernels, which makes the network very deep. Deep stacking has its own costs. The area the network effectively pays attention to grows only slowly with depth, and very deep models are more complex and slower to train. It’s like trying to understand a whole mural by only looking at tiny squares through a small window!
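To put rough numbers on the stacking problem, consider stride-1 convolutions without dilation. Each extra k×k layer widens the theoretical receptive field by k − 1 pixels. The sketch below counts how many 3×3 or 5×5 layers it takes to cover the same region as a single 31×31 kernel. It is a deliberate simplification: it ignores pooling and strides, and the *effective* receptive field of a real network is usually smaller than this theoretical bound.

```python
def stacked_receptive_field(kernel_size: int, num_layers: int) -> int:
    """Theoretical receptive field of `num_layers` stacked stride-1 convs.

    Assumes no pooling, no striding and no dilation: each layer adds
    (kernel_size - 1) pixels to the field of view.
    """
    return 1 + num_layers * (kernel_size - 1)


def layers_needed(kernel_size: int, target_field: int) -> int:
    """Smallest number of stacked layers whose receptive field reaches target_field."""
    layers = 0
    while stacked_receptive_field(kernel_size, layers) < target_field:
        layers += 1
    return layers


if __name__ == "__main__":
    for k in (3, 5, 31):
        print(f"{k}x{k} kernels: {layers_needed(k, 31)} layer(s) to see a 31x31 region")
```

Under these assumptions, 3×3 kernels need 15 stacked layers and 5×5 kernels need 8 to match what one 31×31 kernel sees directly.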

Enter RepLKNet: The Big Kernel Hero

So, what if we used a *much bigger* magnifying glass? That’s the brilliant idea behind RepLKNet! This is a newer type of convolutional network designed specifically to handle *very large* kernels – we’re talking kernels as big as 31×31 pixels! This dramatically expands the network’s “receptive field,” meaning it can see and process a much larger area of the image in a single go. Why is this cool for plant diseases? Because it lets the model directly capture those sweeping, global patterns and irregular shapes that are characteristic of many plant illnesses.

Now, you might think, “Wouldn’t using such huge kernels be incredibly slow and computationally expensive?” And traditionally, you’d be right! But RepLKNet is clever. It uses some neat architectural tricks, like structural re-parameterization and depthwise separable convolutions, that make these large kernels much more practical and efficient than they used to be. It gives us the power of big kernels without the crippling computational cost.
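Here is a toy illustration of the two tricks. Structural re-parameterization relies on convolution being linear. During training, a small kernel can run in parallel with the big one. Afterwards, the small kernel is zero-padded to the big kernel’s size and added to it, so inference runs a single convolution with identical output.

The sketch below checks this on one channel with random values. It assumes a 5×5 parallel branch next to the 31×31 one, and it leaves out the BatchNorm layers that would also be folded into the kernels. A depthwise layer applies the same idea channel by channel. The hypothetical `conv_params` helper shows why depthwise large kernels are affordable: a depthwise kernel is shared by no channel pairs, so its weight count grows with the number of channels, not with its square.

```python
import numpy as np


def conv2d_same(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Single-channel 2D cross-correlation with zero padding ("same" output size).

    Kernel sides are assumed to be odd so the kernel has a well-defined centre.
    """
    kh, kw = kernel.shape
    padded = np.pad(image, ((kh // 2, kh // 2), (kw // 2, kw // 2)))
    out = np.empty_like(image, dtype=float)
    for i in range(image.shape[0]):
        for j in range(image.shape[1]):
            out[i, j] = np.sum(padded[i:i + kh, j:j + kw] * kernel)
    return out


def merge_small_into_large(large: np.ndarray, small: np.ndarray) -> np.ndarray:
    """Zero-pad the small kernel to the large kernel's size and add them.

    Because convolution is linear, conv(x, large) + conv(x, small) equals
    conv(x, merged) when both branches are centred and use "same" padding.
    """
    pad = (large.shape[0] - small.shape[0]) // 2
    return large + np.pad(small, pad)


def conv_params(channels: int, kernel_size: int, depthwise: bool) -> int:
    """Weight count of a channels -> channels conv layer (biases ignored)."""
    per_filter = kernel_size * kernel_size
    return channels * per_filter if depthwise else channels * channels * per_filter


rng = np.random.default_rng(0)
x = rng.standard_normal((48, 48))
k_large = rng.standard_normal((31, 31))  # big training-time branch
k_small = rng.standard_normal((5, 5))    # parallel small-kernel branch (assumed 5x5)

two_branches = conv2d_same(x, k_large) + conv2d_same(x, k_small)
one_branch = conv2d_same(x, merge_small_into_large(k_large, k_small))
print("max difference:", np.abs(two_branches - one_branch).max())

# Hypothetical 256-channel layer, just to compare weight counts.
print("dense 31x31, 256 ch:    ", conv_params(256, 31, depthwise=False))
print("depthwise 31x31, 256 ch:", conv_params(256, 31, depthwise=True))
```

The two-branch and merged outputs differ only by floating-point round-off. The depthwise layer needs 256 times fewer weights than a dense one of the same kernel size.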

The Grand Experiment

To really test if RepLKNet’s big kernels were the answer for plant disease recognition, we needed a serious dataset. We used a massive one called the Plant Diseases Training Dataset. It’s got over 95,000 images covering 14 different plant species and 61 distinct categories of diseases (plus healthy samples, of course!). It’s a wonderfully diverse collection, showing all sorts of symptoms on different leaves and plants. This kind of scale and variety is crucial for training a model that can handle the real world.

We trained our RepLKNet model on this dataset. We used a technique called transfer learning, which is like giving the model a head start by using knowledge it gained from seeing millions of other images (specifically, from training on the huge ImageNet dataset). Then, we fine-tuned it specifically for spotting plant diseases. We also used a rigorous testing method called 5-fold cross-validation, which basically splits the data into five different groups and trains/tests the model five times, using a different group for testing each time. This helps ensure the results are robust and not just lucky for one particular data split.
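The 5-fold splitting logic can be sketched in a few lines. The post doesn’t say whether the folds were stratified by class, so this minimal version simply shuffles once and cuts the data into five nearly equal folds. In practice, each training split would be used to fine-tune the ImageNet-pretrained model, which isn’t shown here.

```python
import random
from typing import Iterator, List, Tuple


def k_fold_indices(n_samples: int, k: int = 5, seed: int = 0) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_indices, test_indices) for each of k folds.

    Samples are shuffled once, then cut into k nearly equal folds; each fold
    serves as the test set exactly once while the other k-1 folds are used
    for training.
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size


if __name__ == "__main__":
    for fold, (train, test) in enumerate(k_fold_indices(n_samples=23, k=5)):
        print(f"fold {fold}: {len(train)} train / {len(test)} test")
```

Every sample lands in exactly one test fold, so averaging the five test scores uses the whole dataset for evaluation.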

The Results Are In!

So, how did RepLKNet do? In a nutshell: really well! Across the 5-fold cross-validation, our model achieved an impressive Overall Accuracy (OA) of 96.03%. That means, on average, it correctly identified the plant’s condition (healthy or which disease) over 96% of the time! It also reached an Average Accuracy (AA) of 94.78% and a Kappa coefficient of 95.86%. AA averages the accuracy of each category separately, so rare diseases count as much as common ones. Kappa measures agreement after discounting what lucky guessing would get right. Together, these metrics show the model is balanced and reliable across different disease categories, not just good at the most common ones.
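For readers who want to compute these metrics themselves, here is a minimal sketch that derives all three from a confusion matrix. The 3-class matrix in the usage lines is made-up toy data, not from the study.

```python
import numpy as np


def classification_metrics(confusion: np.ndarray) -> dict:
    """OA, AA and Cohen's kappa from a confusion matrix.

    confusion[i, j] counts samples whose true class is i and predicted class is j.
    """
    confusion = np.asarray(confusion, dtype=float)
    total = confusion.sum()

    # Fraction of all samples classified correctly.
    overall_accuracy = np.trace(confusion) / total
    # Per-class accuracy (recall), then a plain mean so every class weighs the same.
    per_class = np.diag(confusion) / confusion.sum(axis=1)
    average_accuracy = per_class.mean()

    # Agreement expected by chance, from the true-class and predicted-class totals.
    expected = (confusion.sum(axis=1) * confusion.sum(axis=0)).sum() / total**2
    kappa = (overall_accuracy - expected) / (1 - expected)
    return {"OA": overall_accuracy, "AA": average_accuracy, "kappa": kappa}


# Toy example with hypothetical counts (rows = true class, columns = prediction).
toy = np.array([[50, 2, 3],
                [5, 30, 0],
                [1, 1, 8]])
print(classification_metrics(toy))
```

In this toy case, OA is 0.88. AA is lower (about 0.86) because the small third class is classified less accurately. Kappa is lower still (about 0.79) because much of the raw agreement could be expected from the class proportions alone.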

How does that stack up against others? We compared it to some well-known CNNs like ResNet50 and GoogLeNet. RepLKNet showed competitive or even superior performance, suggesting that its architectural design, especially those large kernels, gives it an edge in this task. What’s more, the performance was very stable across all five folds of testing, with minimal variation. This tells us the model is robust and dependable.

Why Big Kernels Win (The Proof is in the Pudding!)

To really confirm that the large kernels were making the difference, we did an “ablation study.” This is where you remove or change a key part of your model to see how it affects performance. We replaced the large 31×31 kernels in RepLKNet with smaller, more traditional 3×3 or 5×5 kernels and re-ran the tests. The results were clear: using the smaller kernels led to a drop in accuracy – up to 1.1% in OA and 1.3% in AA! This is solid evidence that those big kernels are crucial for capturing the complex patterns needed for accurate plant disease recognition.

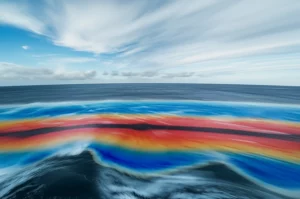

Seeing What the Model Sees

Want to see *how* the model is making its decisions? We used a cool technique called Grad-CAM (Gradient-weighted Class Activation Mapping) to generate heatmaps. These heatmaps show which parts of the image the model paid the most attention to when deciding on a diagnosis. Warmer colors mean higher attention.

When we applied Grad-CAM to images of diseased leaves (like peach, bell pepper, and grape), the heatmaps showed the model’s attention (the red and yellow areas) was heavily concentrated right on the visible disease symptoms – the spots, discolorations, and lesions. This is fantastic! It confirms that RepLKNet is effectively learning to focus on the actual signs of illness. And because of its large kernels, it’s able to look at a broader area, capturing the full extent and pattern of the disease, which smaller kernels might miss or only see piecemeal.
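The core of Grad-CAM is short. Each feature map of a chosen convolutional layer gets a weight equal to its spatially averaged gradient with respect to the target class score. The heatmap is the ReLU of the weighted sum of those maps.

This is a minimal NumPy sketch of that computation. It assumes the activations and gradients have already been captured, for example with hooks in a deep learning framework. It leaves out upsampling the map to image size and overlaying a colormap. The post doesn’t say which RepLKNet layer was used, and the 2-channel arrays below are toy data.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM heatmap from one convolutional layer.

    activations: feature maps A of shape (channels, height, width) for one image.
    gradients:   d(score of the target class)/dA, same shape as activations.
    Returns a (height, width) map scaled to [0, 1]; higher means more influence.
    """
    # One weight per channel: the spatially averaged gradient.
    weights = gradients.mean(axis=(1, 2))
    # Weighted sum of the feature maps, keeping only positive evidence (ReLU).
    cam = np.maximum(np.tensordot(weights, activations, axes=1), 0.0)
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Toy data: channel 0 fires on the top-left quadrant and supports the class;
# channel 1 fires on the bottom-right quadrant and argues against it.
acts = np.zeros((2, 4, 4))
acts[0, :2, :2] = 1.0
acts[1, 2:, 2:] = 1.0
grads = np.stack([np.full((4, 4), 0.5), np.full((4, 4), -0.5)])

cam = grad_cam(acts, grads)
print(cam)
```

Only the top-left quadrant lights up, because the ReLU discards regions that push the prediction away from the target class. That is why Grad-CAM maps tend to sit on the symptoms that support a diagnosis.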

What’s Next? (Always Room to Grow!)

Now, while this is super exciting progress, there’s always room for improvement. Like any research, our method has limitations. For instance, while our dataset is big, the world of plant diseases is *huge*. The model might struggle with diseases or plant types it hasn’t seen before. Also, our simple image cropping method might accidentally cut off important symptoms near the edges of an image. And while the model is accurate, making it fast enough to run instantly on a phone or drone in a field for real-time detection is still a challenge.
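To get a feel for how much a crop can throw away, here is a small calculation. The post doesn’t describe its exact cropping step, so this assumes a common ImageNet-style recipe: resize to 256×256, then take a 224×224 center crop. The helper names are hypothetical.

```python
def center_crop_box(width: int, height: int, crop: int) -> tuple:
    """(left, top, right, bottom) of a centred crop x crop window."""
    left = (width - crop) // 2
    top = (height - crop) // 2
    return left, top, left + crop, top + crop


def discarded_fraction(width: int, height: int, crop: int) -> float:
    """Share of the (already resized) image that falls outside the centre crop."""
    return 1 - (crop * crop) / (width * height)


if __name__ == "__main__":
    print(center_crop_box(256, 256, 224))
    print(f"{discarded_fraction(256, 256, 224):.1%} of pixels dropped")
```

Under this assumed recipe, a 16-pixel border on every side, almost a quarter of the pixels, never reaches the model. A lesion sitting near the leaf’s edge in the frame could easily fall inside that band.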

Future work will definitely need to address these points. Maybe using even larger and more diverse datasets, exploring smarter ways to preprocess images (like focusing on the diseased areas automatically), or developing hybrid models that combine the strengths of large kernels with other techniques could help. And making the models lighter and faster for practical use in the field is a big goal.

The Big Picture

So, what’s the takeaway? Our study shows that RepLKNet, with its innovative use of very large convolutional kernels, is a seriously powerful tool for automated agricultural disease recognition. It achieves high accuracy, is robust, and its architecture is particularly well-suited for capturing the complex, often large-scale patterns of plant diseases. This is a significant step forward in using AI to help protect crops, improve yields, and contribute to global food security. It’s pretty cool to think that clever network design can make such a real-world difference!

Source: Springer