Unlocking Rare Thyroid Cancer Diagnosis with AI: The Tiger Model Story

Alright folks, let’s dive into something pretty cool and super important happening in the world of medical AI. We’re talking about tackling a real challenge: diagnosing those sneaky, rare types of thyroid cancer using ultrasound images.

The Big Problem with Rare Diseases and AI

You see, AI is getting seriously good at looking at medical images and helping doctors figure things out. It’s like having a super-powered assistant. But here’s the catch: AI models learn from data. Lots and lots of data. And when you’re dealing with rare diseases or specific rare subtypes of a common disease, well, the data is just… scarce. It’s like trying to teach someone about a specific type of exotic bird when you’ve only shown them pictures of pigeons.

In the case of thyroid cancer, the most common type, Papillary Thyroid Carcinoma (PTC), has plenty of examples for AI to learn from. But there are rarer subtypes like Follicular Thyroid Carcinoma (FTC), Medullary Thyroid Carcinoma (MTC), and Anaplastic Thyroid Carcinoma (ATC) that don’t show up nearly as often. This means AI models trained on standard datasets often struggle to recognize them. They might miss them entirely or mistake them for something else, leading to misdiagnosis or delayed treatment. And honestly, that’s just not fair to the patients dealing with these conditions. It’s a significant barrier to making medical AI truly equitable and widespread.

Traditional ways of boosting data, like just rotating or flipping images, don’t really help capture the unique features of these rare conditions. You need something smarter.
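To make that limitation concrete, here is a minimal sketch of what flipping and rotating actually do. The 3x3 grid is made-up toy data standing in for an image, and the helper names are ours, not anything from the study.

```python
# Toy illustration: classic geometric augmentation on a tiny "image".
# The 3x3 grid is invented data, not a real ultrasound frame.

def flip_horizontal(image):
    """Mirror each row left to right."""
    return [row[::-1] for row in image]

def rotate_90_clockwise(image):
    """Rotate the grid 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]

image = [
    [0, 0, 1],
    [0, 9, 1],
    [0, 0, 1],
]

flipped = flip_horizontal(image)
rotated = rotate_90_clockwise(image)

# Both variants hold exactly the same pixel values as the original:
# the content is rearranged, but no new nodule appearance is created.
same_pixels = (
    sorted(sum(image, [])) == sorted(sum(flipped, [])) == sorted(sum(rotated, []))
)
print(same_pixels)
```

The augmented copies are just the same pixels in new positions, which is why this kind of augmentation cannot supply features the model has never seen.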

Introducing the Tiger Model: A Text-Guided Game Changer

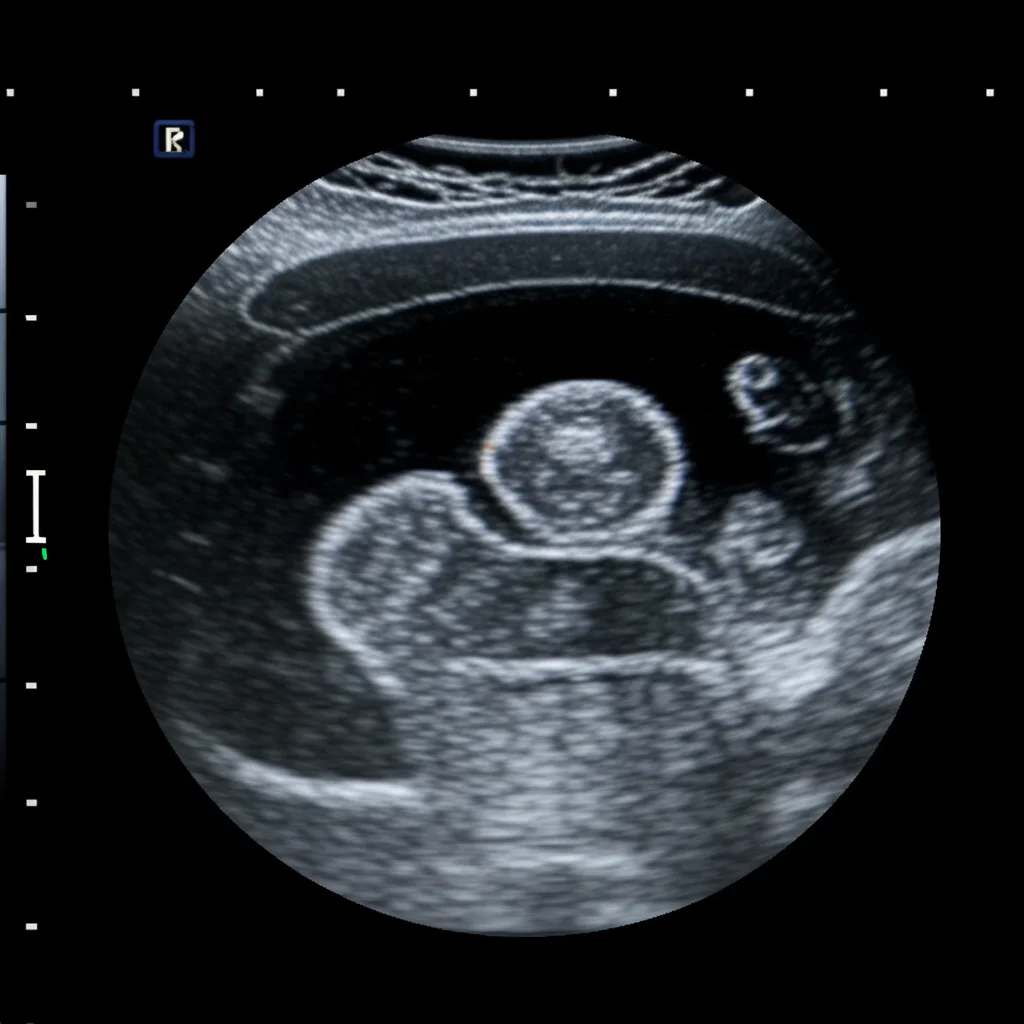

So, what do we do when we don’t have enough real-world examples? We get creative! Our team came up with a neat idea: what if we could *generate* realistic, synthetic medical images of these rare subtypes? But not just random images – images guided by the detailed knowledge doctors have about what these rare cancers actually look like on an ultrasound.

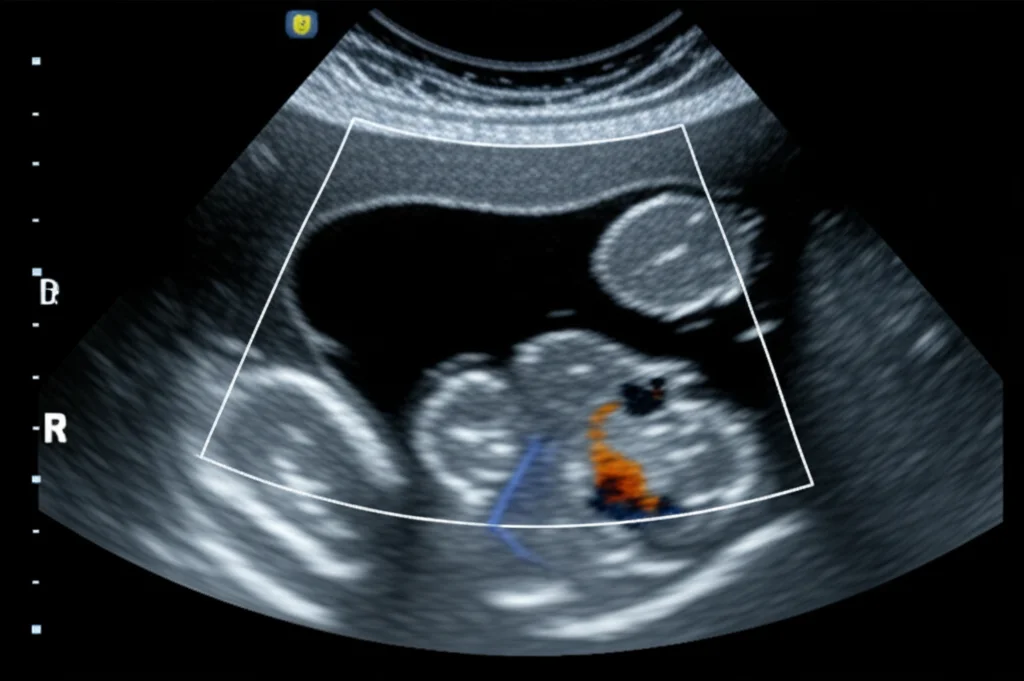

That’s where the **Tiger Model** comes in. Think of it as a special kind of AI that can create images based on text descriptions. We fused clinical insights – all those specific details about how a rare thyroid nodule might appear (its shape, texture, edges, location, etc.) – with powerful image generation technology, specifically diffusion models.

The goal? To produce synthetic ultrasound images that are so realistic and diverse, they can effectively teach AI models to spot these rare subtypes, even when real-world data is limited.

How We Taught the Tiger to Generate Images

Building the Tiger Model wasn’t just about throwing images and text together. We had to be smart about it.

First, we built a detailed knowledge base. We pored over medical literature and clinical reports to figure out the key differences in imaging features between common and rare thyroid nodules. Things like:

- Composition

- Echogenicity (how bright it appears)

- Echotexture (its internal pattern)

- Calcification (if it has calcium deposits)

- Shape and Margin (its outline)

- Location within the thyroid

- And more!

We summarized these features, noting what’s common and what’s unique to the rare types.
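One way to picture how a feature summary like this becomes text guidance is a small prompt builder. This is a hypothetical sketch: the field names, the prompt wording, and the example values are all illustrative assumptions, not the study's actual schema or clinical reference values.

```python
# Hypothetical sketch: turning structured nodule features into a text prompt.
# Field names, wording, and example values are illustrative assumptions.

FEATURE_ORDER = [
    "composition", "echogenicity", "echotexture",
    "calcification", "shape", "margin", "location",
]

def build_prompt(subtype, features):
    """Join the provided features, in a fixed order, into one description."""
    parts = [
        f"{name}: {features[name]}"
        for name in FEATURE_ORDER
        if name in features
    ]
    return f"thyroid ultrasound, {subtype} nodule, " + ", ".join(parts)

# Made-up example record; missing features are simply skipped.
example = {
    "composition": "solid",
    "echogenicity": "hypoechoic",
    "margin": "irregular",
    "location": "upper pole",
}

prompt = build_prompt("MTC", example)
print(prompt)
```

Keeping the features in a fixed order means two descriptions of similar nodules produce similar text, which makes it easier to vary one feature at a time.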

Then, we trained the Tiger Model in stages. It first learned the general characteristics of thyroid ultrasound images from a large dataset of common cases. This gave it a solid foundation. After that, we guided it using our detailed text descriptions to specifically generate the *unique* features of the rare subtypes. By combining these unique rare features with variations of common features (since rare nodules often share some characteristics with benign or common ones), we could synthesize images that were both realistic and diverse.

A clever part of the Tiger Model is how it handles the image. It uses separate components (encoders) to focus on the nodule itself (the “foreground”) and the surrounding thyroid tissue and neck structures (the “background”). This allows for really fine-grained control over the generated image details, making them much more convincing and clinically accurate. We used things like segmentation masks to tell the model exactly where the nodule is and edge detection (like a digital sketch) for the background structure.
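The two conditioning inputs described here can be illustrated on a toy grid. This is a rough sketch under our own assumptions: the neighbour-difference threshold is a stand-in for a real edge detector, the pixel values are invented, and none of the function names come from the Tiger Model itself.

```python
# Toy sketch of two conditioning inputs: a nodule mask (foreground)
# and an edge map of everything outside the nodule (background).
# The simple gradient threshold is a stand-in chosen for brevity.

def edge_map(image, threshold):
    """Mark pixels whose right or lower neighbour differs by more than threshold."""
    h, w = len(image), len(image[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx = abs(image[y][x + 1] - image[y][x]) if x + 1 < w else 0
            dy = abs(image[y + 1][x] - image[y][x]) if y + 1 < h else 0
            if max(dx, dy) > threshold:
                edges[y][x] = 1
    return edges

def background_edges(image, mask, threshold=50):
    """Keep edges only where the nodule mask is 0."""
    edges = edge_map(image, threshold)
    return [
        [e * (1 - m) for e, m in zip(edge_row, mask_row)]
        for edge_row, mask_row in zip(edges, mask)
    ]

# Invented 4x4 "image": a bright band on top, a nodule in the lower middle.
image = [
    [200, 200, 200, 200],
    [20, 20, 20, 20],
    [20, 120, 120, 20],
    [20, 120, 120, 20],
]
# Segmentation mask: 1 marks nodule pixels.
mask = [
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
]

bg = background_edges(image, mask)
for row in bg:
    print(row)
```

The mask tells a generator where the nodule sits, while the masked edge map sketches the surrounding anatomy without leaking the nodule's own interior.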

The training data for the Tiger Model included a massive collection of ultrasound images and their corresponding text reports. We’re talking over 40,000 patients and nearly 190,000 images! Even with this large dataset, the rare subtypes were still, well, rare.

Once the Tiger Model was trained, it could generate augmented datasets. We then combined these synthetic images with the real ones to train standard classification models – the kind that actually make the diagnosis.
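As a rough illustration of that mixing step, the sketch below combines real and synthetic samples into one training list. The function name, the `synthetic_per_real` ratio, and the sample IDs are assumptions made for this example; the study's actual pipeline may differ.

```python
import random

def build_training_set(real, synthetic, synthetic_per_real=1.0, seed=0):
    """Mix real samples with a capped number of synthetic ones, then shuffle."""
    rng = random.Random(seed)
    # Cap the synthetic share relative to the amount of real data.
    n_synth = min(len(synthetic), int(len(real) * synthetic_per_real))
    chosen = rng.sample(synthetic, n_synth)
    # Tag each sample with its origin so it can be audited later.
    mixed = [(img, label, "real") for img, label in real]
    mixed += [(img, label, "synthetic") for img, label in chosen]
    rng.shuffle(mixed)
    return mixed

# Made-up sample IDs: the rare class (FTC) has one real example.
real = [("r1", "PTC"), ("r2", "PTC"), ("r3", "PTC"), ("r4", "FTC")]
synthetic = [("s1", "FTC"), ("s2", "FTC"), ("s3", "FTC")]

train = build_training_set(real, synthetic, synthetic_per_real=0.5)
n_ftc = sum(1 for _, label, _ in train if label == "FTC")
print(len(train), n_ftc)
```

Keeping the origin tag on every sample makes it easy to evaluate on real images only, so synthetic data never leaks into the test set.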

Putting the Tiger to the Test

Okay, generating images is one thing, but are they any good? Do they actually help AI diagnose better? We ran a bunch of tests to find out.

We evaluated the quality of the generated images using several technical metrics. Compared to other generative methods like Stable Diffusion, the Tiger Model’s images were significantly better at preserving structural information, were more realistic, and showed greater diversity. This means they weren’t just spitting out slightly different versions of the same limited rare examples; they were creating genuinely new, plausible variations.

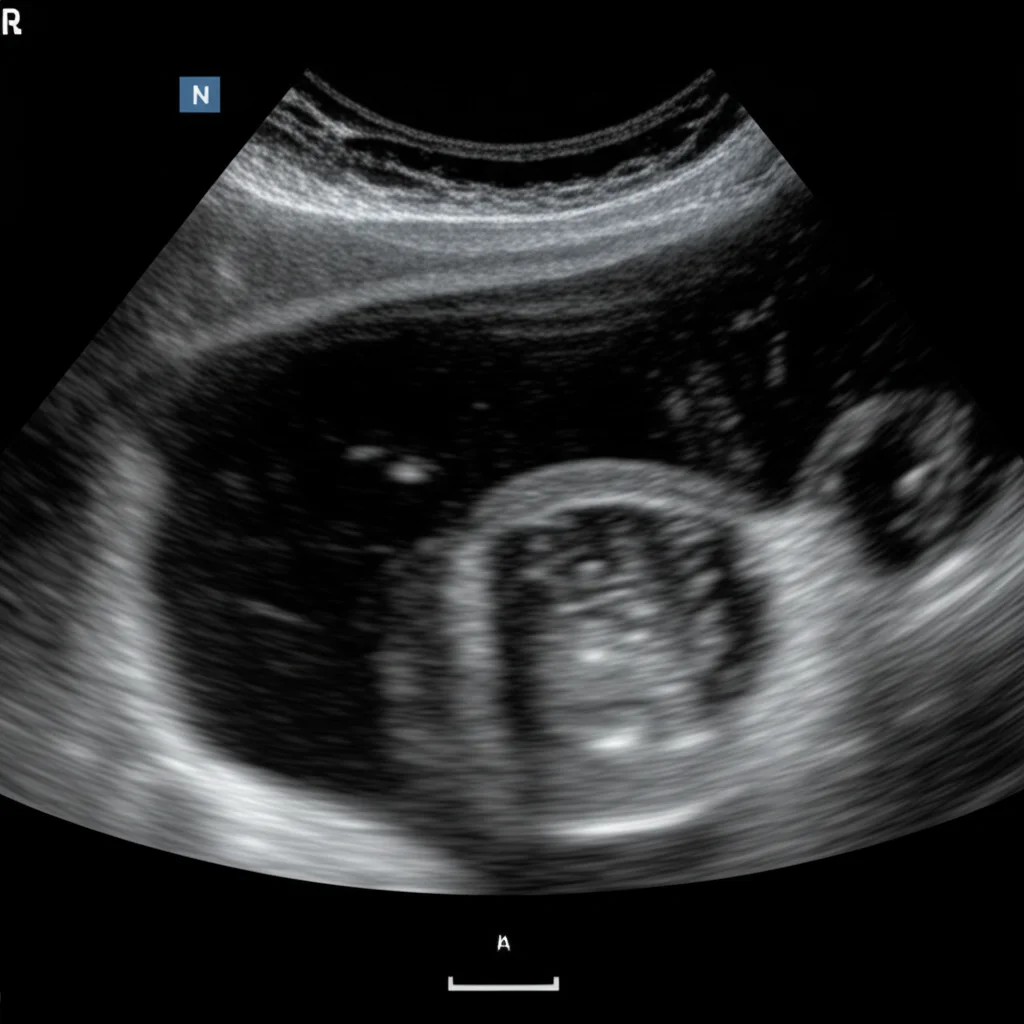

But the real test? Asking the experts! We conducted “Turing tests” with 50 experienced ultrasound physicians.

In the first test, doctors were shown images (real or generated) and asked to tell if they were fake. Doctors were fooled by the Tiger Model’s images over 92% of the time, while images from other methods were correctly identified as fake much more often (only fooling doctors about 34% of the time). That’s a pretty strong endorsement of the realism!
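For readers who want the arithmetic behind a "fooled" percentage, here is one plausible way to compute it. The record layout and the four example judgements are invented for illustration and are not the study's data.

```python
def fool_rate(judgements):
    """Share of generated images that readers labelled as real."""
    generated = [j for j in judgements if j["is_generated"]]
    fooled = sum(1 for j in generated if j["rated_real"])
    return fooled / len(generated)

# Invented judgements: four generated images and one real image.
judgements = [
    {"is_generated": True, "rated_real": True},
    {"is_generated": True, "rated_real": True},
    {"is_generated": True, "rated_real": False},
    {"is_generated": True, "rated_real": True},
    {"is_generated": False, "rated_real": True},
]

rate = fool_rate(judgements)
print(f"{rate:.0%}")
```

Only generated images count toward the denominator, so correctly recognising a real image as real does not inflate the rate.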

In the second test, doctors had to pick the image that matched a text description from a set of four. This tested whether the generated images accurately reflected specific features, especially for rare subtypes. Doctors picked the correct image significantly more often with the Tiger Model’s images than with images from other methods. Junior doctors saw over a 31% improvement compared to Stable Diffusion, and senior doctors saw over a 37% improvement!

The third test asked doctors to identify specific radiological features within the generated images. Again, the Tiger Model’s images were highly accurate in representing these features, closely matching the accuracy seen with real images.

These tests, both the technical metrics and the human evaluation by doctors, showed that the Tiger Model generates images that are highly realistic, diverse, and clinically meaningful.

The Impact: Better Diagnosis for Rare Cancers

So, does this translate into better diagnosis? Absolutely! We trained AI classification models using the augmented datasets created by the Tiger Model and compared their performance on diagnosing rare thyroid cancer subtypes (FTC and MTC).

The results were super encouraging. Using the Tiger Model’s feature-guided augmentation (Tiger-F) led to a substantial increase in diagnostic accuracy metrics like AUC (Area Under the Curve), sensitivity (correctly identifying malignant cases), and specificity (correctly identifying benign cases) for both FTC and MTC, compared to models trained without this augmentation or with traditional methods. For FTC, we saw an AUC increase of over 14%!
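If these three metrics are new to you, the sketch below computes them on a handful of made-up scores. It is a plain-Python illustration of the standard definitions, not the evaluation code used in the study; the labels, scores, and the 0.5 threshold are assumptions.

```python
def sensitivity_specificity(labels, scores, threshold=0.5):
    """Sensitivity = TP / (TP + FN); specificity = TN / (TN + FP)."""
    pairs = list(zip(labels, scores))
    tp = sum(1 for y, s in pairs if y == 1 and s >= threshold)
    fn = sum(1 for y, s in pairs if y == 1 and s < threshold)
    tn = sum(1 for y, s in pairs if y == 0 and s < threshold)
    fp = sum(1 for y, s in pairs if y == 0 and s >= threshold)
    return tp / (tp + fn), tn / (tn + fp)

def auc(labels, scores):
    """Chance that a random positive outscores a random negative (ties count half)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos
        for n in neg
    )
    return wins / (len(pos) * len(neg))

# Made-up example: 1 = malignant, 0 = benign.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.7, 0.4, 0.6, 0.3, 0.2]

sens, spec = sensitivity_specificity(labels, scores)
area = auc(labels, scores)
print(round(sens, 3), round(spec, 3), round(area, 3))
```

Sensitivity and specificity depend on the chosen threshold, while AUC summarizes ranking quality across all thresholds, which is why papers usually report all three.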

We also tested how performance changed as we added more generated data. While other methods saw performance plateau or even drop after adding a certain amount of synthetic data (likely because their generated images lacked diversity), the Tiger Model showed a more stable and sustained improvement as more data was added. This highlights the importance of generating *diverse* samples, not just more samples.

Importantly, we found that adding Tiger Model-generated data to the training set resulted in diagnostic performance for rare subtypes that was very close to what you’d get if you could magically add an equal number of *real* rare images. Since getting real rare images is incredibly hard, this is a huge win!

And it’s not just thyroid cancer! We tested the Tiger Model’s ability to generalize by applying it to other rare conditions and imaging modalities: rare breast cancer subtypes (like ILC and PCB) on ultrasound, and rare findings such as bronchopneumonia on pediatric chest X-rays. In both cases, the Tiger Model-augmented datasets led to improved diagnostic performance compared to other methods. This suggests the approach is broadly applicable to tackling data scarcity in medical imaging for various rare conditions.

Why This Matters Clinically

Improving the diagnosis of rare thyroid cancer subtypes is a big deal. Currently, standard procedures often follow guidelines for the common types, which might not be ideal for rarer, more aggressive forms like MTC that are prone to metastasis. A more accurate diagnosis before treatment begins could lead to more targeted treatments, potentially improving patient outcomes. Ultrasound is already a preferred, cost-effective method for evaluating thyroid nodules, so boosting its diagnostic power, especially for rare cases, is clinically significant.

The Tiger Model’s ability to generate realistic, diverse images based on detailed clinical descriptions directly addresses the core problem of sample scarcity and lack of representativeness for rare subtypes. It essentially creates a richer training ground for AI.

Beyond just improving AI models, the Tiger Model has the potential to be a valuable tool for doctors. By generating images that visualize specific features based on text descriptions, it can help clinicians better understand the subtle differences between similar-looking nodules, particularly in rare cases they might not encounter often. It could even be used as a training tool!

Looking Ahead

Of course, no research is without its limitations. Our study focused mainly on thyroid cancer, and while we showed generalization, applying it to a wider range of rare diseases and patient demographics (different ages, genders, ethnicities) is the next step. We also need to dig deeper into the exact relationship between the amount of generated data and the boost in predictive accuracy. And yes, sometimes the generated medical images still have minor quality quirks – medical imaging is tricky!

But the potential is massive. The Tiger Model offers a promising way to create high-fidelity, clinically relevant synthetic data for rare conditions where real data is hard to come by. This could lead to more accurate and reliable AI diagnostic tools, ensuring that patients with rare diseases, including those from minority populations, have more equitable access to the benefits of advanced medical AI. It’s about making sure no rare case gets left behind.

Source: Springer