Seeing Clearly: How OBoctNet’s Smart AI Boosts Eye Scan Analysis

Hey there! Let’s chat about something pretty cool happening in the world of eye care and artificial intelligence. You know how important those eye scans (called OCT scans, short for optical coherence tomography) are for spotting all sorts of eye issues? Traditionally, brilliant doctors have to pore over these images, manually looking for tiny clues, what we call “biomarkers”, that signal a problem. It’s precise work, but boy, is it time-consuming!

Now, with the explosion of medical imaging, especially in ophthalmology, folks have been turning to deep learning, a fancy type of AI, to lend a hand. The idea is to speed things up and make diagnosis even more accurate. Sounds great, right? But here’s the snag: these powerful AI models usually need *tons* of perfectly labeled data to learn from. And getting medical experts to meticulously label thousands upon thousands of scans? That’s a massive, expensive, and slow job. It’s like trying to teach a student with only a handful of textbooks!

That’s where something truly neat comes in. I want to tell you about a new approach called **OBoctNet**. Think of it as a super-smart assistant for eye doctors, designed specifically to tackle that data problem head-on.

Why Eye Biomarkers Are a Big Deal

Okay, first off, what exactly are these “ophthalmic biomarkers”? Basically, they’re little indicators – measurable signs – in your eye that tell us something about its health, whether it’s happy and healthy or showing signs of trouble. You can find them in things like blood, tears, or the eye tissue itself, often spotted using imaging tech like those OCT scans we talked about.

Spotting these biomarkers is *crucial* for managing serious conditions like diabetic retinopathy (DR) and diabetic macular edema (DME). If you or someone you know has diabetes, you know these are major concerns. We’re talking about conditions that affect millions globally, and sadly, the numbers are only expected to grow.

This is where computer science swoops in like a superhero. By using machine learning to automate the analysis of OCT scans, we can potentially handle the huge volume of scans coming in, identify those critical biomarkers reliably without needing endless manual annotations, and help doctors manage these chronic conditions more effectively. It could even pave the way for new treatments!

The AI Landscape: Great Strides, Stubborn Hurdles

Lots of smart people have been working on using AI for eye biomarker detection, and they’ve made some fantastic progress in boosting accuracy and speed. They’ve tried different techniques to get around the limited data issue.

* Some have used *contrastive learning*, where the AI learns by pulling similar images together and pushing dissimilar ones apart. That gets tricky when the clinical information used to decide which images count as “similar” doesn’t line up neatly with the biomarkers, or when the biomarkers are really tiny or spread out.

* Others have explored *self-supervised learning (SSL)*, training the AI on unlabeled data first before fine-tuning it. This can work well, but it often needs *huge* datasets and a lot of computing power, which isn’t always practical in a clinical setting.

* Then there are the straight-up *fully supervised* methods. These are super accurate *if* you have massive, perfectly labeled datasets. But again, getting those labels is the bottleneck. Plus, models trained this way can sometimes struggle to work well on scans from different machines or different groups of patients.

* Some binary models are great at telling a healthy eye from a sick one but don’t necessarily pinpoint *which* biomarker is present, and they can be a bit of a “black box” – you don’t always know *why* they made a certain decision.

Many of these existing methods also struggle because medical datasets are often small, which makes the AI prone to “overfitting” (becoming too specialized to the training data and failing on new scans). And crucially, they often lack *interpretability*. Doctors need to trust the AI, and that means understanding *how* it reached its conclusion. This is where “Explainable AI” comes in, but it’s often missing from these models.

So, yeah, big steps have been taken, but there’s definitely a need for AI that’s accurate, works with less labeled data, and can explain itself.

OBoctNet: The Smart, Semi-Supervised Solution

This is where OBoctNet steps onto the scene. It’s a *novel semi-supervised* approach. What does that mean? Instead of needing *everything* labeled (fully supervised) or learning mostly on its own (self-supervised), it cleverly uses a *small* amount of labeled data and then figures out how to make the best use of the *much larger* amount of unlabeled data.

The secret sauce is a **two-stage training strategy** powered by **active learning**. Imagine the AI looking at all the unlabeled scans and saying, “Hmm, I’m not quite sure about *this* one. This looks like a really informative example! Let’s get a doctor to label *this* specific scan, and I’ll learn a lot from it.” That’s active learning – the AI actively selects the most valuable data points to learn from, rather than passively accepting whatever it’s given. This is brilliant for datasets like the OLIVES one they used, where only a tiny fraction (12%) is initially labeled. It helps the AI focus its learning where it’s needed most.

OBoctNet doesn’t just guess; it uses a smart filtering system and measures its own uncertainty (using something called entropy, which is highest when a prediction sits near 50/50 and lowest when it’s close to a clear yes or no) to decide which unlabeled scans it’s confident enough to “pseudo-label” (assign a temporary label based on its current knowledge) and which ones are too ambiguous and should be flagged for expert review. This iterative process means the AI gets smarter and its pseudo-labels get more reliable over time.
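To make the entropy idea concrete, here’s a minimal Python sketch of confidence-based filtering for a multi-label model. It assumes the model outputs one sigmoid probability per biomarker; the function names, the “every biomarker must be confident” rule, and the 0.5-bit threshold are illustrative assumptions, not details taken from the study.

```python
import math
from typing import Dict, List, Tuple


def binary_entropy(p: float) -> float:
    """Entropy (in bits) of one biomarker's predicted probability.
    1.0 at p = 0.5 (maximally unsure), near 0 as p approaches 0 or 1."""
    p = min(max(p, 1e-12), 1 - 1e-12)  # clamp to avoid log(0)
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))


def filter_pseudo_labels(
    probs: Dict[str, List[float]],
    max_entropy: float = 0.5,  # hypothetical threshold, would need tuning
) -> Tuple[Dict[str, List[int]], List[str]]:
    """Split unlabeled scans into pseudo-labeled vs. flagged-for-review.

    probs maps a scan ID to its per-biomarker probabilities. A scan is
    pseudo-labeled only if every biomarker prediction has entropy below
    max_entropy; otherwise the whole scan is flagged for an expert.
    """
    pseudo: Dict[str, List[int]] = {}
    flagged: List[str] = []
    for scan_id, ps in probs.items():
        if max(binary_entropy(p) for p in ps) < max_entropy:
            pseudo[scan_id] = [int(p >= 0.5) for p in ps]  # temporary 0/1 labels
        else:
            flagged.append(scan_id)
    return pseudo, flagged
```

For example, a scan scored `[0.98, 0.03, 0.01, 0.95, 0.02, 0.04]` across six biomarkers gets pseudo-labels `[1, 0, 0, 1, 0, 0]`, while one containing a 0.55 is flagged, since that prediction is close to a coin flip.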

On top of this clever learning strategy, OBoctNet has a robust “brain” for analyzing the images. It uses a network called **CSPResNet50**, which applies the Cross Stage Partial (CSP) design to the classic ResNet-50: each stage splits its feature maps so only part of them goes through the heavy layers, then merges the two parts back together. That cuts redundant computation while keeping the detailed features needed to catch subtle biomarker clues in the OCT scans.
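Here’s a rough, back-of-the-envelope sketch of why the Cross Stage Partial trick saves compute. It’s a toy model, not CSPResNet50’s real layer layout: we assume a stack of plain 3x3 convolutions, route half the channels through that stack, and add a single 1x1 “transition” convolution to re-mix the halves. All function names here are hypothetical.

```python
from typing import Callable, List

Channels = List[List[float]]  # one flat list of activations per channel


def csp_forward(x: Channels, block: Callable[[Channels], Channels]) -> Channels:
    """Cross Stage Partial idea: only half the channels pass through the
    expensive block; the other half bypasses it, then both are concatenated.
    (A real network follows this with a 1x1 transition conv.)"""
    half = len(x) // 2
    bypass, processed = x[:half], x[half:]
    return bypass + block(processed)


def conv3x3_weights(c_in: int, c_out: int) -> int:
    """Weight count of one 3x3 convolution (bias ignored)."""
    return c_in * c_out * 3 * 3


def plain_stack_weights(channels: int, layers: int) -> int:
    """Every layer sees all channels."""
    return layers * conv3x3_weights(channels, channels)


def csp_stack_weights(channels: int, layers: int) -> int:
    """Layers see only half the channels, plus one 1x1 transition conv."""
    half = channels // 2
    transition = channels * channels  # 1x1 conv re-mixing both halves
    return layers * conv3x3_weights(half, half) + transition


print(plain_stack_weights(256, 3), csp_stack_weights(256, 3))
```

With 256 channels and three layers, this toy count drops from 1,769,472 weights to 507,904, roughly a 3.5x reduction. The real savings in CSPResNet50 depend on its actual bottleneck blocks, so treat this only as intuition for the “split, process part, merge” idea.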

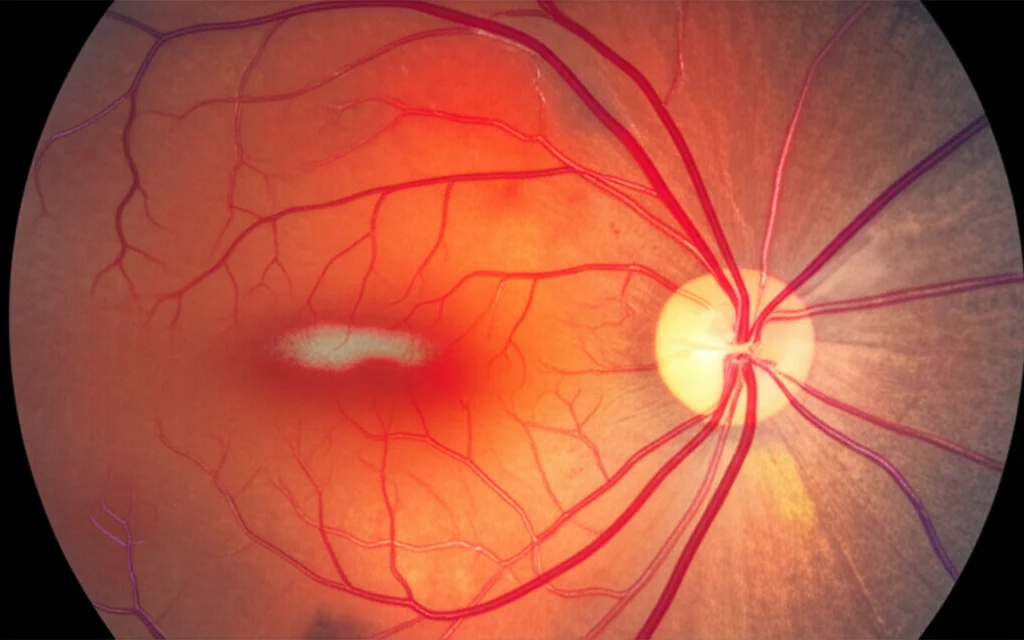

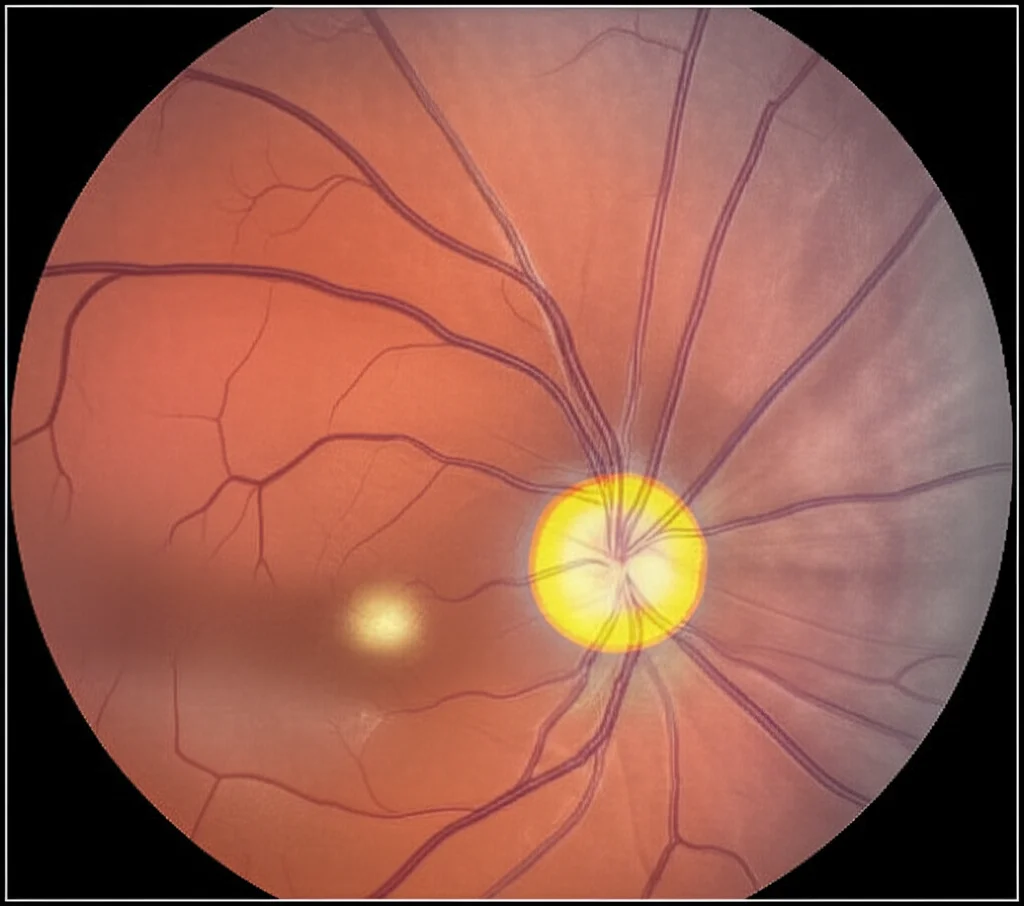

And remember that interpretability issue? OBoctNet integrates **GradCAM (Gradient-weighted Class Activation Mapping)**. This is the Explainable AI part. GradCAM creates heatmaps on the OCT scans that highlight *which* regions of the image most influenced the AI’s decision about a specific biomarker. This isn’t just cool; it’s vital for doctors. They can see *why* the AI thinks a biomarker is present, building trust and helping them validate the AI’s findings.
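The core Grad-CAM computation is surprisingly small. Here’s a minimal pure-Python sketch for one biomarker, assuming you already have the last convolutional layer’s feature maps and the gradients of that biomarker’s score with respect to them (in practice a deep learning framework’s autograd supplies these). The names are illustrative, and a real pipeline would also upsample the map to the scan’s resolution before overlaying it.

```python
from typing import List

Map2D = List[List[float]]


def grad_cam(activations: List[Map2D], gradients: List[Map2D]) -> Map2D:
    """Grad-CAM heatmap for one class (here: one biomarker).

    activations[k] is feature map k from the last conv layer;
    gradients[k] is d(biomarker score) / d(activations[k]).
    """
    h, w = len(activations[0]), len(activations[0][0])
    # 1. Channel importance = global-average-pooled gradient.
    weights = [sum(map(sum, g)) / (h * w) for g in gradients]
    # 2. Weighted sum of the feature maps, then ReLU so only
    #    evidence *for* the biomarker survives.
    cam = [[max(0.0, sum(wk * a[i][j] for wk, a in zip(weights, activations)))
            for j in range(w)]
           for i in range(h)]
    # 3. Scale to [0, 1] so it can be drawn as a heatmap.
    peak = max(max(row) for row in cam)
    return [[v / peak for v in row] for row in cam] if peak > 0 else cam
```

In this toy case, a feature map whose gradients are all positive lights up its active cell, while a map with negative gradients gets suppressed by the ReLU, which is exactly what keeps the heatmap focused on evidence supporting the prediction.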

So, in a nutshell, OBoctNet is designed to:

- Work effectively with limited labeled data.

- Improve accuracy by intelligently using unlabeled data via active learning.

- Efficiently extract features from OCT scans using a specialized network.

- Show *why* it made a decision using Explainable AI (GradCAM).

This makes it a much more practical and trustworthy tool for clinical use.

Putting the System Together

The whole process is a bit like a well-oiled machine. It starts with the dataset (the OLIVES dataset, which is awesome because it’s large, has multi-label biomarkers, and even tracks patients over time – way better than many others!). The scans get prepped (resized, enhanced, etc.). Then, OBoctNet is initially trained on the small batch of labeled data.
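The article only says the scans are “resized, enhanced, etc.”, so here’s a hypothetical stand-in for that prep step: a nearest-neighbour resize to a fixed input size plus min-max intensity stretching. The 224-pixel default and both functions are assumptions for illustration; the study’s actual preprocessing may differ.

```python
from typing import List

Image = List[List[float]]  # grayscale OCT B-scan as rows of pixel values


def resize_nearest(img: Image, out_h: int, out_w: int) -> Image:
    """Nearest-neighbour resize to the network's fixed input size."""
    in_h, in_w = len(img), len(img[0])
    return [[img[i * in_h // out_h][j * in_w // out_w] for j in range(out_w)]
            for i in range(out_h)]


def min_max_normalize(img: Image) -> Image:
    """Stretch intensities to [0, 1]; a simple stand-in for enhancement."""
    lo = min(map(min, img))
    hi = max(map(max, img))
    if hi == lo:  # flat image: avoid division by zero
        return [[0.0 for _ in row] for row in img]
    return [[(v - lo) / (hi - lo) for v in row] for row in img]


def preprocess(img: Image, size: int = 224) -> Image:  # 224 is a hypothetical size
    return min_max_normalize(resize_nearest(img, size, size))
```

Libraries like Pillow or OpenCV would do the resizing (with better interpolation) in a real pipeline; the point here is just the order of operations: fixed size first, then consistent intensity range.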

After that first training round, the active learning loop kicks in. The model looks at the unlabeled scans, makes predictions, and sorts them by how confident it is. The high-confidence ones get pseudo-labels and are added to the training pool, while the most uncertain ones are set aside as candidates for expert review. The model then retrains on this expanded dataset. This cycle repeats, with the model improving as it learns from more and more initially unlabeled data that it has selected and labeled itself.
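Here’s a toy version of that loop, so you can see the moving parts. To keep it self-contained, the “model” is a simple nearest-centroid classifier on a single number per scan, and the loop is plain confidence-threshold self-training; OBoctNet uses a deep multi-label network and entropy-based filtering instead. The 0.9 threshold, the round limit, and all names are illustrative assumptions.

```python
import math
from typing import List, Tuple

Example = Tuple[float, int]  # (feature value, label)


def fit_centroids(data: List[Example]) -> Tuple[float, float]:
    """Toy stand-in for 'train the network': mean feature of each class.
    Assumes the labeled seed set contains both classes."""
    c0 = [x for x, y in data if y == 0]
    c1 = [x for x, y in data if y == 1]
    return sum(c0) / len(c0), sum(c1) / len(c1)


def predict_proba(model: Tuple[float, float], x: float) -> float:
    """P(class 1): softmax over negative distances to the two centroids."""
    m0, m1 = model
    z = max(-50.0, min(50.0, abs(x - m1) - abs(x - m0)))  # clamp to avoid overflow
    return 1.0 / (1.0 + math.exp(z))


def self_train(labeled: List[Example], unlabeled: List[float],
               threshold: float = 0.9, rounds: int = 5):
    labeled, pool = list(labeled), list(unlabeled)
    for _ in range(rounds):
        model = fit_centroids(labeled)           # (re)train on the current pool
        confident, uncertain = [], []
        for x in pool:
            p = predict_proba(model, x)
            if max(p, 1.0 - p) >= threshold:     # confident: pseudo-label it
                confident.append((x, int(p >= 0.5)))
            else:                                # ambiguous: expert-review candidate
                uncertain.append(x)
        if not confident:                        # nothing new to learn from
            break
        labeled.extend(confident)
        pool = uncertain
    return fit_centroids(labeled), labeled, pool
```

Starting from two labeled points, `self_train([(0.0, 0), (10.0, 1)], [1.0, 2.0, 9.0, 8.5, 5.0])` pseudo-labels the four clear-cut values in the first round and leaves `5.0`, which sits right between the classes, in the pool. In an active-learning setup, those leftovers are exactly the scans you’d hand to an ophthalmologist.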

The study carefully split the data to make sure the testing was fair, which matters here because the dataset includes scans from the same patients over time: if one patient’s scans landed in both the training and test sets, the model could look better than it really is. They used powerful GPUs (like the NVIDIA Tesla P100) and smart optimization techniques (like the AdamW optimizer and mixed precision training) to make the training process efficient, even though deep learning can be computationally intensive.
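One common way to avoid that leak is to split by patient rather than by scan. Here’s a minimal sketch, assuming each scan record carries a patient ID; the record format, the 20% test fraction, and the function name are hypothetical, not the study’s exact protocol.

```python
import random
from typing import List, Tuple


def split_by_patient(
    scans: List[Tuple[str, str]],  # (scan_id, patient_id)
    test_fraction: float = 0.2,
    seed: int = 0,
) -> Tuple[List[str], List[str]]:
    """Assign whole patients to train or test, so no patient's visits
    appear on both sides of the split."""
    patients = sorted({pid for _, pid in scans})  # sorted for reproducibility
    rng = random.Random(seed)
    rng.shuffle(patients)
    n_test = max(1, round(len(patients) * test_fraction))
    test_patients = set(patients[:n_test])
    train = [sid for sid, pid in scans if pid not in test_patients]
    test = [sid for sid, pid in scans if pid in test_patients]
    return train, test
```

If scikit-learn is available, `GroupShuffleSplit` from `sklearn.model_selection` does the same job when you pass patient IDs as the `groups` argument.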

Measuring Success: The Numbers Game

How do you know if this OBoctNet is actually *good*? You measure its performance using standard metrics. They looked at:

- Accuracy: What fraction of all predictions (both positive and negative) were correct.

- Precision: Out of all the times the model said a biomarker was present, how often was it right? (Important to avoid false alarms).

- Recall (Sensitivity): Out of all the times a biomarker was *actually* present, how often did the model catch it? (Important to avoid missing problems).

- F1 Score: The harmonic mean of Precision and Recall, so it stays low unless both are good; super useful when some biomarkers are rarer than others.

- AUC (Area Under the ROC Curve): How well the model separates scans with and without a biomarker across all decision thresholds (0.5 is a coin flip, 1.0 is perfect).

- AP (Average Precision): A single-number summary of the Precision-Recall curve, especially informative for imbalanced datasets.
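If you’d like to see how these metrics fall out of a model’s outputs, here’s a small pure-Python sketch for a single biomarker (for six biomarkers, you’d run it once per biomarker). The 0.5 decision threshold and the function names are assumptions; AUC is computed as the probability that a random positive scan outscores a random negative one, and AP as the mean precision at each true positive’s rank.

```python
from typing import Dict, List


def threshold_metrics(y_true: List[int], y_prob: List[float],
                      t: float = 0.5) -> Dict[str, float]:
    """Accuracy, precision, recall and F1 at a fixed decision threshold t."""
    y_pred = [int(p >= t) for p in y_prob]
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for y, p in pairs if y == 1 and p == 1)
    tn = sum(1 for y, p in pairs if y == 0 and p == 0)
    fp = sum(1 for y, p in pairs if y == 0 and p == 1)  # false alarms
    fn = sum(1 for y, p in pairs if y == 1 and p == 0)  # missed biomarkers
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(y_true), "precision": precision,
            "recall": recall, "f1": f1}


def roc_auc(y_true: List[int], y_prob: List[float]) -> float:
    """P(random positive scores above random negative); ties count half."""
    pos = [p for y, p in zip(y_true, y_prob) if y == 1]
    neg = [p for y, p in zip(y_true, y_prob) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def average_precision(y_true: List[int], y_prob: List[float]) -> float:
    """Mean of the precision values at the rank of each true positive."""
    ranked = sorted(zip(y_prob, y_true), key=lambda pair: -pair[0])
    hits, precisions = 0, []
    for k, (_, y) in enumerate(ranked, start=1):
        if y == 1:
            hits += 1
            precisions.append(hits / k)
    return sum(precisions) / len(precisions) if precisions else 0.0
```

For real evaluations, `sklearn.metrics` provides `precision_score`, `recall_score`, `f1_score`, `roc_auc_score`, and `average_precision_score`; the hand-rolled AP above matches scikit-learn’s step-wise definition when no two scores are tied.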

They compared OBoctNet against several other top-notch AI models (like different versions of ResNet and MobileViT) on the task of identifying *six* specific ophthalmic biomarkers.

The Results Are In!

And guess what? OBoctNet really shone!

* It consistently showed the **highest overall accuracy** compared to the other models tested.

* Looking at individual biomarkers, OBoctNet achieved the **highest F1 scores for five out of the six** biomarkers. We’re talking performance gains of several percentage points over models like ResNet-50 – that’s a significant jump in diagnostic capability!

* The study showed how the **active learning process improved performance over iterations**. As the model selected and incorporated more pseudo-labeled data, both accuracy and the F1 score steadily increased, showing that this active learning strategy pays off even with limited initial labels.

* The **ROC and PR curves** also looked great, especially for certain biomarkers, confirming OBoctNet’s strong ability to differentiate between cases with and without the biomarkers.

They also looked at computational efficiency. OBoctNet was faster at inference (making a prediction on a new scan) than some other models, which is important for real-time use in a clinic.

Peeking Inside the Black Box with GradCAM

To show off the Explainable AI part, they used GradCAM to generate heatmaps on sample OCT scans. These heatmaps visually highlight the specific regions in the scan that the OBoctNet model focused on when identifying different biomarkers. This is incredibly valuable for doctors – they can see if the AI is looking at the right anatomical structures or pathological signs, building confidence in the AI’s predictions and helping them confirm the diagnosis. It turns the “black box” into something a lot more transparent.

What Makes OBoctNet Tick? The Ablation Study

To really understand which parts of OBoctNet were doing the heavy lifting, they did an “ablation study.” This is like taking apart a machine to see what each piece does. They tested the model with different components removed:

- Without active learning

- Without the confidence-based filtering for pseudo-labeling

- Without GradCAM (though this doesn’t affect accuracy, only interpretability)

- Replacing the special CSPResNet50 backbone with a standard ResNet50

The results were clear: **Active learning and the CSPResNet50 backbone were the most critical components**. Removing active learning significantly dropped performance, proving its power in using unlabeled data. Swapping out the backbone also hurt accuracy, showing the CSPResNet50’s efficiency in feature extraction. The confidence filtering helped refine the pseudo-labels, contributing to better accuracy. GradCAM, as expected, didn’t change the accuracy but was essential for explaining the results.

Looking Ahead

Now, it’s not all sunshine and rainbows just yet. The study acknowledges some limitations. The quality of the pseudo-labels generated by the AI is super important – if the initial pseudo-labels are wrong, it can mess things up. Also, picking the perfect settings (hyperparameters) for the active learning and filtering needs careful tuning. And while OBoctNet is efficient, getting it ready for *real-time* use in every single clinic might need more optimization.

But honestly, the potential here is huge! This study doesn’t just show off a cool new model; it lays the groundwork for future AI in eye care. The next steps involve working even more closely with ophthalmologists to get super-detailed annotations (like drawing outlines around the biomarkers) to make the evaluation even more precise. They also want to integrate even more advanced Explainable AI techniques to make the AI’s decisions even easier to understand.

Imagine a future where AI like OBoctNet can quickly and accurately scan OCT images, flag potential biomarkers, and show the doctor exactly *why* it thinks they’re there, all while needing less initial labeled data. That would be a game-changer for diagnosing and managing eye diseases, helping doctors help more people see clearly.

Source: Springer