LLM4TDG: AI’s Next Leap in Smarter Software Testing

Hey there! Let me tell you about something pretty cool happening in the world of software development and testing. You know how we build software these days? It’s not usually about writing everything from scratch anymore. We’re constantly reusing components, grabbing handy bits of code and libraries that other folks have already built. It’s super efficient and saves a ton of time and money.

But here’s the rub: sometimes, we don’t *really* know what’s inside those reused pieces. And getting them to play nicely together, especially when it comes to testing, can be a real headache. These components aren’t always built to run on their own, which makes testing the whole system a challenge. This gap between complex software architectures and the need for accurate, automated testing is a big deal, and frankly, it’s leading to more security issues in the software supply chain. We’ve seen some scary stuff happen globally because of vulnerabilities in these dependency libraries. Attacks targeting these are low-cost and high-impact, spreading like wildfire.

Manual testing? It’s slow, expensive, and let’s be honest, prone to missing things. Automated methods are better, but they often lack the flexibility or accuracy needed for these complex, interconnected systems. Getting a program to do exactly what you want, especially in testing, has always been a tough nut to crack. Traditional program synthesis techniques have been around, but they often involve complex constraint solving that’s not exactly intuitive.

Then came the big language models, the LLMs! These AI powerhouses got really good at understanding and generating human language, and guess what? They started getting pretty decent at code too. Models like CODEX and CodeGen showed us the potential. But even with their code-generating chops, they still struggle with accuracy, especially when the requirements get tricky or involve specific constraints. That’s where the idea for **LLM4TDG** comes in.

Enter LLM4TDG: The Smart Testing Framework

So, what is **LLM4TDG**? It stands for “LLM for Test-Driven Generation.” It’s a fancy name for a framework that uses Large Language Models to generate tests, but with a twist. The core idea is to make LLMs much better at understanding the *rules* or *constraints* that a test needs to follow.

Imagine you’re writing a test. You have requirements, documentation, maybe some example code. These all imply rules about how the code should behave, what inputs are valid, what outputs to expect. LLMs are good at reading this stuff, but translating it into a concrete, runnable test that *strictly* follows all those rules? That’s harder.

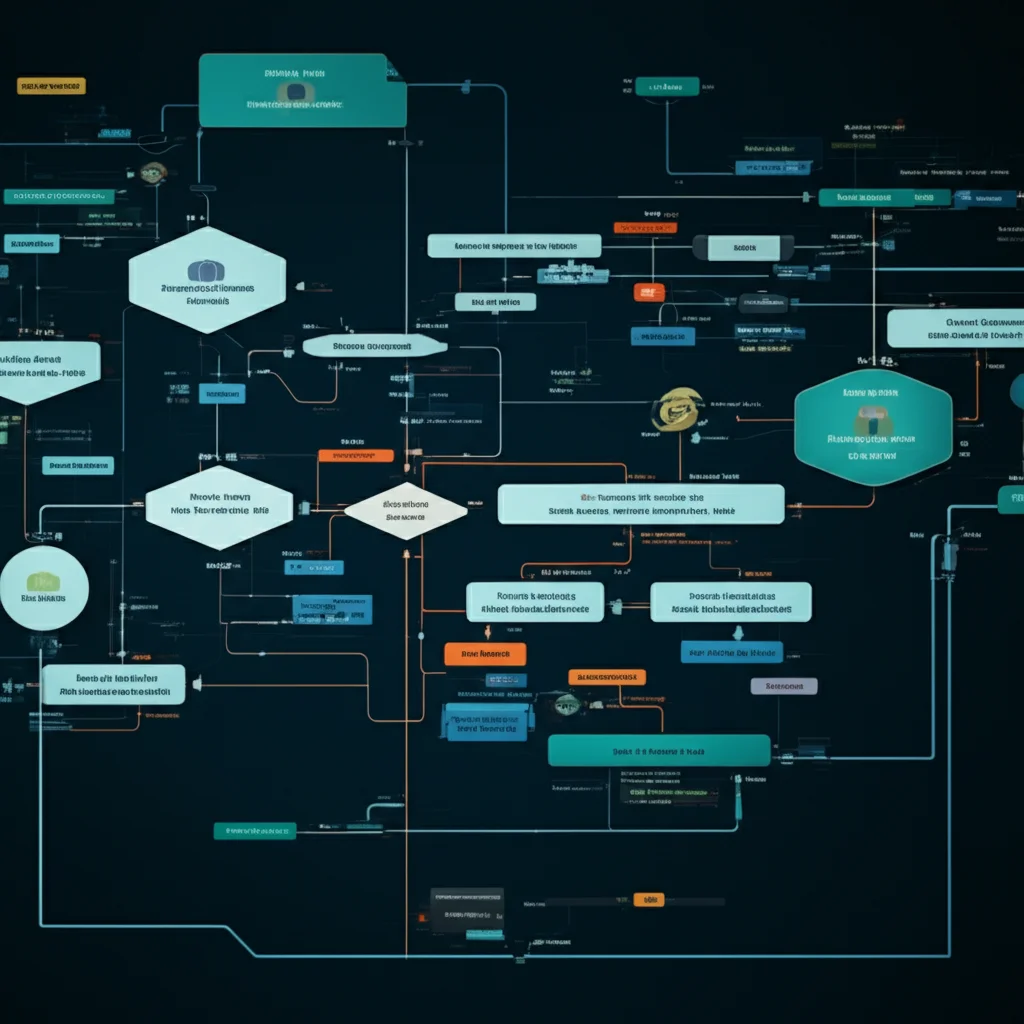

LLM4TDG tackles this by formally defining these rules as a **constraint dependency graph**. Think of it as a map showing how different requirements and pieces of information are connected and depend on each other. By converting this map into “context constraints,” the framework helps the LLM really *get* what the test needs to do.
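To make "turning the map into context constraints" a bit more concrete, here's a minimal sketch. It assumes each constraint is a plain-text rule tagged with one of the categories the framework uses (library, module, requirement, API, syntax error, semantic error). It also assumes "context" simply means listing the rules in dependency order, so every rule appears after the ones it relies on. The `Constraint` class, `to_context`, and `mylib.parser` are hypothetical names for illustration, not LLM4TDG's code. It uses `graphlib` (Python 3.9+).

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter
from typing import Dict, List


@dataclass
class Constraint:
    cid: str                      # unique id, e.g. "api.parse"
    category: str                 # e.g. "library", "module", "requirement", "API"
    text: str                     # natural-language rule shown to the LLM
    depends_on: List[str] = field(default_factory=list)  # ids this rule relies on


def to_context(constraints: List[Constraint]) -> str:
    """Render constraints as a numbered prompt block, prerequisites first."""
    by_id: Dict[str, Constraint] = {c.cid: c for c in constraints}
    for c in constraints:
        missing = [d for d in c.depends_on if d not in by_id]
        if missing:
            raise KeyError(f"{c.cid} depends on unknown constraint(s): {missing}")
    # TopologicalSorter expects {node: predecessors}; static_order() yields each
    # predecessor before any node that depends on it (CycleError on cycles).
    order = TopologicalSorter({c.cid: c.depends_on for c in constraints}).static_order()
    return "\n".join(
        f"{i}. [{by_id[cid].category}] {by_id[cid].text}"
        for i, cid in enumerate(order, start=1)
    )


if __name__ == "__main__":
    rules = [
        Constraint("req", "requirement", "Test that parse() rejects empty input", ["api"]),
        Constraint("api", "API", "parse(text: str) raises ValueError on bad input", ["mod"]),
        Constraint("mod", "module", "Import parse from mylib.parser"),
    ]
    print(to_context(rules))
```

Ordering matters here because the LLM reads the module rule before the API rule that uses it, and reads both before the requirement that ties them together.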

Then, it uses something called **constraint reasoning** and **backtracking**. This is like the LLM trying to solve a puzzle based on the constraint map. If it generates a test piece that doesn’t fit the rules, it can figure out *why* and try a different approach, iterating until it gets it right. This process automatically generates test drivers that satisfy all those defined constraints.

What I find really cool is that it doesn’t just stop there. It also uses feedback from *running* the test. If the test code has errors (syntax or semantic), the framework uses that feedback to go back, adjust the constraints, and try generating the code again. It’s an iterative process aimed at making the generated test code as correct and functional as possible.

How It Works, Under the Hood

Let’s dive a little deeper into how this magic happens. The **LLM4TDG** framework has three main parts:

- Fundamental Environment Analysis: Before you can run a test, you need the right environment. This module figures out what dependencies the code needs, what versions, and sets up a virtual, isolated environment (like using `virtualenv` in Python) so everything runs smoothly without messing up your main system. It even uses clever tricks like analyzing the code’s Abstract Syntax Tree (AST) to find import statements and figure out dependencies automatically, even if there’s no standard installation script.

- Test Case Writing: This is where the LLM does its thing. It takes all the inputs – test requirements, API documentation, function signatures, etc. – and, guided by the framework, extracts the syntactic and semantic constraints. It uses these constraints, along with a test case template, to generate the initial test code. The constraints are categorized (like library, module, requirement, API, syntax error, semantic error) to give the LLM clear guidance.

- Test-Driven Reconstruction: This is the iterative part. The generated test code is run. If it fails or hits errors, the runtime feedback (error messages, output) is captured. This feedback becomes *new* constraints. The framework uses the constraint dependency graph and a process similar to Conflict-Driven Clause Learning (CDCL), the conflict-analysis technique at the heart of modern SAT solvers, to analyze the errors, locate the problem spots in the test code, and guide the LLM to fix them. It keeps iterating, feeding back error information, until the test code is correct and satisfies the constraints. It’s like a smart debugging loop powered by AI and constraint reasoning.

The **constraint dependency graph** is key here. It maps out how variables and requirements are linked. For example, if variable `A`’s value depends on the result of a function call, and that function call has specific input constraints, the graph captures that. This helps the LLM understand the ripple effects of changes and ensure all related constraints are met. The framework formally defines different types of dependencies, like Data Dependency, Control Dependency, and Function Dependency, to build this graph.
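Here's a small sketch of those "ripple effects". It builds a graph whose edges are labeled with the three dependency kinds. It then finds every node reachable from a changed one, because those are the constraints that would need rechecking. The `Dep` enum, the `ConstraintGraph` class, and the node names are illustrative assumptions. They are not the paper's formal definitions.

```python
from collections import defaultdict, deque
from enum import Enum
from typing import Dict, List, Set, Tuple


class Dep(Enum):
    DATA = "data"          # dst uses a value produced by src
    CONTROL = "control"    # whether dst runs depends on a condition involving src
    FUNCTION = "function"  # dst is the result of calling src


class ConstraintGraph:
    def __init__(self) -> None:
        self.edges: Dict[str, List[Tuple[str, Dep]]] = defaultdict(list)

    def add(self, src: str, dst: str, kind: Dep) -> None:
        """Record that `dst` depends on `src`."""
        self.edges[src].append((dst, kind))

    def affected_by(self, changed: str) -> Set[str]:
        """Every node whose constraints must be rechecked after `changed` changes (BFS)."""
        seen: Set[str] = set()
        queue = deque([changed])
        while queue:
            node = queue.popleft()
            for nxt, _kind in self.edges.get(node, []):
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append(nxt)
        return seen


if __name__ == "__main__":
    g = ConstraintGraph()
    g.add("input_text", "parse()", Dep.DATA)    # the call's input constraint
    g.add("parse()", "A", Dep.FUNCTION)         # A = parse(input_text)
    g.add("A", "if A is None", Dep.CONTROL)     # a branch guarded by A
    print(sorted(g.affected_by("input_text")))  # ['A', 'if A is None', 'parse()']
```

The edge labels aren't needed for reachability itself. They're kept because they tell you *how* a change propagates, which is useful context to hand the LLM when it has to fix something.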

The constraint reasoning process, enhanced by LLMs, helps translate natural language requirements into formal constraints and then uses the graph to find solutions. When a conflict arises (a constraint is violated), the system can backtrack, learn from the conflict (like CDCL does), and try a different path to find a valid solution.
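CDCL itself works on Boolean clauses. Its central move, though, is turning each conflict into a learned rule that prunes the rest of the search, and that part can be shown with a tiny backtracking solver that records "nogoods". To be clear, this is a simplified stand-in (backtracking with nogood learning). It is not full CDCL, since it has no unit propagation or non-chronological backjumping, and it is not LLM4TDG's algorithm. The variables, domains, and rule below are hypothetical. Think of each predicate call as an expensive step, such as running a test.

```python
from typing import Callable, Dict, FrozenSet, List, Optional, Sequence, Tuple

Assignment = Dict[str, object]
Rule = Tuple[Tuple[str, ...], Callable[..., bool]]  # (scope variables, predicate on their values)
Nogood = FrozenSet[Tuple[str, object]]              # a combination known to violate a rule


class NogoodSolver:
    def __init__(self, domains: Dict[str, Sequence[object]], rules: List[Rule]) -> None:
        self.domains = domains
        self.rules = rules
        self.nogoods: List[Nogood] = []
        self.evaluations = 0  # predicate calls actually made (the "expensive" checks)

    def _conflicts(self, assignment: Assignment, var: str) -> bool:
        items = set(assignment.items())
        if any(ng <= items for ng in self.nogoods):
            return True  # a learned nogood already rules this out; skip re-checking
        for scope, pred in self.rules:
            if var in scope and all(v in assignment for v in scope):
                self.evaluations += 1
                if not pred(*(assignment[v] for v in scope)):
                    # Learn from the conflict: remember the exact clashing values.
                    self.nogoods.append(frozenset((v, assignment[v]) for v in scope))
                    return True
        return False

    def solve(self, order: Sequence[str], assignment: Optional[Assignment] = None) -> Optional[Assignment]:
        assignment = {} if assignment is None else assignment
        if len(assignment) == len(order):
            return dict(assignment)          # every variable assigned without conflict
        var = order[len(assignment)]
        for value in self.domains[var]:
            assignment[var] = value
            if not self._conflicts(assignment, var):
                found = self.solve(order, assignment)
                if found is not None:
                    return found
            del assignment[var]              # backtrack and try the next value
        return None


if __name__ == "__main__":
    solver = NogoodSolver(
        domains={"a": [1, 2], "b": [1, 2, 3], "c": [1, 2]},
        rules=[(("a", "c"), lambda a, c: a + c == 4)],
    )
    print(solver.solve(["a", "b", "c"]), solver.evaluations)  # {'a': 2, 'b': 1, 'c': 2} 4
```

In this example, plain backtracking would call the predicate 8 times, because it re-tests the same failing `(a, c)` pairs for every value of the unrelated variable `b`. With learned nogoods it needs only 4 calls.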

Putting It to the Test

Okay, so the idea sounds solid, but does it *actually* work better than just asking an LLM to write a test? The researchers put **LLM4TDG** through its paces using some serious benchmarks.

They used the **EvalPlus** dataset, which is known for being tough and thorough. It takes the standard HUMANEVAL benchmark (which has manually written tests) and cranks it up by automatically generating a *lot* more test cases (nearly 80 times more!) using mutation methods. This makes it much harder for an LLM to pass a test just by getting the simple cases right; it really has to understand the problem deeply.
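EvalPlus combines LLM-generated seed inputs with type-aware mutation. The sketch below illustrates only the mutation half, under simple assumptions. It starts from seed inputs, mutates them according to their type, and keeps the inputs on which a candidate solution disagrees with a reference implementation. The mutation rules and function names are illustrative. They are not EvalPlus's actual API.

```python
import random
from typing import Any, Callable, List


def mutate(value: Any, rng: random.Random) -> Any:
    """Type-aware mutation of one test input (ints, strings, lists)."""
    if isinstance(value, bool):            # bool is a subclass of int, so check it first
        return not value
    if isinstance(value, int):
        return rng.choice([value - 1, value + 1, -value, 0])
    if isinstance(value, str):
        if value and rng.random() < 0.5:
            i = rng.randrange(len(value))
            return value[:i] + value[i + 1:]                 # delete a character
        i = rng.randrange(len(value) + 1)
        return value[:i] + rng.choice("aZ0 ") + value[i:]    # insert a character
    if isinstance(value, list) and value:
        out = list(value)
        i = rng.randrange(len(out))
        op = rng.randrange(3)
        if op == 0:
            out[i] = mutate(out[i], rng)   # mutate one element
        elif op == 1:
            del out[i]                     # drop one element
        else:
            out.insert(i, out[i])          # duplicate one element
        return out
    return value                           # other types and empty lists left unchanged


def amplify(seeds: List[Any], n: int, seed: int = 0) -> List[Any]:
    """Grow a non-empty pool of seed inputs to size n by mutating existing ones."""
    rng = random.Random(seed)
    pool = list(seeds)
    while len(pool) < n:
        pool.append(mutate(rng.choice(pool), rng))
    return pool


def find_counterexamples(candidate: Callable[[Any], Any], reference: Callable[[Any], Any],
                         inputs: List[Any]) -> List[Any]:
    """Inputs where the candidate's output differs from the reference (or it crashes)."""
    bad = []
    for x in inputs:
        try:
            expected = reference(x)
        except Exception:
            continue                       # input invalid for the reference: skip it
        try:
            ok = candidate(x) == expected
        except Exception:
            ok = False
        if not ok:
            bad.append(x)
    return bad
```

For example, a buggy "sorted unique values" solution that forgets to deduplicate passes the seed `[3, 1, 2]`. However, the mutated inputs with repeated elements catch it, which is exactly the effect of having far more tests.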

They also tested it on a real-world scenario: detecting malicious behavior in Python dependency libraries from the **pypi_malregistry** dataset. These are libraries that have been intentionally injected with harmful code, sometimes using sneaky techniques to hide it. Since these aren’t executable on their own, you need a test driver to trigger the malicious behavior. This was a great way to see if **LLM4TDG** could generate tests effective in a practical, security-focused context.
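Once a driver can call into a library, you still need a way to *observe* what it does. One way to sketch that is with Python's runtime audit hooks (`sys.addaudithook`, Python 3.8+). This is our assumption about how the observation side might look. It is not how LLM4TDG or pypi_malregistry actually detect malicious behavior. The set of "suspicious" event names is an illustrative choice from CPython's audit-event table, and `drive` is a hypothetical helper. A hook only watches and does not block anything, so real malware analysis still belongs in a sandbox.

```python
import subprocess
import sys
from typing import Any, Callable, List, Tuple

# Illustrative choice of CPython audit events worth flagging.
SUSPICIOUS = {"subprocess.Popen", "os.system", "os.exec", "socket.connect"}

_events: List[Tuple[str, Tuple[Any, ...]]] = []
_recording = False


def _hook(event: str, args: Tuple[Any, ...]) -> None:
    # Audit hooks see every audited operation in the process; keep only flagged ones.
    if _recording and event in SUSPICIOUS:
        _events.append((event, args))


sys.addaudithook(_hook)  # note: audit hooks cannot be removed once installed


def drive(target: Callable[[], Any]) -> List[str]:
    """Run one entry point of the code under test and return the flagged event names."""
    global _recording
    _events.clear()
    _recording = True
    try:
        target()
    except Exception:
        pass  # a crash must not hide behavior that already happened
    finally:
        _recording = False
    return [name for name, _ in _events]


if __name__ == "__main__":
    def looks_harmless() -> None:
        # Stand-in for a tampered function that quietly spawns a process.
        subprocess.run([sys.executable, "-c", "pass"], check=True)

    print(drive(looks_harmless))          # a list containing 'subprocess.Popen'
    print(drive(lambda: sum(range(10))))  # []
```

The hard part, which is the part LLM4TDG addresses, is generating a `target` that actually reaches the hidden code path in the first place.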

To measure success, they looked at a few things:

- Constraint Reasoning Effectiveness: Did using the constraint graph actually help the LLM understand and satisfy the rules? They measured a “Constraint Gain Ratio” (CGR).

- Error Fixing Capability: How good was the framework at fixing syntax and semantic errors in the generated code through its iterative reconstruction process? They used metrics like Net Problem Solving Rate (NPR) and Iteration Efficiency Rate (IER).

- Functional Correctness: Did the generated tests actually *work* and correctly check the functionality of the code being tested? They used the improved `pass@k` metric, which is a standard way to evaluate code generation models.
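For reference, here's the standard unbiased pass@k estimator used in the Codex evaluation work. You generate n samples per task, count the c samples that pass, and estimate the probability that at least one of k randomly drawn samples passes, which equals 1 − C(n−c, k) / C(n, k). The article mentions an "improved" `pass@k` without giving details. Treat this as the baseline definition, not necessarily LLM4TDG's exact metric.

```python
from math import comb
from typing import Sequence, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Probability that at least one of k samples (drawn from n, c correct) passes."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # too few wrong samples to fill a size-k draw: it must contain a correct one
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: Sequence[Tuple[int, int]], k: int) -> float:
    """Benchmark score: average over tasks, each given as (n samples, c correct)."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)
```

With n = k = 1 (one sample per task), this reduces to the plain fraction of tasks solved on the first try.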

What the Results Show

And the results? Pretty darn exciting!

First off, on the constraint understanding front, **LLM4TDG** made a significant difference. For tasks where the constraints weren’t fully met without the framework, using the constraint reasoning boosted the satisfaction rate for 70 out of 147 tasks, giving a **Constraint Gain Ratio of 47.62%**. That’s a huge jump in the LLM’s ability to grasp the specific rules of a test! Overall, the number of constraints passed across all tasks was significantly higher with the framework.
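Reading the CGR as the share of not-fully-satisfied tasks that the framework improved (our interpretation of the figures above), the arithmetic checks out:

$$
\mathrm{CGR} = \frac{\text{tasks with improved constraint satisfaction}}{\text{tasks not fully satisfied without the framework}} = \frac{70}{147} = \frac{10}{21} \approx 0.4762 = 47.62\%
$$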

When it came to fixing errors, the framework also showed its strength. The number of test programs that needed fixing was much lower when constraint reasoning was used, meaning the initial code generated was better. And the error-fixing capability itself, measured by the EFC (Error Fixing Capability) score, was quite high, with models like GPT-4 achieving over 87%. Interestingly, general-purpose LLMs sometimes outperformed code-specific ones in fixing these errors, suggesting that understanding the *context* of errors is crucial.

But perhaps the most compelling result is the improvement in **functional correctness**, measured by `pass@k`. Using **LLM4TDG** significantly improved the average pass rate of *all* the tested LLMs by **10.41%**. One model, CodeQwen-chat, saw its pass rate jump by a whopping **18.66%**. Even more impressively, the framework helped models surpass the state-of-the-art GPT-4’s performance on the challenging HUMANEVAL and HUMANEVAL+ benchmarks, reaching **92.16%** and **87.14%** respectively. This means the tests generated were much more accurate and reliable.

They also validated the framework in the real-world security testing scenario. By generating tests for potentially malicious Python libraries, **LLM4TDG** was able to create executable tests that correctly triggered and detected the malicious behavior, something that was much harder or impossible without the constraint reasoning. This shows the framework’s potential for practical applications beyond just standard functional testing.

Of course, they also looked at the cost. Adding the dynamic feedback and iterative reconstruction means the process takes longer and uses more computational resources (measured by tokens and time) compared to just generating code once. But the trade-off is significantly higher accuracy and reliability.

The Nitty-Gritty: Limitations and What’s Next

Now, it’s not perfect yet. As the researchers point out, constraint reasoning with LLMs is still pretty new. The results can depend on the specific LLM used, the quality of the data it was trained on, and how complex the test requirements are. Sometimes, if the requirements are super complicated, the LLM might still struggle to build an effective constraint graph. Also, as I mentioned, the iterative fixing process adds time.

But the potential? Huge! The folks behind **LLM4TDG** see this going beyond just automated testing. They think it could be used for requirement-driven code generation (writing code directly from descriptions), automated code repair, and even in low-code development platforms. Imagine an AI that can not only understand what you want but also generate the code *and* the tests for it, automatically fixing issues along the way. They’re even thinking about integrating it with visual design artifacts (such as UI designs or UML diagrams) to make the whole software development process more automated and efficient from design to testing.

Wrapping Up

In a nutshell, **LLM4TDG** is a really promising step towards making software testing smarter, more accurate, and more automated, especially in today’s world of complex, reused components. By teaching LLMs to understand and reason about constraints, it significantly boosts their ability to generate reliable test code and even helps find hidden issues like malicious behavior. It’s a great example of how we can leverage the power of AI to tackle some of the trickiest challenges in software development, reducing manual effort and improving quality and security. Definitely something to keep an eye on!

Source: Springer