Saving History, One Brick at a Time: How AI and Drones Are Protecting the Great Wall

Guarding Giants: The Challenge of Ancient Walls

Hey there! So, imagine trying to keep an eye on something absolutely massive, something that stretches for thousands upon thousands of miles across mountains, deserts, and all sorts of wild places. That’s pretty much the daily challenge when you’re looking after ancient city walls, especially something as epic as the Great Wall of China. It’s not just a wall, you know? It’s a symbol, a piece of history that blows your mind with its sheer scale and the effort that went into building it.

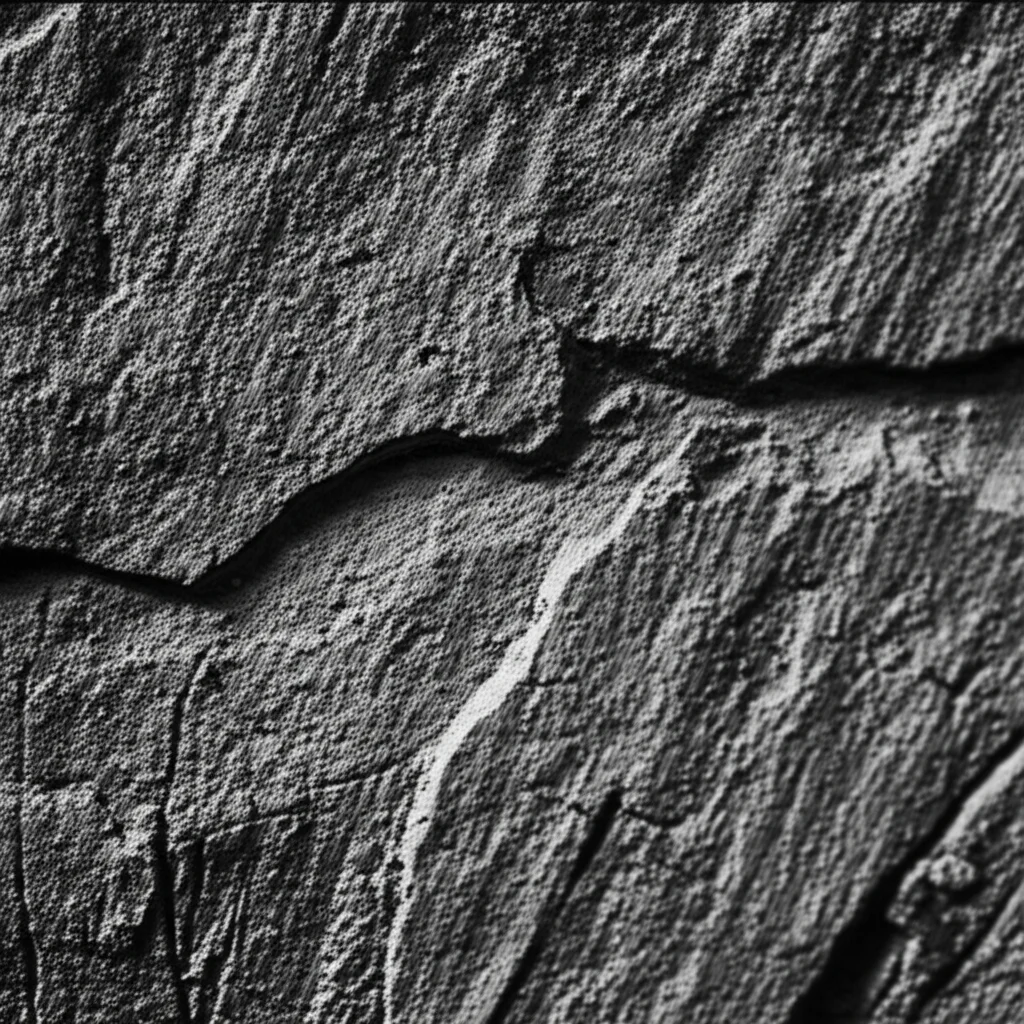

But here’s the thing: time, weather, and even just people being around take their toll. These incredible structures, built centuries ago, are constantly battling the elements. We’re talking about bricks falling out, walls crumbling, and defensive forts losing their structural integrity. And because the Great Wall is *so* big and often in really remote, hard-to-get-to spots, checking up on it the old-fashioned way – with people walking along and looking – is practically impossible. It’s slow, it costs a fortune, and frankly, it can be pretty risky. Think about trying to spot a missing brick on a fort perched on a steep mountainside!

The traditional way of doing things, basically a manual visual check, just doesn’t cut it for daily monitoring and maintenance on this kind of scale. You end up with delayed interventions, and before you know it, small problems become big ones. We desperately needed a faster, more efficient way to find and pinpoint exactly where the damage is happening.

Enter the Machines: A High-Tech Solution

This is where modern technology swoops in to save the day! We’re talking about the cool stuff like computer vision and unmanned aerial vehicles (UAVs), or as you probably know them, drones. These tools have gotten seriously good lately, and they’re starting to make a real difference in fields like civil engineering and even manufacturing.

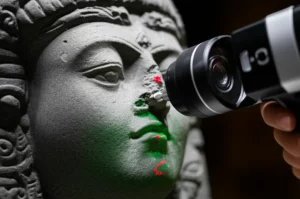

Why drones? Well, they can cover huge areas quickly and capture very high-resolution images, even in those tricky, inaccessible spots. And why computer vision? Because it can analyze those images quickly and consistently, without the fatigue that creeps in when a person scans photos for hours. It’s flexible, it can work in real time, and it’s highly efficient. So, the idea was: let’s use drones to get the big picture and then use smart computer vision algorithms to automatically find and locate the damage on these ancient walls.

Our study dives into this headfirst, proposing a two-phase method to tackle this exact problem – specifically focusing on the dreaded “brick missing” damage that’s weakening many of the Great Wall’s defensive forts.

Phase One: Spotting Trouble from Above

The first phase is all about detection. We need to find *where* the damage is. For this, we turned to object detection, which is basically teaching a computer to spot specific things (like missing bricks) in an image and draw a box around them. Traditional methods, which relied on hand-crafted features, were a bit clunky, but deep learning, especially convolutional neural networks (CNNs), has changed the game.

We chose a CNN-based detector called YOLO (You Only Look Once). The name kind of says it all: it’s designed to be fast, predicting all the boxes in an image in a single pass through the network. This speed is crucial for monitoring something as vast as the Great Wall. We picked YOLOv5n, the smallest and lightest version (“n” for nano), because speed was a priority for daily checks.

But here’s the challenge: drone images of the Great Wall often show the damage as tiny spots against a huge, complex background (trees, rocks, trails, you name it). The standard YOLOv5n isn’t always the best at picking out these small targets accurately. So, we got clever. We made some improvements to the network.

We added something called an “attention mechanism” (specifically, SEAttention, short for Squeeze-and-Excitation). Think of it like giving the AI a pair of glasses that helps it focus on the important parts of the image (the potential damage areas) rather than getting distracted by all the background noise. Concretely, it summarizes each feature channel into a single number, then learns a weight for each channel, boosting the channels that carry damage features and damping the ones that mostly respond to background.
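To make the squeeze, excite and scale steps concrete, here is a minimal plain-Python sketch of a Squeeze-and-Excitation block acting on a single feature map stored as nested lists `[channel][row][col]`. The weights are hand-picked for illustration; in a real network they are learned, and this sketch says nothing about where in YOLOv5n the block was inserted.

```python
import math

def se_attention(feature_map, w1, b1, w2, b2):
    """Squeeze-and-Excitation on one feature map shaped [channels][height][width].

    w1/b1: reduction layer (C -> C/r); w2/b2: expansion layer (C/r -> C).
    """
    # Squeeze: global average pooling turns each channel into one number.
    squeezed = [sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
                for channel in feature_map]
    # Excitation, part 1: fully connected reduction followed by ReLU.
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed)) + b)
              for row, b in zip(w1, b1)]
    # Excitation, part 2: fully connected expansion followed by sigmoid,
    # giving one weight in (0, 1) per channel.
    scales = [1.0 / (1.0 + math.exp(-(sum(w * h for w, h in zip(row, hidden)) + b)))
              for row, b in zip(w2, b2)]
    # Scale: multiply every value in each channel by that channel's weight.
    out = [[[v * s for v in row] for row in channel]
           for channel, s in zip(feature_map, scales)]
    return out, scales

# Hypothetical 2-channel, 2x2 feature map and hand-picked weights.
fmap = [[[0.8, 1.2], [1.0, 1.0]],
        [[0.5, 0.5], [0.5, 0.5]]]
out, scales = se_attention(fmap, w1=[[1.0, 1.0]], b1=[0.0],
                           w2=[[2.0], [-2.0]], b2=[0.0, 0.0])
print(scales)
```

With these made-up weights, the first channel ends up scaled by about 0.95 and the second by about 0.05, which is the “pay attention to this channel, ignore that one” behavior described above.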

We also used a technique called “knowledge distillation.” This is pretty cool: we took a larger, more accurate version of YOLOv5 (YOLOv5s) and had it “teach” our smaller, faster YOLOv5n model. It’s like a master passing on its wisdom to an apprentice. During training, the small model learns from the teacher’s predictions as well as from the labeled data, which lets it pick up richer features and brings its accuracy closer to that of the bigger models, all while keeping its speed.
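The article doesn’t spell out which distillation loss was used, so the sketch below shows the classic soft-target formulation popularized by Hinton and colleagues, applied to a single classification output. The temperature and `alpha` values are illustrative assumptions; detection-focused distillation typically applies a term like this to class or objectness predictions, and sometimes to intermediate features as well.

```python
import math

def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q):
    # KL(p || q) = sum p * log(p / q); terms with p == 0 contribute nothing.
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def distillation_loss(student_logits, teacher_logits, hard_label_loss,
                      temperature=4.0, alpha=0.5):
    """Blend the usual supervised loss with a soft-target loss from the teacher."""
    teacher_soft = softmax(teacher_logits, temperature)
    student_soft = softmax(student_logits, temperature)
    # Multiplying by T^2 keeps the soft-target gradients on a comparable
    # scale as the temperature changes.
    soft_loss = kl_divergence(teacher_soft, student_soft) * temperature ** 2
    return alpha * soft_loss + (1 - alpha) * hard_label_loss

teacher = [2.0, 0.5, -1.0]  # hypothetical teacher logits
same = distillation_loss(teacher, teacher, hard_label_loss=0.0)
different = distillation_loss([-1.0, 0.5, 2.0], teacher, hard_label_loss=0.0)
print(same, different)
```

Identical student and teacher outputs give a soft loss of zero; the further the student’s softened distribution drifts from the teacher’s, the larger the penalty.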

To train this improved AI, we had to build a dataset. This involved flying drones over sections of the Great Wall, capturing high-resolution images of defensive forts, and then manually marking all the areas with missing bricks. We ended up with 1,197 images covering 246 forts, all focused on this type of damage as seen from a drone. This dataset is a big deal because most existing ones focus on surface damage seen up close, not large-scale structural issues visible from above against complex backgrounds.

Phase Two: Pinpointing the Damage Exactly

Finding the damage is great, but for preservation work, you need to know *exactly* where it is and what its shape is. A simple box isn’t enough. That’s where phase two, localization, comes in. This phase uses image processing techniques to get precise details about the damage edges.

Once our Improved-YOLOv5n spots a damaged area and gives us a bounding box, we take that cropped image and put it through a series of steps. A key part is image segmentation – separating the damaged area from the rest of the wall and the background. Standard methods struggle with the complex backgrounds in our drone images.

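So, we used Otsu’s method, a classic way to pick grayscale thresholds automatically, in its multi-threshold form, and gave it a boost with a genetic algorithm. Using several thresholds instead of one lets the algorithm split the image into more than two classes, which makes it much better at separating the missing-brick areas from the surrounding wall and background, even when things look messy. The catch is that checking every possible combination of thresholds gets expensive fast as you add more of them, so the genetic algorithm searches for the best set far more efficiently than brute force.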
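Here is a minimal sketch of the idea in plain Python. It scores a set of thresholds with Otsu’s between-class variance and lets a simple genetic algorithm (tournament selection, uniform crossover, random nudges as mutation, and elitism) search for the best set. Two thresholds (three classes), the population size, and every other GA setting are illustrative assumptions, not the study’s configuration, and the histogram is synthetic.

```python
import random

def between_class_variance(hist, thresholds):
    """Otsu's multi-level criterion: sum over classes of w_k * (mu_k - mu_total)^2."""
    total = sum(hist)
    mu_total = sum(i * h for i, h in enumerate(hist)) / total
    # Class k covers gray levels in [bounds[k], bounds[k + 1]).
    bounds = [0] + sorted(thresholds) + [len(hist)]
    variance = 0.0
    for lo, hi in zip(bounds, bounds[1:]):
        weight = sum(hist[lo:hi])
        if weight == 0:
            continue  # an empty class (e.g. duplicate thresholds) adds nothing
        mean = sum(i * hist[i] for i in range(lo, hi)) / weight
        variance += (weight / total) * (mean - mu_total) ** 2
    return variance

def ga_multi_otsu(hist, n_thresholds=2, pop_size=40, generations=60,
                  mutation_rate=0.2, seed=0):
    rng = random.Random(seed)
    levels = len(hist)
    cache = {}

    def fitness(individual):
        key = tuple(individual)
        if key not in cache:
            cache[key] = between_class_variance(hist, individual)
        return cache[key]

    def tournament(population):
        a, b = rng.sample(population, 2)
        return a if fitness(a) >= fitness(b) else b

    population = [sorted(rng.sample(range(1, levels), n_thresholds))
                  for _ in range(pop_size)]
    for _ in range(generations):
        next_gen = [max(population, key=fitness)]  # elitism: keep the best as-is
        while len(next_gen) < pop_size:
            p1, p2 = tournament(population), tournament(population)
            # Uniform crossover: each threshold comes from one parent or the other.
            child = [g1 if rng.random() < 0.5 else g2 for g1, g2 in zip(p1, p2)]
            # Mutation: nudge a threshold by up to 10 gray levels, kept in range.
            child = [min(levels - 1, max(1, g + rng.randint(-10, 10)))
                     if rng.random() < mutation_rate else g for g in child]
            next_gen.append(sorted(child))
        population = next_gen
    best = max(population, key=fitness)
    return best, fitness(best)

# Synthetic 256-bin histogram with three narrow clusters of gray levels.
hist = [0] * 256
for center in (40, 128, 210):
    for d in range(-5, 6):
        hist[center + d] += 100 - 10 * abs(d)
thresholds, score = ga_multi_otsu(hist)
print(thresholds, score)
```

Any pair of thresholds that falls in the gaps between the three synthetic clusters scores the same maximal variance, so the search only has to land somewhere in those gaps. For comparison, scikit-image ships a non-GA implementation of multi-level Otsu as `skimage.filters.threshold_multiotsu`.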

After segmentation, we clean up the image using morphological operations (like ‘opening’ and ‘closing’) to get rid of noise and fill in gaps, resulting in a clear boundary. Then, we use an edge detection method (like the Canny operator) to find the exact coordinates of that boundary.
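A toy version of this clean-up step on a small binary mask, in plain Python with a 3×3 square structuring element (an assumption; the kernel size isn’t given). The last function simply collects foreground pixels that touch the background. It’s a simpler stand-in for the Canny operator, not Canny itself, which adds Gaussian smoothing, gradient computation, non-maximum suppression and hysteresis thresholding.

```python
def _neighbourhood(mask, y, x):
    """3x3 neighbourhood values around (y, x); pixels outside the image count as background."""
    h, w = len(mask), len(mask[0])
    return [mask[y + dy][x + dx] if 0 <= y + dy < h and 0 <= x + dx < w else 0
            for dy in (-1, 0, 1) for dx in (-1, 0, 1)]

def erode(mask):
    # A pixel survives only if its whole 3x3 neighbourhood is foreground.
    return [[int(all(_neighbourhood(mask, y, x))) for x in range(len(mask[0]))]
            for y in range(len(mask))]

def dilate(mask):
    # A pixel turns on if any pixel in its 3x3 neighbourhood is foreground.
    return [[int(any(_neighbourhood(mask, y, x))) for x in range(len(mask[0]))]
            for y in range(len(mask))]

def opening(mask):
    return dilate(erode(mask))  # erosion then dilation: removes small specks

def closing(mask):
    return erode(dilate(mask))  # dilation then erosion: fills small holes and gaps

def boundary_pixels(mask):
    """Foreground pixels with at least one 4-connected neighbour in the background."""
    h, w = len(mask), len(mask[0])
    edge = []
    for y in range(h):
        for x in range(w):
            if not mask[y][x]:
                continue
            for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ny, nx = y + dy, x + dx
                if not (0 <= ny < h and 0 <= nx < w) or not mask[ny][nx]:
                    edge.append((y, x))
                    break
    return edge

# Hypothetical segmentation output: an 8x8 blob with a pinhole, plus one noise pixel.
mask = [[0] * 12 for _ in range(12)]
for y in range(2, 10):
    for x in range(2, 10):
        mask[y][x] = 1
mask[5][5] = 0    # pinhole inside the blob
mask[0][11] = 1   # isolated noise pixel
clean = closing(opening(mask))
edge = boundary_pixels(clean)
print(len(edge))
```

Opening first removes the isolated noise pixel without touching the blob, and closing then fills the pinhole, leaving one solid region whose outline is the 28 pixels around the edge of the 8×8 square.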

Drone images often have perspective distortion because the camera is looking down at an angle. To get the true shape and location of the damage on the wall’s plane, we use perspective transformation. We identify at least four known points on the wall (like the corners of the fort) and use them to calculate a transformation matrix. This matrix allows us to correct the distortion and map the detected damage edges onto a flat, true-to-scale representation of the wall surface.
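The correction step boils down to solving for a 3×3 homography from four point pairs (the standard direct linear transform, with the bottom-right entry fixed to 1), then dividing by the third homogeneous coordinate when mapping points. Below is a dependency-free sketch; the pixel coordinates and the 10 m × 6 m fort face are made-up values. OpenCV’s `cv2.getPerspectiveTransform` computes the same matrix from four point pairs, and `cv2.perspectiveTransform` applies it to arrays of points.

```python
def solve_linear(a, b):
    """Gaussian elimination with partial pivoting for a square system a x = b."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def homography_from_points(src, dst):
    """3x3 matrix H with H @ (x, y, 1) proportional to (u, v, 1) for four point pairs."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1, 0, 0, 0, -x * u, -y * u]); b.append(u)
        a.append([0, 0, 0, x, y, 1, -x * v, -y * v]); b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]

def apply_homography(hm, point):
    x, y = point
    w = hm[2][0] * x + hm[2][1] * y + hm[2][2]  # homogeneous scale factor
    return ((hm[0][0] * x + hm[0][1] * y + hm[0][2]) / w,
            (hm[1][0] * x + hm[1][1] * y + hm[1][2]) / w)

# Hypothetical pixel positions of a fort's corners (top-left, top-right,
# bottom-right, bottom-left) and the matching wall-plane corners in metres.
image_corners = [(120, 80), (520, 110), (500, 400), (140, 380)]
wall_corners = [(0, 0), (10, 0), (10, 6), (0, 6)]
H = homography_from_points(image_corners, wall_corners)
print(apply_homography(H, (320, 240)))  # a damage-edge pixel mapped to wall metres
```

Every point on a detected damage edge can be pushed through `apply_homography` the same way, which is what turns a slanted drone view into a flat, measurable outline.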

Because a single drone image might only show part of a large damaged area, we often need to combine information from multiple images. We developed an edge curve aggregation method that takes partial damage edges from different views and stitches them together to get the complete outline of the missing brick area on the wall plane. The goal is to get the largest possible area of damage outlined accurately.
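The article doesn’t detail how the edge curve aggregation works, so the sketch below swaps in a simpler idea that illustrates the goal. Once each view’s partial outline has been perspective-corrected into the same wall-plane coordinates, rasterize every outline onto a shared grid and take the union, which yields the largest combined damaged area. The polygons, wall size and 0.1 m cell size are hypothetical.

```python
def point_in_polygon(px, py, polygon):
    """Ray casting: count edge crossings of a ray running to the right of (px, py)."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > py) != (y2 > py):  # the edge straddles the ray's height
            x_cross = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
            if px < x_cross:
                inside = not inside
    return inside

def aggregate_outlines(outlines, width, height, cell=0.1):
    """Union of outlines (polygons in wall-plane metres) on a raster grid.

    Returns the set of covered (row, col) cells and the covered area in m^2.
    """
    cols, rows = round(width / cell), round(height / cell)
    covered = set()
    for polygon in outlines:
        for r in range(rows):
            for c in range(cols):
                # Test the centre of each grid cell against the polygon.
                if point_in_polygon((c + 0.5) * cell, (r + 0.5) * cell, polygon):
                    covered.add((r, c))
    return covered, len(covered) * cell * cell

view_a = [(1, 1), (3, 1), (3, 2), (1, 2)]  # hypothetical partial outline, photo A
view_b = [(2, 1), (4, 1), (4, 2), (2, 2)]  # overlapping outline, photo B
cells, area = aggregate_outlines([view_a, view_b], width=10, height=6)
print(round(area, 2))  # 3.0: 2 m^2 + 2 m^2 minus the 1 m^2 overlap
```

The union’s boundary cells could then be traced (for example with the boundary-pixel idea from the clean-up step) to recover a single combined outline.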

Putting it to the Test: The Results Are In!

Okay, so how well did all this fancy tech actually work? We trained our Improved-YOLOv5n model and tested it on a separate set of images it hadn’t seen before. We measured its performance using standard metrics: Precision, Recall, and mean Average Precision (mAP@0.5). Precision tells us what fraction of the detections were real damage. Recall tells us what fraction of the real damage the algorithm managed to find. mAP@0.5 summarizes both in one number: it is the area under the precision-recall curve, counting a detection as correct when its box overlaps a true damage box with an intersection over union (IoU) of at least 0.5, averaged over all object classes (here there is just one, missing bricks).
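For a single class like missing bricks, mAP@0.5 is simply AP at an IoU threshold of 0.5. The sketch below follows the usual recipe: sort detections by confidence, greedily match each one to an unmatched ground-truth box with IoU ≥ 0.5, then integrate precision over recall using all-point interpolation. Evaluation toolkits differ in details such as the interpolation scheme, so treat this as illustrative; the boxes are made up.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def average_precision(detections, ground_truth, iou_threshold=0.5):
    """AP for one class.

    detections: list of (image_id, confidence, box);
    ground_truth: dict mapping image_id -> list of boxes.
    """
    total_gt = sum(len(boxes) for boxes in ground_truth.values())
    if total_gt == 0:
        return 0.0
    matched = {img: [False] * len(boxes) for img, boxes in ground_truth.items()}
    tp_flags = []
    for img, _, box in sorted(detections, key=lambda d: d[1], reverse=True):
        best_iou, best_idx = 0.0, -1
        for i, gt in enumerate(ground_truth.get(img, [])):
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_idx = overlap, i
        if best_iou >= iou_threshold and not matched[img][best_idx]:
            matched[img][best_idx] = True
            tp_flags.append(1)  # true positive
        else:
            tp_flags.append(0)  # false positive: no match, or box already claimed
    precisions, recalls, tp = [], [], 0
    for k, flag in enumerate(tp_flags, start=1):
        tp += flag
        precisions.append(tp / k)
        recalls.append(tp / total_gt)
    # All-point interpolation: make precision non-increasing from right to left.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Area under the stepwise precision-recall curve.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap

ground_truth = {"img1": [(0, 0, 10, 10)], "img2": [(20, 20, 30, 30)]}
detections = [("img1", 0.9, (0, 0, 10, 10)),    # correct
              ("img1", 0.8, (50, 50, 60, 60)),  # false alarm
              ("img2", 0.7, (21, 21, 31, 31))]  # slightly offset, IoU about 0.68
print(average_precision(detections, ground_truth))  # 0.8333... (5/6)
```

Here the false alarm at 0.8 confidence drags precision down before the second real box is found, which is why AP lands at 5/6 rather than 1.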

Our Improved-YOLOv5n achieved a mAP@0.5 of 74.5%. That’s pretty solid! More importantly, it kept a very fast detection speed of 768.1 frames per second (FPS); like any FPS figure, that number depends on the hardware it was measured on. This speed is key for practical, large-scale monitoring.
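For a sense of scale, here is the back-of-the-envelope arithmetic, assuming the 768.1 FPS rate holds steady and counting only the detection network (image loading and the phase-two localization would add to this). One image takes about 1.3 ms, and the full set of 1,197 images would take under two seconds:

```latex
t_{\text{per image}} = \frac{1}{768.1\ \text{s}^{-1}} \approx 1.30\ \text{ms},
\qquad
t_{1197\ \text{images}} = \frac{1197}{768.1\ \text{s}^{-1}} \approx 1.56\ \text{s}
```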

We compared our improved model to other popular object detection algorithms like Faster R-CNN, standard YOLOv5 versions (s, x, n), and even YOLOv8s. Our Improved-YOLOv5n showed better overall performance, combining good accuracy with that crucial speed advantage. It did a great job spotting brick missing damage on test forts, even with varying levels of damage and those tricky complex backgrounds.

Of course, it wasn’t perfect. We saw some instances of omissions (missing some damage) and misdetections (mistaking something else, like collapsed debris, for missing bricks). This is often because the damage edges are really irregular, making the initial rectangular bounding box tricky to get just right, or because severely damaged areas can look similar to missing parts.

The localization phase also proved effective. We took examples of detected damage and ran them through our segmentation and localization process. It successfully outlined the irregular edges of the missing bricks on the wall’s plane. This precise location data is incredibly valuable because it can be fed directly into modeling software. Since the Great Wall forts often have a relatively simple, rectangular layout, knowing the exact shape and location of the missing parts allows us to build accurate 3D models of the damaged structure. This helps engineers understand the extent of the problem and plan repairs much more effectively.

Looking Ahead and Beyond the Wall

So, what’s the takeaway? We’ve shown that a computer vision-based approach using drones can provide a time-efficient, high-accuracy, and non-destructive way to assess the structural safety of ancient city walls like the Great Wall. It automates a task that was previously incredibly challenging.

There are definitely areas for improvement, though. Our dataset, while unique, could be larger and more diverse to improve the algorithm’s ability to generalize to different sections and types of damage. We need to work on reducing those omissions and misdetections, perhaps with even smarter algorithms or better ways to define the damage area.

Future work will involve collecting more data, especially from less-represented areas, and exploring more advanced AI techniques. We’re also thinking about incorporating 3D data from tools like LiDAR (which uses lasers to measure distances) to get a full 3D picture of the damage, not just a 2D outline.

But here’s the really exciting part: this technology isn’t limited to the Great Wall. The core methods – using drones for imaging, AI for detection, and image processing for localization – can be adapted to protect *other* types of architectural heritage. Imagine using this to find cracks in old timber buildings or assess damage on historical masonry structures elsewhere in the world. With enough data and some tweaking, this approach could be a game-changer for preserving historical sites globally.

Ultimately, it feels pretty good to know that we’re using cutting-edge technology to help protect these incredible links to the past. It’s a blend of history and high-tech, working together to keep these giants standing for generations to come.

Source: Springer