Hey, Let’s Talk 3D! My Adventures with Geometrically-Savvy Transformers for Point Clouds

Alright, gather ’round, folks! Ever looked at those cool 3D scans or how self-driving cars “see” the world and wondered what’s going on under the hood? A lot of it boils down to something called point clouds. Imagine a bazillion tiny dots floating in space, each one marking a spot on an object’s surface. That’s a point cloud! They’re super useful for everything from autonomous driving and robotics to augmented reality and even checking out ancient artifacts. I’ve been diving deep into making sense of these 3D marvels, and let me tell you, it’s been quite a ride!

The Fuzzy, Dotty World of Point Clouds

Point clouds are amazing because they give us a real 3D picture of the world, capturing all those nitty-gritty geometric details and how objects relate to each other in space. This is gold for understanding a scene. But (and it’s a big but!) they’re not the easiest things to work with. For starters, the points are unordered – there’s no “first” or “last” point. They can be pretty sparse, with big gaps, and often come with a fair bit of noise. Think of it like trying to assemble a puzzle where the pieces are just a cloud of dots, some missing, and some extra ones thrown in for good measure!

Traditional computer vision tools, like Convolutional Neural Networks (CNNs) that are champs with 2D images, kind of scratch their heads when they see point clouds. CNNs love neat, grid-like data, and point clouds are anything but. So, figuring out how to pull meaningful features out of this beautiful mess, deal with varying point densities, and handle massive clouds of points without our computers throwing a fit – these are the big challenges we’re tackling.

How We’ve Tried to Tame the Dots So Far

Researchers, myself included, have come up with some clever ways to handle point clouds using deep learning. Broadly, you can think of them in a few categories:

- Voxel-based methods: These try to turn the point cloud into a regular 3D grid (like 3D pixels, or “voxels”) and then use 3D convolutions. Think VoxelNet. The downside? You can lose some of those fine details, and it can get computationally heavy.

- Multi-view methods: These take 2D snapshots of the point cloud from different angles and then use good old 2D CNNs. MVCNN is an example. Smart, but sometimes they miss the full 3D picture.

- Point-based methods: These are the brave ones that tackle the raw point cloud data directly. This is where things get really interesting! PointNet was a game-changer here, directly processing points and using clever tricks to handle the unordered nature. Then came PointNet++, which got better at learning local features. DGCNN used dynamic graphs to capture local geometry. These were all awesome steps!
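To make the "unordered" trick a bit more concrete, here is a minimal NumPy sketch of the idea usually credited to PointNet: apply the same small function to every point, then pool with a symmetric operation such as max. The function name `global_feature` and the random weights are purely illustrative assumptions, not anyone's actual model.

```python
import numpy as np

def global_feature(points, weight):
    """Shared per-point linear map + ReLU, then max over all points.

    The max is a symmetric function, so the result cannot depend on
    the order in which the points are listed.
    """
    per_point = np.maximum(points @ weight, 0.0)  # (N, C)
    return per_point.max(axis=0)                  # (C,)

rng = np.random.default_rng(0)
points = rng.normal(size=(1024, 3))   # toy cloud, not real scan data
weight = rng.normal(size=(3, 16))     # illustrative random weights

shuffled = points[rng.permutation(len(points))]
order_invariant = np.allclose(
    global_feature(points, weight), global_feature(shuffled, weight)
)
```

Shuffling the rows leaves the pooled feature unchanged, which is exactly the property you want when there is no "first" or "last" point.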

More recently, some really powerful models like KPConv and PointMLP have pushed accuracy and robustness even further, but they often come with a hefty number of parameters and complex calculations. Many of these rely on Multi-Layer Perceptrons (MLPs), which are great for smaller datasets but can struggle to grasp complex geometric structures and global features in massive point clouds. Plus, they can become slow and memory-hungry.

Early on, we mostly just fed the 3D coordinates (x, y, z) into these models. But then we realized, “Hey, there’s more to geometry than just coordinates!” So, folks started adding richer info like normal vectors (which way a surface is facing), curvature, and how points relate to their neighbors. Models like GBN and EIP did this, giving the network more prior knowledge. But, you guessed it, this often meant more computation.

Enter the Transformer: A New Hope (With a Catch)

Then, Transformers came along and shook up the AI world, especially in natural language processing. We thought, “Can we use these for point clouds?” And yes, we can! Transformers use something called self-attention to figure out how different parts of the input relate to each other, even if they’re far apart. This is super handy for point clouds, which don’t have a natural order. Models like PCT and Point Transformer started using this to fuse local and global info, and it worked pretty well! Point-BERT even borrowed ideas from language models for self-supervised pretraining. Stratified Transformer and SAT3D also brought cool ideas to the table for efficiency and precision.

But here’s the catch with standard Transformers: that self-attention mechanism, while powerful, needs to compare every single point with every other point. If you have N points, the computation and memory grow with N squared, i.e. O(N²). For large point clouds, this can be painfully slow and resource-intensive, making real-time applications a tough nut to crack.
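A quick back-of-the-envelope calculation shows how fast that quadratic term bites. The sketch below only counts the memory for one dense N×N score matrix, assuming 4-byte floats; the helper name `attention_scores_bytes` is made up for this illustration.

```python
def attention_scores_bytes(n_points, bytes_per_value=4):
    """Memory for one dense N x N attention score matrix (float32 by default)."""
    return n_points * n_points * bytes_per_value

for n in (1024, 4096, 16384):
    mib = attention_scores_bytes(n) / 2**20
    print(f"{n:>6} points -> {mib:8.1f} MiB per attention head")
```

That works out to 4 MiB at 1,024 points, 64 MiB at 4,096 points, and a full 1 GiB at 16,384 points, per head and per layer, before you have stored a single feature. Double the points and the matrix gets four times bigger.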

My Brainchild: PointGA – Lean, Mean, and Geometrically Keen!

This got me thinking. What if we could build a Transformer that’s not just smart about long-range dependencies, but also deeply understands geometry, and does it all efficiently? And that’s how PointGA (Point Geometric-Awareness) was born! I wanted something lightweight but powerful.

Here’s the secret sauce, broken down:

- Geometric Information Boost (Parameter-Free!): Inspired by some of the earlier work, I first expanded the raw 3D coordinates. We’re talking about calculating things like direction vectors between nearby points, normal vectors (which way a tiny patch of surface is facing), and even local curvature. This gives the network a richer understanding of the local geometry right from the start, with minimal extra computational cost because it’s a parameter-free step.

- Trigonometric Position Encoding (Fancy Math for Spatial Smarts): Since points are just floating in space, we need to give the model a good sense of their position. I designed a new trigonometric position encoding module. It uses sine and cosine functions (remember those from math class?) to map point coordinates into a higher-dimensional space. The beauty of trig functions is their periodic and continuous nature, which helps capture spatial structures more effectively. It’s like giving each point a unique, rich address that also tells you about its neighborhood.

- Positional Differential Self-Attention (PDA – The Efficiency Whiz): This is where we really tackle that O(N²) problem. Instead of the standard self-attention, I developed the Positional Differential Self-Attention (PDA) mechanism. The key idea is to compute attention weights from the element-wise subtraction between point features, rather than from dot-product similarity between every pair of points. This brings the complexity down to linear time, O(N), in the number of points! So, it’s much faster and more scalable, especially for large point clouds, while still being great at refining features.

So, PointGA is all about combining these three ideas: beefing up the input with more geometric info, using smart positional encoding, and then applying an efficient self-attention mechanism to learn the relationships. It’s a simple framework, but as you’ll see, it’s pretty effective!

The Nitty-Gritty: How PointGA Works Its Magic

Let’s peek a bit more under the hood. When PointGA gets a point cloud, it first goes through the geometric information expansion module. For each point, we look at its neighbors to calculate those direction vectors, normal vectors, distances, and curvature. This enriched data then feeds into the network.
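Here is a rough NumPy sketch of what a parameter-free expansion like this can look like. It is not the exact recipe from PointGA: I am assuming brute-force KNN, normals taken from a local PCA (the eigenvector with the smallest eigenvalue), and "surface variation" (smallest eigenvalue over the eigenvalue sum) as the curvature proxy. The names `knn_indices` and `expand_geometry` are hypothetical.

```python
import numpy as np

def knn_indices(points, k):
    """Brute-force k nearest neighbors of every point (self excluded)."""
    d2 = ((points[:, None, :] - points[None, :, :]) ** 2).sum(-1)  # (N, N)
    np.fill_diagonal(d2, np.inf)
    return np.argsort(d2, axis=1)[:, :k]                           # (N, k)

def expand_geometry(points, k=16):
    """Parameter-free geometric features from raw xyz (illustrative only)."""
    neighbors = points[knn_indices(points, k)]        # (N, k, 3)
    offsets = neighbors - points[:, None, :]          # (N, k, 3)
    dists = np.linalg.norm(offsets, axis=-1)          # (N, k)
    directions = offsets / np.maximum(dists[..., None], 1e-12)

    # Local PCA: covariance of each neighborhood, then eigen-decomposition.
    centered = neighbors - neighbors.mean(axis=1, keepdims=True)
    cov = np.einsum("nki,nkj->nij", centered, centered) / k   # (N, 3, 3)
    eigvals, eigvecs = np.linalg.eigh(cov)            # eigenvalues ascending
    eigvals = np.clip(eigvals, 0.0, None)

    normals = eigvecs[:, :, 0]                        # smallest-variance axis
    curvature = eigvals[:, 0] / np.maximum(eigvals.sum(axis=1), 1e-12)
    return directions, dists, normals, curvature
```

On a perfectly flat patch the normals come out perpendicular to the plane (up to sign) and the curvature proxy is zero, which is a handy sanity check. Note that the brute-force KNN here is itself quadratic in N; a real pipeline would use a spatial index or a GPU routine instead.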

Next up is the trigonometric position encoding. We take the 3D coordinates, sample some local center points (using Furthest Point Sampling, or FPS), find their k-nearest neighbors (KNN), and then encode their positions using those sine and cosine functions at different frequencies. This helps the model understand relative positions across different scales. This encoded positional info gets combined with other features.
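The FPS, KNN, and encoding steps can be sketched in a few lines of NumPy. Treat this as a sketch under assumptions: the frequency schedule `1 / alpha ** (m / half)` is borrowed from the standard sinusoidal encoding, encoding neighbor offsets relative to their center is my guess at what "relative positions" means here, and all function names are invented for the example.

```python
import numpy as np

def farthest_point_sampling(points, n_samples, start=0):
    """Greedy FPS: repeatedly pick the point farthest from those chosen so far."""
    chosen = np.empty(n_samples, dtype=np.int64)
    chosen[0] = start
    min_d2 = np.full(len(points), np.inf)
    for i in range(1, n_samples):
        d2 = ((points - points[chosen[i - 1]]) ** 2).sum(-1)
        min_d2 = np.minimum(min_d2, d2)   # distance to nearest chosen point
        chosen[i] = int(np.argmax(min_d2))
    return chosen

def group_neighbors(points, center_idx, k):
    """Offsets of the k nearest points around each sampled center: (M, k, 3)."""
    centers = points[center_idx]
    d2 = ((centers[:, None, :] - points[None, :, :]) ** 2).sum(-1)  # (M, N)
    nearest = np.argsort(d2, axis=1)[:, :k]
    return points[nearest] - centers[:, None, :]

def trig_encode(coords, dim_per_axis=8, alpha=1000.0):
    """Map (..., 3) coordinates to (..., 3 * dim_per_axis) sin/cos features."""
    half = dim_per_axis // 2
    freqs = 1.0 / alpha ** (np.arange(half) / half)   # assumed schedule
    angles = coords[..., None] * freqs                # (..., 3, half)
    enc = np.concatenate([np.sin(angles), np.cos(angles)], axis=-1)
    return enc.reshape(*coords.shape[:-1], -1)

rng = np.random.default_rng(0)
cloud = rng.uniform(-1.0, 1.0, size=(256, 3))         # toy data
center_idx = farthest_point_sampling(cloud, n_samples=32)
relative = group_neighbors(cloud, center_idx, k=8)    # (32, 8, 3)
encoded = trig_encode(relative, dim_per_axis=8)       # (32, 8, 24)
```

With this schedule, a larger `alpha` stretches the wavelengths, so nearby and far-apart positions start to look alike, while a smaller `alpha` makes the encoding oscillate faster.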

Then, we do some initial feature extraction using pooling layers (both max and average pooling to capture salient features and global context). Finally, the Positional Differential Self-Attention (PDA) mechanism kicks in. It takes the features and, instead of the usual heavy Q, K, V dot products for attention weights, it uses element-wise subtraction. This is much lighter computationally but still effectively models how points relate to each other based on their feature differences, emphasizing relative positions and enhancing local structure perception.
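Since I have not spelled out the PDA formula in this post, the snippet below is only a hypothetical illustration of the general idea: attention weights built from an element-wise difference between projected features, computed within each local group so that no N×N score matrix is ever formed. The projection matrices, the softmax over neighbors, and the residual connection are all assumptions of this sketch, not the actual PointGA layer.

```python
import numpy as np

def softmax(x, axis):
    x = x - x.max(axis=axis, keepdims=True)   # for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def difference_attention(center_feats, neighbor_feats, w_q, w_k, w_v):
    """Subtraction-based attention inside local groups (illustrative).

    center_feats:   (M, C)    one feature per sampled center
    neighbor_feats: (M, K, C) features of the K neighbors of each center
    Cost grows with M * K, i.e. linearly in the number of points for fixed K.
    """
    q = center_feats @ w_q                    # (M, C)
    k = neighbor_feats @ w_k                  # (M, K, C)
    v = neighbor_feats @ w_v                  # (M, K, C)
    diff = q[:, None, :] - k                  # element-wise subtraction
    weights = softmax(diff, axis=1)           # normalize over the K neighbors
    return center_feats + (weights * v).sum(axis=1)   # residual update, (M, C)

rng = np.random.default_rng(0)
M, K, C = 4, 8, 16
w_q, w_k, w_v = (0.1 * rng.normal(size=(C, C)) for _ in range(3))
centers = rng.normal(size=(M, C))
neighbors = rng.normal(size=(M, K, C))
refined = difference_attention(centers, neighbors, w_q, w_k, w_v)  # (M, C)
```

The contrast with dot-product attention is that the weights here are per channel and come from a cheap subtraction, and each center only ever looks at its own K neighbors.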

For tasks like classification, this process is repeated a few times, downsampling and aggregating features, until we get a final representation. For semantic segmentation (where we label every point in the cloud), we have a slightly different setup with an encoder-decoder-like structure to get per-point predictions, but the core ideas of geometric awareness and efficient attention remain.

Putting PointGA to the Test: Did It Work?

Okay, so I had this cool-sounding model. But the proof is in the pudding, right? I needed to see how it stacked up against other methods. So, I threw PointGA at some standard (and some really tough!) benchmark datasets.

Classification: Telling a Chair from a Table

For point cloud classification, I used two main datasets:

- ScanObjectNN (PB_T50_RS variant): This one’s a beast! It’s made from real-world 3D scans, so it has all the lovely noise, missing parts, and occlusions you’d expect in reality. It’s a great test of robustness.

- ModelNet40: This is a classic, cleaner dataset of CAD models across 40 categories. Good for checking fundamental shape understanding.

On ScanObjectNN, PointGA achieved an overall accuracy of 87.6%! This was better than many existing methods, including classics like PointNet and PointNet++, and even some more recent Transformer-based models. What’s really neat is that PointGA did this using only 1024 points per object and without needing normal vectors as input, while some others used way more points or extra info. And get this: PointGA has only 1.73M parameters. That’s tiny compared to monsters like DGCNN (21.0M) or DualMLP (14.3M)! So, it’s not just accurate, it’s efficient.

On ModelNet40, PointGA also did really well, hitting 93.8% overall accuracy. Again, this was competitive with or better than many state-of-the-art methods, often with significantly fewer parameters. It shows that the geometric smarts and efficient attention really pay off.

I even did a fun little experiment: I took a point cloud object, fed it through PointGA to get its feature representation, and then searched for the most similar objects in the feature space. The results were pretty cool! Even if the retrieved shapes had some different details (like chair legs or airplane wings), the overall shape and meaning were spot on. This tells me PointGA is learning strong, meaningful features.
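If you want to try this kind of retrieval yourself, the lookup is just a nearest-neighbor search in feature space. In this sketch I am assuming cosine similarity as the metric and random vectors standing in for real network features; `retrieve` is an illustrative helper name.

```python
import numpy as np

def retrieve(query, gallery, top_k=5):
    """Indices and cosine similarities of the top_k gallery features."""
    q = query / np.linalg.norm(query)
    g = gallery / np.linalg.norm(gallery, axis=1, keepdims=True)
    sims = g @ q                           # cosine similarity to every item
    order = np.argsort(-sims)[:top_k]      # most similar first
    return order, sims[order]

rng = np.random.default_rng(0)
gallery = rng.normal(size=(50, 64))                   # stand-in features
query = gallery[7] + 0.01 * rng.normal(size=64)       # slightly perturbed copy
order, sims = retrieve(query, gallery, top_k=3)
```

In this toy setup the perturbed copy of item 7 pulls item 7 back as the top match, which is the behavior you would hope for from two scans of the same shape.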

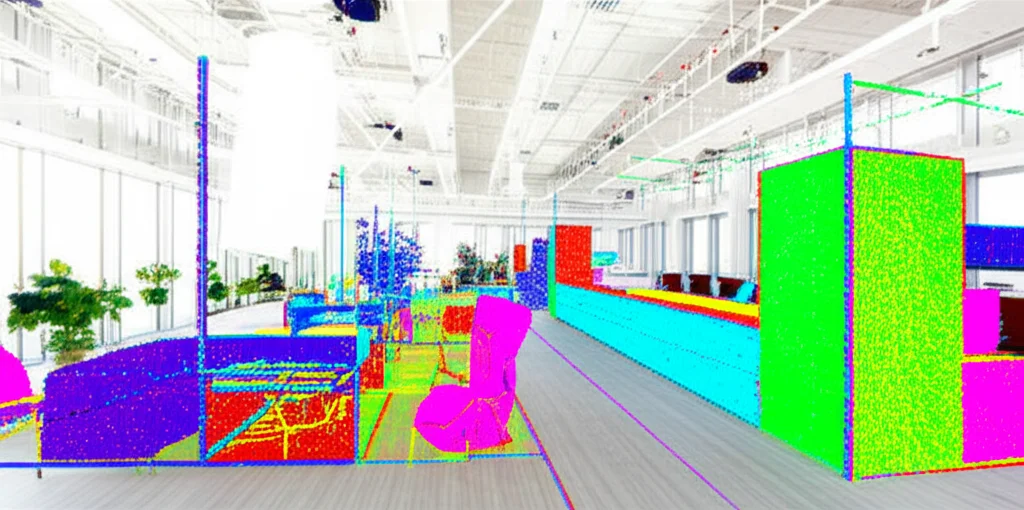

Semantic Segmentation: Painting the Town (or Room) by Numbers

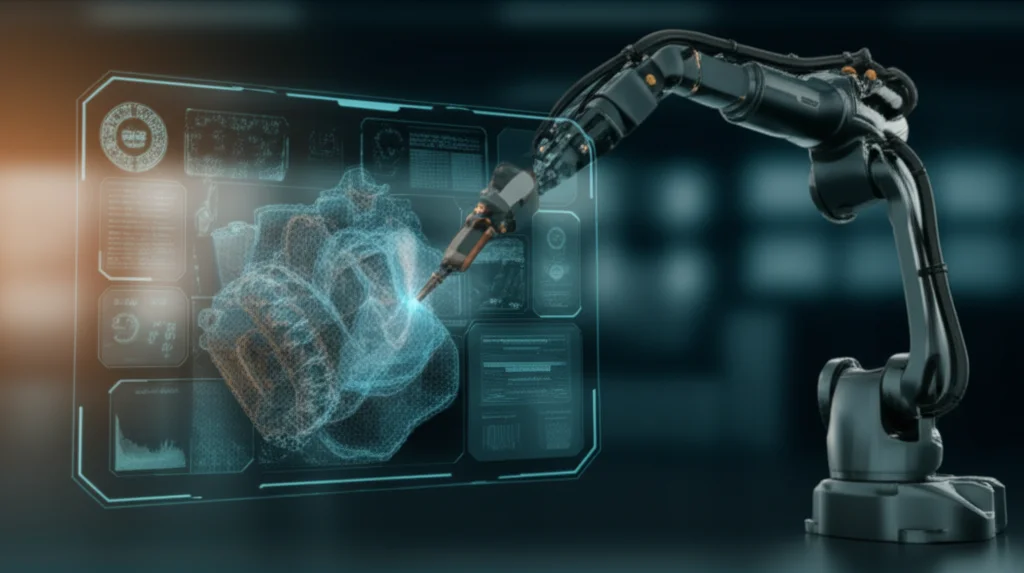

Next up was semantic segmentation, using the S3DIS dataset (Area 5 for testing). This dataset has 3D scans of indoor spaces, and the goal is to label every point with its category (wall, floor, chair, table, etc.). This is a much more detailed task.

PointGA achieved a mean Intersection over Union (mIoU) of 66.2% and a mean accuracy (mAcc) of 74.4%. This outperformed many existing methods on both metrics, showing well-balanced performance across different object categories. It particularly shone on complex objects like doors, tables, sofas, and bookcases. The geometric information expansion helps distinguish between things that look similar but are semantically different, and the trigonometric encoding helps define complex boundaries. Even for tricky, irregular “clutter” categories, PointGA did better than many others.
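For anyone fuzzy on the two metrics, here is a small sketch of how mIoU and mAcc are typically computed from per-point labels. Skipping classes that never appear is one common convention and an assumption on my part, and the toy labels below are invented for the example.

```python
import numpy as np

def segmentation_scores(pred, target, n_classes):
    """Return (mIoU, mAcc) from integer per-point predictions and labels."""
    pred, target = np.asarray(pred), np.asarray(target)
    # Confusion matrix: rows are ground-truth classes, columns are predictions.
    conf = np.bincount(target * n_classes + pred, minlength=n_classes**2)
    conf = conf.reshape(n_classes, n_classes)

    tp = np.diag(conf).astype(float)
    gt_count = conf.sum(axis=1).astype(float)
    pred_count = conf.sum(axis=0).astype(float)
    union = gt_count + pred_count - tp

    iou = np.full(n_classes, np.nan)
    acc = np.full(n_classes, np.nan)
    iou[union > 0] = tp[union > 0] / union[union > 0]
    acc[gt_count > 0] = tp[gt_count > 0] / gt_count[gt_count > 0]
    return float(np.nanmean(iou)), float(np.nanmean(acc))

target = np.array([0, 0, 1, 1, 2, 2])   # toy ground truth
pred = np.array([0, 1, 1, 1, 2, 0])     # toy predictions
miou, macc = segmentation_scores(pred, target, n_classes=3)
```

For these six toy points the per-class IoUs are 1/3, 2/3, and 1/2, giving an mIoU of 0.5, while the per-class accuracies are 1/2, 1, and 1/2, giving an mAcc of about 0.667. mIoU is the stricter of the two because it also penalizes false positives.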

Visually comparing PointGA’s segmentation results with another Transformer model (PCT), it was clear that PointGA was doing a better job at capturing local details and overall structure, getting closer to the ground truth. That geometric awareness really makes a difference!

Why Does PointGA Tick? A Look Inside (Ablation Studies)

To really understand what makes PointGA work, I did a bunch of “ablation studies.” That’s a fancy way of saying I took parts out or changed parameters to see what happened. These were mostly done on the ScanObjectNN classification task.

- How many neighbors (k) are enough? For the KNN step, I found that k=40 was the sweet spot. Too few, and you don’t get enough local info. Too many, and you get noise and extra computation.

- Do those extra geometric features really help? Oh yes! Starting with just 3D coordinates gave an overall accuracy of 86.9%. Adding direction vectors, then normal vectors and curvature, and finally distances, pushed it up to 87.6%. Each piece of geometric prior knowledge helped! (Interestingly, adding raw neighbor coordinates too early actually hurt, probably because it smoothed out the unique features of each point too soon).

- What about the attention mechanism? I tried removing attention altogether (just MLPs), using standard scalar attention, vector attention, and the PCT self-attention. My proposed Positional Differential Self-Attention (PDA) gave the best accuracy (87.6%) with a good balance of computational cost (1.97G FLOPs, 27.4ms inference). The MLP-only version was fastest but least accurate. Traditional attentions were more expensive.

- Tuning the trig encoding (alpha): That frequency scaling factor, alpha, in the trigonometric encoding matters. I found alpha=1000 worked best. Too small, and you get high-frequency wiggles that mess up local smoothness. Too large, and different positions get mapped to similar embeddings, reducing distinctiveness.

- Pooling strategy: Combining max pooling (captures salient features) and average pooling (retains global context) worked better than either one alone.
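The pooling combination from the last bullet is simple enough to show in a couple of lines. I am assuming here that the two pooled vectors are summed; concatenating them would be an equally plausible choice, and `pool_neighbors` is just an illustrative name.

```python
import numpy as np

def pool_neighbors(neighbor_feats):
    """Collapse (M, K, C) neighbor features to (M, C) with max + mean pooling."""
    strongest = neighbor_feats.max(axis=1)    # most salient response per channel
    average = neighbor_feats.mean(axis=1)     # overall context of the group
    return strongest + average

group = np.array([[[1.0, 2.0],
                   [3.0, 0.0]]])              # one group, two neighbors
pooled = pool_neighbors(group)
```

Here the max is [3, 2] and the mean is [2, 1], so the pooled feature is [5, 3]. Both operations ignore neighbor order, so the combination stays permutation-invariant.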

Real-World Smarts and Where We Go Next

It’s interesting to see how performance can vary. PointGA did great on ModelNet40 (clean CAD models) and S3DIS (real-world indoor scans). The differences often come down to dataset properties. ModelNet40 is great for learning pure shapes. S3DIS has noise, occlusions, and varying densities, which is tougher but more realistic. The types of objects also matter – S3DIS has categories with overlapping shapes (like tables and chairs) which makes it harder than the more distinct categories in ModelNet40.

While PointGA has shown some really promising results on these benchmarks, the real world is always messier. Point clouds can have wild variations in density, noise, and complexity. So, for future work, I’m thinking about how to make it even more robust. Maybe more adaptive feature aggregation techniques that can handle these variations better. Refining how we represent boundaries is also super important for things like autonomous driving, where knowing exactly where an object ends is critical.

Wrapping It Up: PointGA’s Promise

So, there you have it – PointGA! It’s my take on a Transformer-based model that’s designed to be efficient and really understand the geometry and structure in point cloud data. By expanding geometric information, using smart trigonometric positional encoding, and developing that nifty Positional Differential Self-Attention mechanism, PointGA manages to deliver strong performance on tough classification and segmentation tasks, all while keeping computational costs relatively low.

It’s been a fascinating journey, and I believe PointGA lays a solid foundation. There’s always more to do, like making it even better at handling the wildness of real-world data and capturing super fine-grained details. But for now, I’m pretty excited about its potential for large-scale scene understanding and real-time 3D perception. It’s all about making our machines see and understand the 3D world a little bit better, one point cloud at a time!

Thanks for sticking with me through this deep dive. Hopefully, you’ve got a better sense of the cool challenges and innovations happening in the world of 3D point cloud analysis!

Source: Springer