DLRN: Unlocking Chemical Reaction Secrets with Deep Learning

Hey everyone, let’s chat about something pretty cool happening at the intersection of chemistry, biology, physics, and artificial intelligence. We’ve all seen how chemical reactions do their thing, changing over time. But figuring out exactly how they happen, step-by-step, especially when things are happening super fast or involve many different players? That’s a whole different ballgame.

Scientists often use time-resolved techniques – think fancy cameras or sensors that capture changes over tiny fractions of a second – to get data on these dynamic systems. This gives us mountains of data, showing how signals change over time. To make sense of it all, we need models. We combine what we think is happening (our experimental hypothesis) with mathematical and physical models to get a concise picture of the system’s dynamics. This helps us pull out crucial details like the reaction pathways, how fast things are happening (the ‘time constants’), and how much of each molecule or state is present at different times (the ‘species amplitudes’).
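To make ‘time constants’ and ‘species amplitudes’ a bit more concrete, here is a minimal sketch of how a 2D time-resolved dataset can be built from them. It assumes the simplest possible picture, where every species decays independently with a single exponential; the function name, the time constants, and the three-channel spectra are all made up for illustration and are not taken from DLRN.

```python
import math

def simulate_dataset(times, taus, spectra):
    """Build data[t][w] = sum_i exp(-t / tau_i) * spectra[i][w].

    Assumption for illustration: each species decays independently
    (a parallel model), which is the simplest kinetic picture.
    """
    n_channels = len(spectra[0])
    data = []
    for t in times:
        # Population of each species at time t (starts at 1, decays with tau).
        decays = [math.exp(-t / tau) for tau in taus]
        # Signal in each detection channel is the amplitude-weighted sum.
        row = [sum(d * s[w] for d, s in zip(decays, spectra))
               for w in range(n_channels)]
        data.append(row)
    return data

times = [0.0, 1.0, 5.0, 20.0]   # arbitrary time units
taus = [2.0, 10.0]              # hypothetical time constants
spectra = [[1.0, 0.5, 0.0],     # hypothetical amplitudes of species 1
           [0.0, 0.5, 1.0]]     # hypothetical amplitudes of species 2
data = simulate_dataset(times, taus, spectra)
```

Kinetic analysis is the inverse problem: you start from something like `data` and try to recover `taus`, `spectra`, and the way the species are connected.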

The Old Way: A Bit of a Puzzle

Now, traditionally, getting to that final kinetic model has been… well, it can be a bit of a puzzle. It involves a bunch of intermediate steps, testing different ideas and models across lots of experiments. If the system is really complex, some of the intermediate states might be invisible or ‘hidden’, making things even trickier. This approach demands a lot of expertise – you need to be a bit of a data wizard and a modeling guru. And honestly, making a wrong turn at any point during the analysis can lead you down a garden path to an incorrect model and inaccurate results.

Methods like Global Analysis (GA) give us a functional description and the minimum number of time constants needed to describe the data, which is a great start, but it’s just that – a start. To get the real mechanistic details, you usually move to Global Target Analysis (GTA). GTA is powerful because it links the data directly to a kinetic model using differential equations. But here’s the catch: you have to propose and test kinetic models based on existing knowledge and assumptions. This can mean trying out a whole family of models, and sometimes several models might seem to fit the data equally well, leaving you scratching your head trying to pick the ‘best’ one. And the more complex your system is, the more candidate models there are to test, so the process gets harder fast.

Enter DLRN: Our Deep Learning Hero

But what if there was a smarter, faster way to cut through this complexity? That’s where our new kid on the block comes in: the Deep Learning Reaction Network, or DLRN. We designed DLRN as a deep learning-based framework specifically to tackle time-resolved data. Our goal was to create something that could rapidly give us the kinetic reaction network, the time constants, and the amplitudes for a system, performing comparably or even better than classical fitting analysis, and doing it automatically.

We built DLRN on a neural network architecture based on an Inception-Resnet design. We added customized blocks – think of them as specialized brains – for analyzing the Time, Amplitude, and Model information in the data. The idea is simple: you feed DLRN your 2D time-resolved data set (like a spectrum changing over time), and it processes this information through its different blocks.

First, the ‘Model’ block gets to work. It looks at the data and predicts the most probable kinetic model out of a large set of possibilities (we trained it on 102 different potential models!). It gives you this prediction with a confidence score. Once the model is identified, this information is passed to the ‘Time’ and ‘Amplitude’ blocks. These blocks then extract the specific values of the time constants associated with each step in the predicted model and the amplitudes (or ‘spectra’) of the different species involved.
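If you’re wondering what a ‘most probable model with a confidence score’ might look like in code, here is a small sketch. It assumes the Model block ends in one raw score per candidate model and that a softmax turns those scores into probabilities; that is a common classifier design, but it is an assumption here, and the scores below are invented.

```python
import math

def top_k_models(scores, k=3):
    """Return [(model_index, probability), ...], most probable first."""
    # Softmax with the usual max-subtraction for numerical stability.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    probs = [e / total for e in exps]
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return [(i, probs[i]) for i in ranked[:k]]

# Hypothetical raw scores for five candidate kinetic models.
scores = [0.1, 2.0, -1.0, 4.0, 0.5]
ranking = top_k_models(scores, k=3)
best_model, confidence = ranking[0]
```

The first entry of `ranking` plays the role of the Top 1 prediction, and the first three entries the Top 3.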

DLRN’s Capabilities: More Than Just Simple Cases

One of the things we’re really excited about is DLRN’s versatility. We tested its utility on datasets with:

- Multiple timescales: Even if a reaction unfolds over nanoseconds, microseconds, and milliseconds, DLRN can analyze each time window individually to reconstruct the full, complex dynamics.

- Complex kinetics: It can handle intricate reaction pathways.

- Different 2D systems: We showed it works not just for time-resolved spectra (like from photoluminescence or transient absorption) but also for things like agarose gel electrophoresis data, which tracks molecular changes over time.

- Hidden initial states: Surprisingly, DLRN can even analyze scenarios where the initial state involved in the reaction isn’t directly visible or emitting a signal – a ‘dark state’. It can figure out the correct model and parameters even then.
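Why can a dark initial state be recovered at all? A quick sketch helps. Assume a hypothetical two-step pathway, dark A → bright B → ground state, where only B emits. The signal then follows the population of B, and its rise is set by the lifetime of the invisible A. The lifetimes below are invented for illustration.

```python
import math

def bright_signal(t, tau_dark, tau_bright):
    """Population of B in A -> B -> ground, with A starting at 1.

    A emits nothing, so B's population is all the detector sees.
    Requires tau_dark != tau_bright.
    """
    k_a, k_b = 1.0 / tau_dark, 1.0 / tau_bright
    return k_a / (k_b - k_a) * (math.exp(-k_a * t) - math.exp(-k_b * t))

# Hypothetical lifetimes in arbitrary units.
tau_dark, tau_bright = 1.0, 5.0
early = bright_signal(0.5, tau_dark, tau_bright)
near_peak = bright_signal(2.0, tau_dark, tau_bright)
late = bright_signal(10.0, tau_dark, tau_bright)
```

The signal starts at zero, rises while A feeds B, then decays, so the dark state leaves its fingerprint in the rise even though it never shows up directly.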

We put DLRN through extensive testing, including on a massive evaluation batch of 100,000 synthetic datasets. The results were pretty compelling. For predicting the correct kinetic model, DLRN achieved high accuracy, getting the exact match (Top 1) over 83% of the time, and having the correct model within its top three predictions (Top 3) over 98% of the time. For predicting time constants and amplitudes, it also showed high accuracy, often predicting values within 10-20% error of the expected values.
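For anyone unfamiliar with the Top 1 and Top 3 scores, here is a minimal sketch of how such numbers are usually computed, along with the relative error for a predicted parameter. The tiny batch of predictions and labels is invented for illustration and has nothing to do with the actual evaluation batch.

```python
def top_k_accuracy(ranked_predictions, true_labels, k):
    """Fraction of samples whose true model is among the first k guesses."""
    hits = sum(1 for ranked, truth in zip(ranked_predictions, true_labels)
               if truth in ranked[:k])
    return hits / len(true_labels)

def relative_error(predicted, expected):
    """Absolute deviation as a fraction of the expected value."""
    return abs(predicted - expected) / abs(expected)

# Hypothetical ranked model indices for four datasets, best guess first.
ranked_predictions = [[3, 1, 4], [2, 0, 5], [7, 8, 9], [1, 3, 2]]
true_labels = [3, 0, 6, 1]

top1 = top_k_accuracy(ranked_predictions, true_labels, k=1)
top3 = top_k_accuracy(ranked_predictions, true_labels, k=3)
tau_error = relative_error(predicted=11.5, expected=10.0)
```

In this toy batch the first guess is right for two of the four datasets, and the true model is in the top three for three of them.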

Putting DLRN Head-to-Head with the Classics

Of course, we had to see how DLRN stacked up against the established methods like classical fitting analysis and tools like KiMoPack. We used a complex dataset for this comparison. The big difference right away? With classical methods, you have to *tell* the software which kinetic model to fit. DLRN figures out the model *itself*.

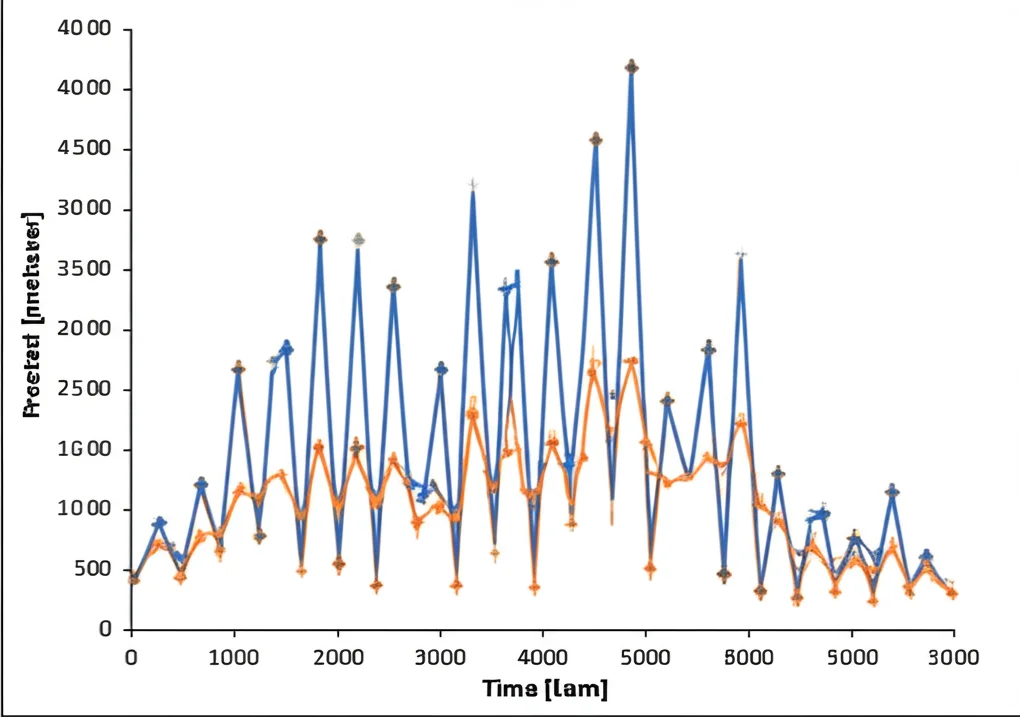

When we compared the results using the *correct* model for the classical methods, DLRN still held its own. It identified the correct model with very high confidence (nearly 99%) and predicted time constants and amplitudes that matched the expected values well. Classical fitting and KiMoPack, while sometimes giving slightly better residual scores (meaning the fit tracked the data a tiny bit more closely), occasionally showed mismatches in the predicted parameters, suggesting they might have found a ‘local minimum’ rather than the true solution. DLRN, on the other hand, consistently found the correct solution and extracted all parameters automatically.

Real-World Applications: NV Centers and DNA Dynamics

Synthetic data is great for training, but the real test is experimental data. We used DLRN to analyze data from two very different systems: Nitrogen-vacancy (NV) centers in diamond and DNA strand displacement (DSD) networks.

NV centers are interesting quantum systems. We looked at their emission dynamics using time-resolved photoluminescence and a more complex branching mechanism using transient absorption spectroscopy. In both cases, DLRN correctly predicted the kinetic models and extracted time constants and amplitudes that were in line with previously reported results. It handled both a simple decay and a more complex branching pathway beautifully.

DNA strand displacement (DSD) networks are fascinating because they use DNA base-pairing rules to create complex reaction networks, kind of like tiny biological computers. Their kinetics can be tricky, especially when other factors like enzymes or dyes are involved. We used DLRN to analyze DSD systems with different complexities, including simple linear pathways and more complex branching ones. DLRN was able to correctly identify the networks, predict the spectral amplitudes, and reconstruct the kinetic traces. Interestingly, in some DSD cases, DLRN’s predicted time constants differed slightly from simple theoretical calculations, and further analysis suggested this might be because the real reaction kinetics were more complex (closer to second-order) than the simple pseudo-first-order assumption used in the comparison. This highlights DLRN’s ability to reveal nuances in the data.
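The gap between pseudo-first-order and true second-order kinetics is easy to see in a small sketch. It assumes a generic bimolecular step A + B → C with rate k·[A]·[B]; the concentrations and rate constants are invented and are not the DSD values.

```python
import math

def exact_second_order(t, a0, b0, k):
    """[A](t) for A + B -> C with rate k * [A] * [B]."""
    if a0 == b0:
        return a0 / (1.0 + k * a0 * t)
    delta = b0 - a0
    return a0 * delta / (b0 * math.exp(delta * k * t) - a0)

def pseudo_first_order(t, a0, b0, k):
    """Approximation that treats [B] as constant at its initial value."""
    return a0 * math.exp(-k * b0 * t)

def relative_gap(t, a0, b0, k):
    exact = exact_second_order(t, a0, b0, k)
    return abs(exact - pseudo_first_order(t, a0, b0, k)) / exact

# B in 100-fold excess: the approximation holds (gap well under 1%).
gap_excess = relative_gap(t=1.0, a0=1.0, b0=100.0, k=0.01)
# Equal concentrations: the approximation breaks down (gap roughly 26%).
gap_equal = relative_gap(t=1.0, a0=1.0, b0=1.0, k=1.0)
```

When one partner is not in large excess, a single exponential with one fixed time constant no longer describes the decay, which fits the kind of mismatch described above.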

Making it Accessible: The G-User-DLRN Interface

We didn’t just want to build a powerful tool; we wanted people to be able to use it easily. So, we developed a Python-based graphical user interface (GUI) called G-User-DLRN. This interface lets users load their preprocessed time-resolved data (spectra or gel images), select the analysis type, and with a click of a button, get the DLRN predictions – the kinetic model, time constants, amplitudes, and even residuals to see how well the prediction fits the original data. It can even show the top 1 or top 3 most probable models predicted by the network, along with their confidence scores.

Limitations and What’s Next

Now, is DLRN perfect? Not yet! Like any new tool, it has its current limits. Right now, DLRN is primarily designed for analyzing first-order or pseudo-first-order kinetic reactions. Tackling higher-order reactions (where the rate depends on the product of two or more concentrations, not just on one) is a significant challenge. Including these would drastically increase the number of possible kinetic models the network needs to consider, requiring substantial architectural changes and a much larger training dataset. Also, while DLRN’s predictions are accurate, the residuals (the difference between the original data and the DLRN fit) could sometimes be improved compared to classical fitting, although as we saw, better residuals don’t always mean more accurate parameters.

These limitations open up exciting avenues for future work. Expanding DLRN to handle higher-order reactions would be a major step, bringing deep learning to bear on an even wider range of complex, non-linear systems. Improving the residual fit is also something we’re looking at.

Wrapping Up

So, what we’ve got here is a powerful new tool in the arsenal for anyone working with time-resolved data and trying to understand the intricate dance of chemical reactions. DLRN offers a fast, automated, and accurate way to extract kinetic models, time constants, and species amplitudes, often performing as well as or better than traditional methods, especially in identifying the correct underlying mechanism without needing prior model assumptions. We believe this framework is a significant step forward in making complex kinetic analysis more accessible and efficient, paving the way for deeper insights into dynamic systems across various scientific fields.

Source: Springer