Hey, Let’s Talk Seeds! Spotting Naughty Coatings with Super Smart AI

Alright, gather ’round, folks, because I want to chat about something that might sound a bit niche but is actually super important for our food supply: sugar beet seeds! And more specifically, how we make sure these tiny powerhouses are in tip-top shape before they even hit the soil. You see, there’s this cool thing called seed coating, and it’s a game-changer in agriculture. But, like anything, it needs to be done right. That’s where some seriously clever tech, powered by deep learning, steps in. Intrigued? You should be!

So, What’s the Big Deal with Seed Coating?

Imagine you’re a tiny sugar beet seed. The world can be a tough place! Pests, diseases, rough handling – it’s a lot. Seed coating is like giving these seeds a little superhero suit. We’re talking about applying a layer of beneficial stuff – maybe fungicides, insecticides, nutrients, or even polymers – right onto the seed’s surface. This isn’t just for show; it’s serious business. A good coating can:

- Boost germination rates (more seeds sprouting, yay!).

- Protect against nasty pests and diseases.

- Make seeds easier to handle and plant, especially with modern machinery.

- Improve overall crop performance and, ultimately, yield.

The global seed coating market is booming, expected to jump from about USD 2.0 billion in 2023 to a whopping USD 3.1 billion by 2028. That’s an 8.5% growth spurt each year! This isn’t surprising when you think about the push for better seed quality, higher crop yields, and more sustainable farming practices. It’s all about giving our crops the best possible start in life.

For sugar beets, which are a massive source of sugar worldwide, the quality of the seed is paramount. Coating helps standardize the seed’s shape and size, making it rounder and heavier, which is perfect for mechanical planters. Think of it as making these irregular little seeds into perfect little spheres that machines can handle with precision. Clay is a common coating material, mixed with water and adhesives that, crucially, don’t stop the seed from germinating once it’s in moist soil.

When Good Coats Go Bad: The Problem of Defects

Now, while seed coating is fantastic, it’s not always perfect. Sometimes, things can go a bit wonky. We can end up with:

- Broken coatings: The protective layer is cracked or chipped. This can reduce the effectiveness of any protective chemicals and make the seed vulnerable.

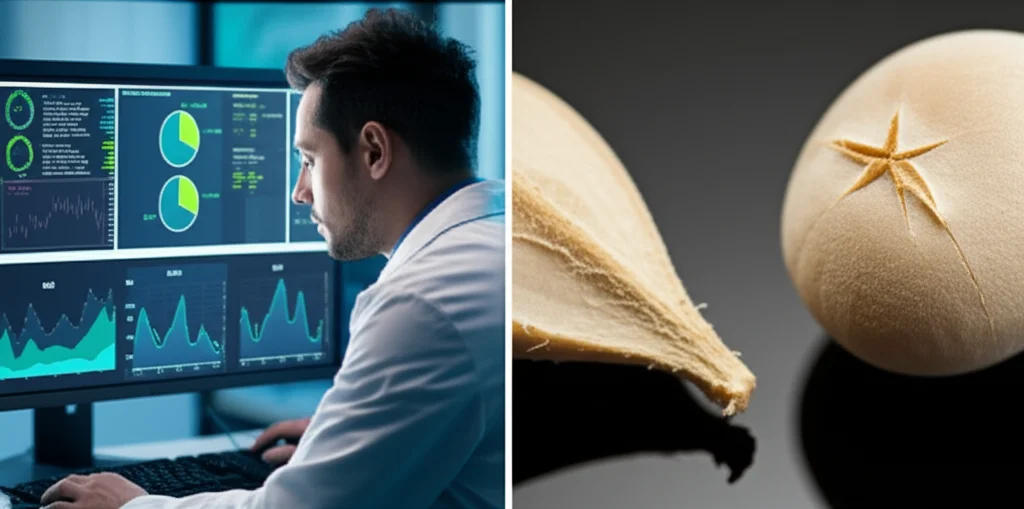

- Star-shaped coatings: These are irregular, often with pointy bits. Not only do they look odd, but they can cause problems in planting machinery, like blocking holes in pneumatic planters or even damaging other seeds.

- Adherent coatings (clumps): This is when seeds stick together, or a single seed gets way too much coating. The goal is one healthy plant per seed, so clumps mean wasted seeds and potential overcrowding.

- Improperly sized coatings: Too much coating can delay germination or even prevent it by stopping moisture from reaching the seed.

These defects aren’t just cosmetic. They can seriously impact seed quality, germination rates, and how evenly plants emerge in the field. This means more costs for farmers and potentially lower yields. Nobody wants that! So, identifying and classifying these defects quickly and accurately is a big deal.

The quality of the coating depends on a whole bunch of factors: the coating material itself, the adhesive used, the original seed size, the target coated size, the coating machine’s features (like drum speed), and even the operator’s experience. It’s a delicate dance of science and skill!

Enter YOLO: The AI Detective for Seed Coats!

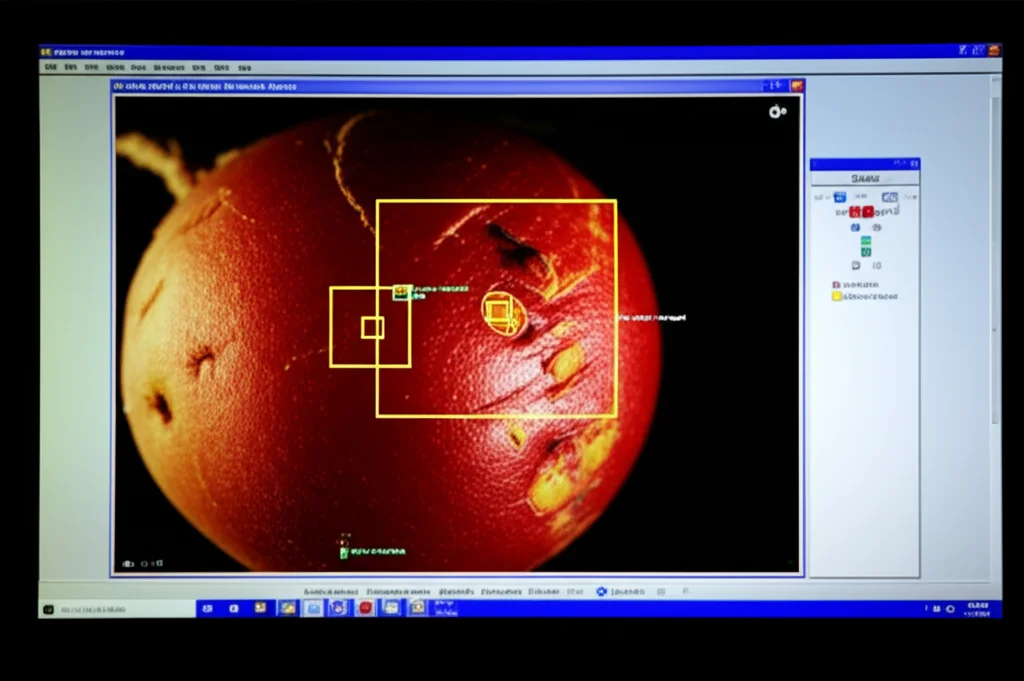

So, how do we spot these pesky defects efficiently? Manually checking thousands, or even millions, of tiny seeds is, well, a bit of a nightmare. It’s slow, tedious, and prone to human error. This is where the magic of deep learning comes in, and specifically an algorithm called YOLO (You Only Look Once). If you’re not familiar, YOLO is a super-fast and accurate object detection algorithm. It can look at an image and, in a flash, identify and classify different objects within it. Think of it as a highly trained detective that can spot a “broken coating” in a crowd of “normal coatings” almost instantly.

Our goal in a recent study was to see if we could use YOLO to categorize coated sugar beet seeds based on these coating defects. We wanted to build a system that could enhance seed quality control swiftly and effectively. And guess what? The results were pretty exciting!

Setting the Stage: How We Trained Our AI

To teach our AI, we first needed a good dataset. We took 2000 high-resolution (3000×4000 pixels!) RGB images of coated sugar beet seeds. These weren’t just any snaps; they were taken in a special “shooting box” with an open top, under constant 1150 lx daylight conditions. This controlled environment helps ensure the images are consistent, which is crucial for training an AI. We even rotated each seed in 90-degree steps to capture it from all sides – a full 360° view! This helps the model learn to recognize defects no matter how the seed is oriented.

The seeds were classified into four categories:

- Normal coating: The good guys, perfectly coated.

- Broken coating: Seeds with cracks or chips.

- Star-shaped coating: Those irregular, pointy ones.

- Adherent coating: Clumped seeds or those with excessive coating.

We used 80% of these images for training our AI models and kept 20% aside for validation (testing how well it learned). We specifically experimented with three versions of the latest YOLO algorithm: YOLOv10-N (Nano), YOLOv10-L (Large), and YOLOv10-X (Extra-Large). These versions vary in size and complexity, offering different trade-offs between speed and accuracy.
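If you want to picture that 80/20 split, here is a minimal sketch. The file names, the shuffling seed, and the `split_dataset` helper are all made up for illustration; the study does not say how the images were actually assigned to each group.

```python
import random


def split_dataset(items, train_fraction=0.8, seed=42):
    """Shuffle items reproducibly, then split them into train and validation lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = int(round(len(shuffled) * train_fraction))
    return shuffled[:cut], shuffled[cut:]


# Hypothetical file names standing in for the 2000 seed images.
image_names = [f"seed_{i:04d}.jpg" for i in range(2000)]
train, val = split_dataset(image_names)
print(len(train), len(val))  # 1600 400
```

Fixing the seed means the same images land in the same group every run, which keeps comparisons between the three model sizes fair.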

Why YOLOv10? Well, the YOLO family of algorithms is known for its rapid development and excellent performance in real-time applications. We’re always looking for the cutting edge, and YOLOv10 represents that. Our hypothesis was that we could achieve high success rates and speeds with this newer algorithm, even with high-resolution images.

The criteria for our classification were directly linked to real-world farming needs:

- Criterion 1: Single embryo in each grain. Clumping (adherent coating) means more than one seed, which increases costs.

- Criterion 2: Homogeneous diameter and germination. Oversized coatings can delay or prevent germination.

- Criterion 3: Chemical protection. Broken coatings can reduce the effectiveness of protective treatments.

- Criterion 4: Planting evenness. Star-shaped coatings can mess with planting machines and lead to gaps in the field.

A Peek Under the Hood: How YOLOv10 Works Its Magic

Now, for a little bit of the techy stuff (I’ll keep it light, I promise!). YOLOv10 has some clever tricks up its sleeve. One of the big improvements is a training scheme that makes NMS unnecessary at inference time. NMS (Non-Maximum Suppression) is a post-processing step traditionally used to get rid of redundant detections of the same object, but it can add delays. YOLOv10 uses a dual-label assignment strategy, combining one-to-many and one-to-one matching. During training, the one-to-many head gives the model lots of examples to learn from, while the one-to-one head learns to commit to a single prediction per object. But for inference (when it’s actually doing the detecting), it can use just the one-to-one head, which means no NMS needed! This makes it more efficient.
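To get a feel for the step YOLOv10 gets to skip, here is a rough sketch of classic greedy NMS in plain Python. It is a generic textbook version, not code from YOLOv10 or from the study, and the boxes, scores, and 0.5 overlap threshold are invented for the example.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: visit boxes from best to worst score and keep a box
    only if it does not overlap an already kept box too much."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


# Two near-duplicate detections of one seed plus one separate seed (made-up numbers).
boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.90, 0.75, 0.80]
kept = nms(boxes, scores)
print(kept)  # [0, 2]
```

Every kept box has to be compared against the candidates that follow, so this cleanup costs extra time on crowded images. A one-to-one head that emits a single box per object avoids the loop altogether.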

It also has a lightweight architecture for its detection head. The part of the AI that actually says “this is a broken coating” is designed to be less computationally heavy without sacrificing performance. The YOLOv10 designers have also been smart about how the model shrinks feature maps as it goes deeper: downsampling is split into a pointwise convolution (which adjusts the number of channels) followed by a depthwise convolution (which reduces the spatial resolution). This reduces computational cost and parameter count while retaining more information – pretty neat for improving speed and accuracy.
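Here is some back-of-the-envelope arithmetic on why the pointwise-then-depthwise trick saves parameters. The layer width of 256 channels and the 3×3 kernel are assumptions chosen for illustration, not values reported in the study, and biases and normalization layers are ignored.

```python
def standard_downsample_params(c_in, c_out, k=3):
    """Weights in one ordinary k x k stride-2 convolution (bias ignored)."""
    return k * k * c_in * c_out


def decoupled_downsample_params(c_in, c_out, k=3):
    """Weights in a 1x1 pointwise conv (c_in -> c_out) followed by a
    k x k depthwise stride-2 conv over the c_out channels (bias ignored)."""
    pointwise = c_in * c_out
    depthwise = k * k * c_out
    return pointwise + depthwise


c = 256  # assumed channel width
standard = standard_downsample_params(c, 2 * c)
decoupled = decoupled_downsample_params(c, 2 * c)
print(standard, decoupled)  # 1179648 135680
print(f"{standard / decoupled:.1f}x fewer weights")
```

The depthwise step filters each channel on its own, so its cost grows with the number of channels rather than with the product of input and output channels. That is where most of the saving comes from.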

YOLOv10 also uses something called a Compact Inverted Block (CIB) structure. This is all about making the model more efficient by identifying and reducing redundancy in how data is processed, especially in deeper layers of the network. It’s like making sure every part of the AI brain is working as efficiently as possible.

And to boost accuracy without adding too much computational baggage, YOLOv10 selectively uses large-kernel convolutions (to see a wider area of the image) and an effective Partial Self-Attention (PSA) module. PSA helps the model understand the global context of an image – how different parts relate to each other – which can be super helpful for distinguishing subtle defects. It does this by dividing features, processing one part with self-attention (which is great for global understanding but can be computationally intensive), and then merging it back. By placing PSA strategically after later stages where the image resolution is lower, it gets the benefits without a huge computational hit.
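Why does resolution matter so much for self-attention? Every position in the feature map attends to every other position, so the work grows with the square of the number of positions. The quick count below assumes a 640×640 network input and the usual downsampling strides of 8, 16, and 32. Both are assumptions for illustration, since the study's training resolution isn't given here.

```python
def attention_pairs(height, width):
    """Number of query-key pairs when every position attends to every position."""
    tokens = height * width
    return tokens * tokens


input_size = 640  # assumed network input size, not taken from the study
pairs_by_stride = {}
for stride in (8, 16, 32):
    side = input_size // stride
    pairs_by_stride[stride] = attention_pairs(side, side)
    print(f"stride {stride}: {side}x{side} map, {pairs_by_stride[stride]:,} pairs")
```

Under these assumptions the lowest-resolution map needs 256 times fewer comparisons than the stride-8 map. Running attention on only part of the channels trims the cost further.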

All these architectural innovations mean YOLOv10 can come in various sizes – from the tiny YOLOv10-N for resource-constrained environments to the powerhouse YOLOv10-X for maximum accuracy – offering a great toolkit for different needs.

The Results Are In! How Did Our AI Do?

So, after all that training, how did our YOLOv10 models perform? Pretty darn well, I’m happy to report!

Let’s talk accuracy first. The YOLOv10-X model was the star performer here:

- Normal coating: 93% accuracy

- Broken coating: 94% accuracy

- Star-shaped coating: 94% accuracy

- Adherent coating: 95% accuracy

The YOLOv10-L model was also very strong, with accuracies mostly in the 92-94% range. Even the smallest model, YOLOv10-N, did commendably, with accuracies like 89% for normal, 85% for broken, 93% for star-shaped, and 92% for adherent coatings.

Now, what about speed? This is where the smaller models shine. The YOLOv10-N model was the speed demon:

- Normal coating: 11.5 milliseconds (ms) per detection

- Broken coating: 11.7 ms

- Star-shaped coating: 11.4 ms

- Adherent coating: 11.9 ms

To put that in perspective, there are 1000 milliseconds in a second. So, YOLOv10-N is making these decisions incredibly fast! The larger models, YOLOv10-L and YOLOv10-X, were a bit slower, with inference times generally in the 17-19 ms range. This is the classic trade-off: the most accurate models are often a bit more computationally intensive, and thus a tad slower.
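Turning those milliseconds into "detections per second" is simple division. The sketch below uses the YOLOv10-N timings listed above. Treat the result as a theoretical ceiling for a single stream, since it ignores image loading, transport, and anything else a real sorting line would add.

```python
def detections_per_second(latency_ms):
    """Convert a per-detection latency in milliseconds to a rate per second."""
    return 1000.0 / latency_ms


# YOLOv10-N inference times reported above, in milliseconds.
yolov10n_latency_ms = {
    "normal": 11.5,
    "broken": 11.7,
    "star-shaped": 11.4,
    "adherent": 11.9,
}

for name, ms in yolov10n_latency_ms.items():
    print(f"{name}: about {detections_per_second(ms):.1f} per second")

mean_latency = sum(yolov10n_latency_ms.values()) / len(yolov10n_latency_ms)
print(f"mean latency: {mean_latency:.3f} ms")
```

So the Nano model lands somewhere in the mid-80s of detections per second, while a model running at 17-19 ms would manage roughly 53 to 59.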

We also looked at precision, recall, and F1 scores. Precision asks how many of the seeds the model labeled as a given class really belonged to it, recall asks how many seeds of that class the model actually caught, and F1 combines the two into a single number. The confusion matrices (which show where the model gets confused – e.g., mistaking a broken coating for a normal one) helped us understand its performance in detail. Overall, the results showed that these YOLOv10 models are very capable of accurately and quickly identifying different types of sugar beet seed coating defects.
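For the curious, this is roughly how precision, recall, and F1 fall out of a confusion matrix. The counts below are invented purely to show the arithmetic; they are not the study's numbers.

```python
CLASSES = ["normal", "broken", "star-shaped", "adherent"]

# Hypothetical counts, NOT the study's results.
# Rows are the true class, columns are the predicted class.
confusion = [
    [90, 5, 3, 2],
    [6, 88, 4, 2],
    [2, 3, 94, 1],
    [1, 2, 2, 95],
]


def per_class_metrics(matrix, index):
    """Return (precision, recall, f1) for the class at the given index."""
    tp = matrix[index][index]
    fp = sum(row[index] for row in matrix) - tp  # predicted as this class, but wrong
    fn = sum(matrix[index]) - tp                 # this class, but predicted as another
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


for i, name in enumerate(CLASSES):
    p, r, f = per_class_metrics(confusion, i)
    print(f"{name}: precision={p:.3f} recall={r:.3f} f1={f:.3f}")
```

Reading down a column tells you what the model tends to over-call, and reading across a row tells you what it tends to miss.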

What Makes This Special? And What’s Next?

You might be wondering if others have done similar things. While there’s research on detecting seed issues, our work on advanced classification of coated sugar beet seeds using the very latest YOLOv10 algorithm is quite novel. Many previous studies might have used older algorithms or focused on different types of seeds or fewer defect categories. For example, one study on coated red clover seeds using YOLOv5s reported high accuracies for categories like “qualified,” “seedy,” and “broken.” Our study dived deeper with four distinct defect types for sugar beet seeds, which have their own unique characteristics.

The use of high-resolution images is also a key point. More pixels mean more detail, which helps the AI better discern subtle differences in shape, color, and texture that define a defect. While some research uses public databases, these can sometimes be outdated or have lower-quality images. By creating our own original, high-resolution database, we could really push the algorithms and get a clearer picture of their capabilities.

This research is really the first step. We’ve shown that the AI models work well in a controlled lab environment. The next stage would be to think about how to integrate this into a real-world production line, perhaps in a fully enclosed system with even more optimized lighting. Imagine a machine that automatically sorts seeds, guided by this AI, ensuring only the best ones make it through!

There’s always room for improvement, of course. Future work could involve:

- Training models on even more diverse samples, considering different sugar beet varieties or coating formulations.

- Investigating the impact of different lighting conditions and camera angles to make the system even more robust.

- Collaborating with seed processing companies to tailor the technology to their specific needs.

The beauty of deep learning algorithms like YOLO is that they are constantly evolving. What we’ve achieved with YOLOv10 is a big step, and future versions will likely bring even more power and efficiency. It’s a fast-moving field!

Why This Matters for All of Us

Okay, so we can detect dodgy seed coatings with AI. Cool. But why should you care? Well, it all comes back to the quality and efficiency of our food production.

Better seed quality means:

- Higher crop yields: More food from the same amount of land.

- Reduced waste: Fewer seeds failing to germinate or produce healthy plants.

- Lower costs for farmers: More efficient planting and less need for replanting or dealing with patchy fields.

- More sustainable agriculture: Optimizing seed performance can contribute to using resources more effectively.

Seed coating itself is a delicate art. The formulation, the application method, the environmental conditions in the coating facility – they all play a role. Even slight changes in temperature can affect how materials flow or adhere. If errors aren’t caught early, it can lead to significant losses. Image processing technologies, like the one we’ve explored, can act as an ever-vigilant quality control inspector, catching problems before they escalate.

Think about it: if a batch of seeds has a high percentage of, say, star-shaped coatings, a system like this could flag it immediately. This allows operators to investigate and fix the issue in the coating process, whether it’s an adjustment to the machine, the material dosage, or something else. This proactive approach is far better than discovering problems way down the line when seeds are already packaged or, worse, planted.
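A batch check like that could be as simple as counting predicted labels and comparing each defect's share against a limit. Everything here is hypothetical: the `flag_batch` helper, the 5% limit, and the sample batch are placeholders that a real seed processor would replace with their own tolerances.

```python
from collections import Counter


def flag_batch(predicted_labels, max_defect_share=0.05):
    """Return the defect classes whose share of the batch exceeds the limit."""
    total = len(predicted_labels)
    if total == 0:
        return {}
    counts = Counter(predicted_labels)
    return {
        label: count / total
        for label, count in counts.items()
        if label != "normal" and count / total > max_defect_share
    }


# Made-up batch of 1000 predictions with a suspicious number of star-shaped coatings.
batch = ["normal"] * 880 + ["star-shaped"] * 90 + ["broken"] * 20 + ["adherent"] * 10
flags = flag_batch(batch)
print(flags)  # {'star-shaped': 0.09}
```

An empty result means the batch is within tolerance, and anything else tells the operator which defect to chase first.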

It’s also important to remember that different seeds have different characteristics. A model trained for sugar beet seeds might not work perfectly for, say, corn or soybean seeds without retraining or adaptation. So, there’s a need to develop specific models for different seed varieties and their unique coating challenges.

The Bigger Picture: AI in Agriculture

This work is just one example of how AI and deep learning are revolutionizing agriculture. From precision irrigation and pest detection in the field to quality control in processing facilities, AI is helping us make farming smarter, more efficient, and more sustainable. It’s not about replacing human expertise but augmenting it, giving farmers and producers powerful new tools.

The ability to rapidly and accurately assess something as fundamental as seed coating quality can have a ripple effect throughout the agricultural value chain. It helps ensure that the very foundation of our crops is as strong as it can be. And when farmers start with high-quality seeds, they’re set up for a much better chance of success, which ultimately benefits all of us who rely on the food they grow.

So, the next time you enjoy something sweet, remember the humble sugar beet, and the incredible journey its seed might have taken – possibly even scrutinized by a super-smart AI like YOLO to make sure its little coat was just perfect! It’s a fascinating blend of nature and cutting-edge technology, all working together to feed the world. And I, for one, think that’s pretty amazing.

Ultimately, by paying close attention to details like seed coating and leveraging advanced technologies to get it right, we’re not just improving crop yields; we’re contributing to a more resilient and efficient agricultural system for generations to come. And that’s a sweet deal for everyone!

Source: Springer