Can an AI Voice Really Touch Your Brain? What Science Says About Familiar Sounds

Hey There, Let’s Talk About Voices!

You know how AI voices are getting spooky good these days? Like, *really* good. It’s not just Siri telling you the weather anymore; we’re talking about AI that can mimic specific people. Pretty neat, right? But it also brings up some interesting questions. We’ve all heard the cautionary tales about voice cloning scams – fraudsters using AI to sound like someone you trust. That’s the scary side.

But what about the *good* side? Could these incredibly realistic AI voices be used for something positive? Like, maybe for emotional support or making technology feel a bit more human? Think about companion robots for the elderly, or mental health apps. Voice is a huge part of human connection, right? We recognize loved ones by their voice instantly. So, if AI can replicate that, what does it *do* to us? Especially when it sounds like someone really familiar, like… well, like your mom?

Turns out, that’s exactly what a team of researchers wanted to find out. They decided to dive deep and see if an AI-synthesized voice of a loved one could actually trigger something real in our brains. Not just an “oh, that sounds like…” moment, but actual neural responses. And they used some cool tech to do it.

Peeking Inside Your Head with fNIRS

To figure this out, they needed a way to see what the brain was doing while people listened to these AI voices. Forget bulky MRI machines for a moment; they used something called fNIRS. Don’t let the fancy name scare you! Functional near-infrared spectroscopy (fNIRS) is basically a non-invasive way to measure brain activity: it shines near-infrared light through the scalp and tracks changes in blood oxygenation in the outer layers of the brain. When a brain area is working harder, it needs more oxygen, and fNIRS can pick up on those changes. It’s quiet and portable, which makes it a better fit for studies involving sound than a noisy fMRI scanner.
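For the curious, here’s roughly what is going on under the hood. The article doesn’t say how this particular study processed its signals, but fNIRS systems commonly rely on the modified Beer-Lambert law, which relates a change in light attenuation at a given wavelength to changes in oxygenated (HbO) and deoxygenated (HbR) hemoglobin. The symbols below are standard textbook notation, not something taken from the study:

```latex
\Delta OD(\lambda) =
  \bigl( \varepsilon_{\mathrm{HbO}}(\lambda)\,\Delta[\mathrm{HbO}]
       + \varepsilon_{\mathrm{HbR}}(\lambda)\,\Delta[\mathrm{HbR}] \bigr)
  \, d \cdot \mathrm{DPF}(\lambda)
```

Here the ε terms are extinction coefficients, d is the distance between light source and detector, and DPF is the differential pathlength factor. Measuring at two wavelengths gives two equations, which is enough to solve for both hemoglobin changes.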

They focused on two key areas of the brain: the prefrontal cortex and the temporal cortex. Why these two? Well, the prefrontal cortex is kind of like the brain’s command center for things like cognitive control and emotional regulation. The temporal cortex, on the other hand, is heavily involved in processing language and, importantly, emotional memory. The idea was, if a voice is familiar and potentially emotional, these areas should show some action.

Their hypothesis was pretty straightforward: hearing an AI voice that sounds like a loved one (specifically, a mother) would cause a stronger neural response in these brain regions compared to hearing unfamiliar AI voices. And maybe, just maybe, that response would be tied to emotional reactions or memories.

The Setup: AI Moms and Blood-Oxygen Signals

So, how did they run this experiment? First off, they needed those AI voices. They used a model called GPT-SoVITS, which is pretty good at cloning voices from just a small sample. They collected audio samples from the participants’ mothers. Yes, their actual moms! Then, they used the AI to synthesize the mother’s voice reading a specific text – a letter from a mother to her son, which feels pretty fitting, right? They also synthesized two other voices using publicly available samples: a standard middle-aged woman’s voice and a sweet, younger female voice. This gave them the ‘familiar’ voice (AI mom) and ‘unfamiliar’ voices (AI middle-aged woman, AI sweet voice) to compare.

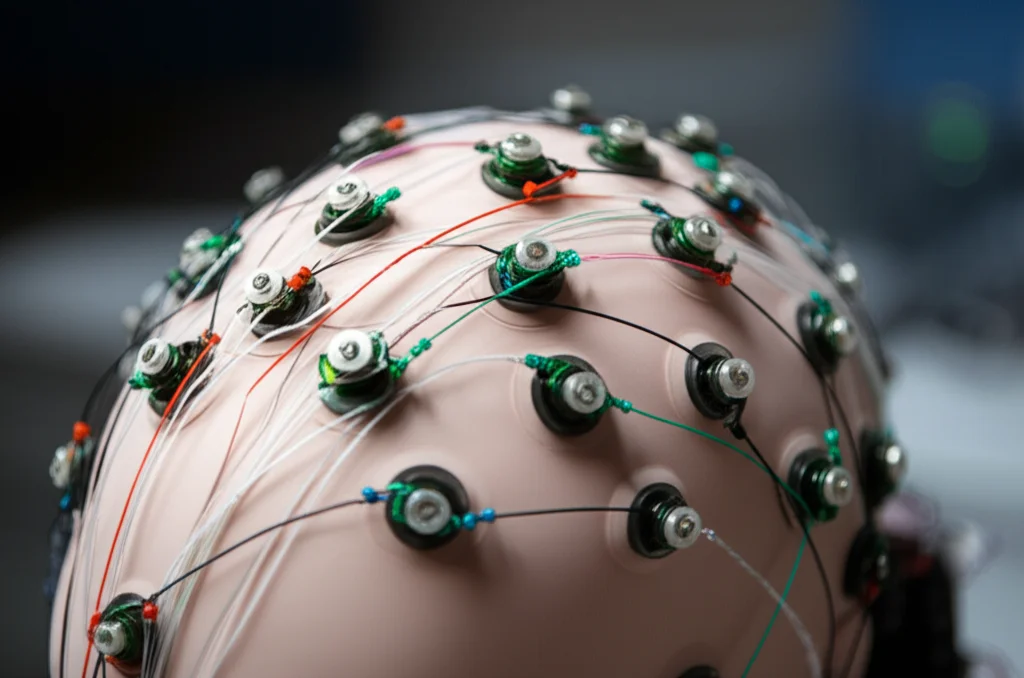

They recruited a group of young adults (mostly students) with normal hearing and no neurological issues. They hooked them up with the fNIRS cap – it looks a bit like a swim cap with wires – positioned over those prefrontal and temporal areas. The participants sat in a quiet room, closed their eyes, and just listened. The experiment cycled between periods of rest and periods of listening to the AI voices (first an unfamiliar one, then the familiar AI mom voice). All while the fNIRS gadget was recording the changes in blood oxygen levels.
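To make the rest-then-listen idea concrete, here is a minimal sketch of how a block-design recording could be summarized: for each listening block, take the average signal and subtract the average of the rest period just before it. This is an assumption about a typical analysis, not the study’s actual pipeline. The function name `block_response`, the sample indices, and the signal values are all made up for illustration.

```python
from statistics import mean

def block_response(signal, blocks, baseline_len):
    """Return, per listening block, mean(listening) - mean(preceding rest).

    signal       -- list of oxygenated-hemoglobin samples (hypothetical units)
    blocks       -- list of (start, end) sample indices for listening periods
    baseline_len -- number of rest samples before each block used as baseline
    Assumes every block is preceded by at least one rest sample.
    """
    responses = []
    for start, end in blocks:
        baseline = signal[max(0, start - baseline_len):start]
        listening = signal[start:end]
        responses.append(mean(listening) - mean(baseline))
    return responses

# Synthetic trace: rest, unfamiliar voice, rest, familiar voice (invented values).
signal = [0.0] * 5 + [1.0] * 5 + [0.0] * 5 + [2.0] * 5
blocks = [(5, 10), (15, 20)]

responses = block_response(signal, blocks, baseline_len=5)
print(responses)
```

Subtracting the preceding rest period is one simple way to keep slow drifts in the signal from being mistaken for a reaction to the voice.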

After the listening part, they asked the participants about their experience, especially when they heard the AI mother’s voice. Did they feel anything? Did they remember anything specific?

Okay, So What Happened?

This is where it gets interesting. When they crunched the fNIRS data, the results were pretty clear. In both experiments (comparing AI mom to the middle-aged woman, and comparing AI mom to the sweet young woman), the AI-synthesized maternal voice caused significantly *higher* activation in both the prefrontal and temporal cortices. We’re talking about a noticeable increase in oxygenated hemoglobin – the signal that says, “Hey, this part of the brain is busy!”

The statistical analysis backed this up, showing that the type of voice had a significant impact on brain activity. It wasn’t just random noise; the familiar AI voice was genuinely triggering a stronger response in those brain areas linked to emotion, memory, and processing familiar sounds.
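The article doesn’t say which statistical test the researchers ran, so treat the following as a stand-in, not their method. One common way to compare two conditions measured in the same people is a paired t-test on the per-participant differences. The sketch below computes that statistic with the standard library only. The function name `paired_t` and the activation numbers are invented for illustration.

```python
from math import sqrt
from statistics import mean, stdev

def paired_t(familiar, unfamiliar):
    """Paired t statistic and degrees of freedom for two matched samples."""
    if len(familiar) != len(unfamiliar):
        raise ValueError("each participant needs a value in both conditions")
    diffs = [f - u for f, u in zip(familiar, unfamiliar)]
    n = len(diffs)
    # Mean difference divided by its standard error.
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1

# Made-up per-participant activation values, NOT data from the study.
familiar = [0.30, 0.25, 0.40, 0.35]
unfamiliar = [0.10, 0.15, 0.20, 0.05]

t, df = paired_t(familiar, unfamiliar)
print(f"t = {t:.2f}, df = {df}")
```

A large t value relative to its degrees of freedom is what “significant” boils down to here: the familiar-voice responses are consistently higher across participants, not just higher on average.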

And the participant feedback? Yep, many reported recalling memories or feeling emotional connections when listening to the AI version of their mother’s voice. This lines up nicely with the brain data – those activated areas are exactly where you’d expect memory and emotion processing to happen.

Why This Is Kind of a Big Deal

So, what’s the takeaway? This study offers early neuroscientific evidence that AI-synthesized voices of people we know and care about can actually influence our brain activity in a measurable way. It’s not just about how natural the AI voice sounds; it’s about the *familiarity* and the connections that voice triggers.

Think about the possibilities! This isn’t just a cool science experiment; it has real-world potential. Imagine AI companions for the elderly that can speak in the voice of a distant family member, helping to ease loneliness. Or mental health applications that use a comforting, familiar-sounding voice for guided meditations or therapy exercises. It could make interacting with technology feel less cold and more… human.

It also shows that using fNIRS to study AI voice interaction is a really innovative approach. Instead of just asking people if they liked the voice (which is subjective), you can actually see the brain reacting. That’s pretty powerful!

A Few Caveats (Because Science Isn’t Perfect)

Of course, like any study, this one had its limits. The sample size was relatively small, and the participants were all young adults, so we can’t necessarily say this applies to everyone. The fNIRS tech, while great for this kind of study, doesn’t give super-precise locations within the brain like an MRI would. Also, the experiment had a fixed order (unfamiliar voice first, then familiar), so an order effect *could* have nudged the results, though they tried to minimize it with rest periods.

And yes, the AI voices weren’t *perfectly* indistinguishable from real human voices, though the study found this difference didn’t seem to affect the core finding about familiar voices triggering brain responses. It just goes to show that while AI is getting amazing, the nuances of human voice and emotion are incredibly complex.

Looking Ahead

Despite the limitations, this research opens up exciting avenues. Future studies could look at different levels of familiarity, or explore how AI voices affect people with specific conditions like anxiety or depression. The potential for using personalized AI voices to enhance emotional well-being and make technology feel more connected is huge.

Ultimately, this study gives us a fascinating glimpse into how our brains react to familiar sounds, even when they’re generated by artificial intelligence. It suggests that the emotional and cognitive power of a voice isn’t just about the sound waves themselves, but about the connections and memories they trigger. And that’s a powerful idea for the future of human-AI interaction.

Source: Springer