AI Scans Predict Cancer Recurrence: A Breakthrough in Nasopharyngeal Care

Hey there! Let’s talk about something pretty important in the world of cancer treatment, specifically for a type called nasopharyngeal carcinoma (NPC). If you’re not familiar, NPC is a head and neck cancer that’s quite common in Southeast Asia. The standard treatment for locally advanced cases often involves a tough regimen of chemotherapy and radiotherapy. It’s effective for many, but here’s the kicker: even after all that, a significant number of patients face the dreaded return of the cancer, either locally or spreading elsewhere.

That’s a tough pill to swallow, right? You go through intense treatment, hoping you’re in the clear, only to have the cancer come back. This uncertainty is a huge challenge for both patients and doctors. Wouldn’t it be amazing if we could get a better idea of *who* is at higher risk of recurrence *after* their initial treatment? That way, doctors could potentially tailor follow-up plans or interventions for those who need them most.

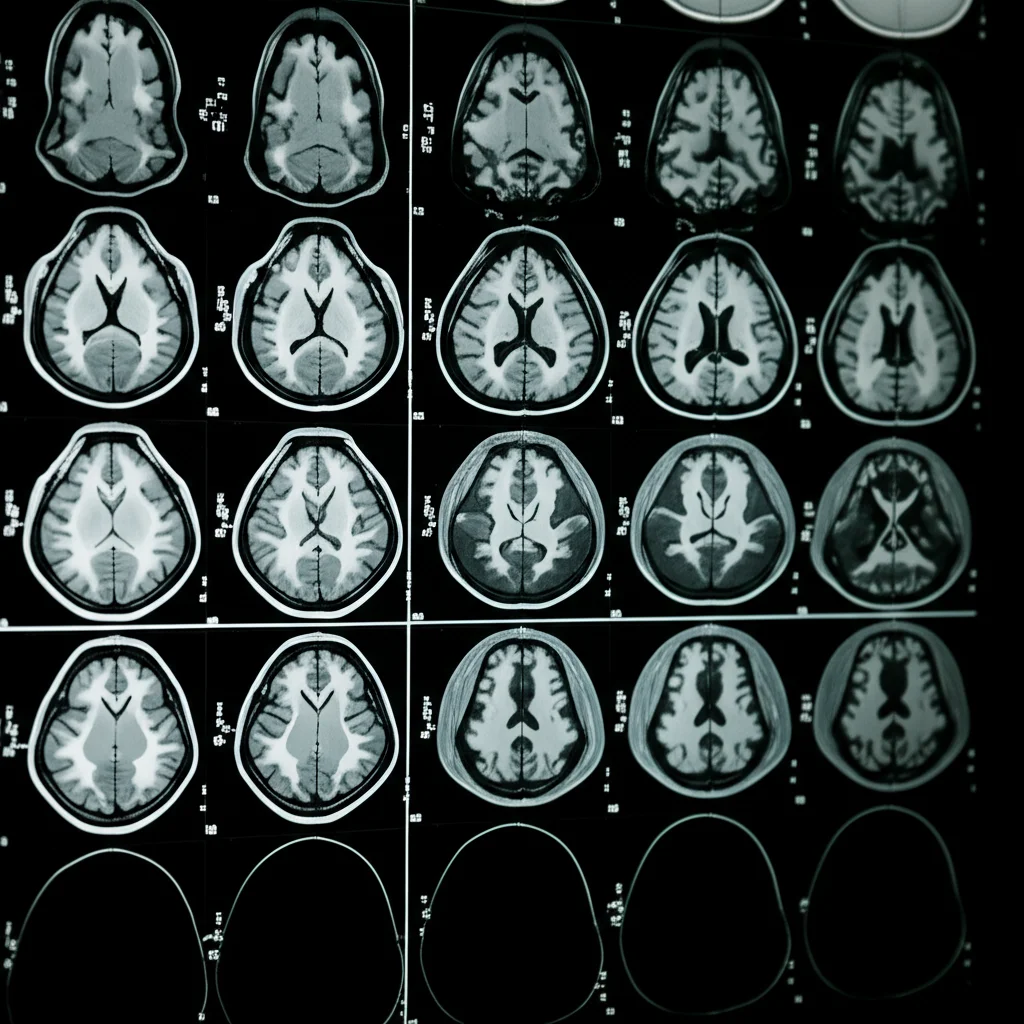

This is where some really cool technology comes into play – specifically, using MRI scans and something called radiomics, powered by artificial intelligence (AI), including deep learning. Think of it like this: MRI scans give us incredibly detailed pictures of the inside of the body. Radiomics is a fancy term for extracting a ton of quantitative data – numbers, patterns, textures – from these images that you can’t necessarily see just by looking. And deep learning? That’s a powerful type of AI that can learn complex patterns from this data.

So, the big idea is: Can we use AI to look at MRI scans taken *after* initial treatment (in this case, neoadjuvant chemoradiotherapy, or NACT) and predict which patients are likely to see their cancer return within, say, five years? That’s exactly what this study we’re diving into aimed to find out.

What We Set Out to Do

Our goal was pretty clear: test whether MRI-based radiomic models, especially those incorporating deep learning, could effectively predict recurrence in patients with locally advanced NPC after they’d gone through NACT. We wanted to see if these models could add real clinical value.

To do this, we pulled together data from 328 NPC patients across four different hospitals. This multi-center approach is super important because it helps make sure our findings aren’t just specific to one place or one type of scanner. We split these patients into two groups: a training group (where the AI models learned) and a validation group (where we tested how well the learned models performed on new, unseen data).
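To make the training/validation idea concrete, here is a minimal sketch of a stratified split, which keeps the share of recurrence cases similar in both groups. The 70/30 ratio, the seed, the labels, and the helper name `stratified_split` are all assumptions for illustration; the article does not say how the study divided its patients.

```python
import random

def stratified_split(labels, train_fraction=0.7, seed=0):
    """Return (train_idx, val_idx), keeping the class ratio similar in both."""
    rng = random.Random(seed)
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)
    train_idx, val_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)  # shuffle within each class, reproducibly
        cut = round(len(indices) * train_fraction)
        train_idx.extend(indices[:cut])
        val_idx.extend(indices[cut:])
    return sorted(train_idx), sorted(val_idx)

# Hypothetical labels: 1 = recurrence, 0 = no recurrence (not the study's data).
labels = [1] * 30 + [0] * 70
train_idx, val_idx = stratified_split(labels)
print(len(train_idx), len(val_idx))
```

The validation indices are never touched while fitting, which is what lets them stand in for "new, unseen" patients.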

Digging into the Scans: Radiomics and Deep Learning

We focused on two specific types of MRI sequences: contrast-enhanced T1-weighted (T1WI+C) and T2-weighted (T2WI). These sequences highlight different tissue properties and can give us complementary information about the tumor.

From these scans, we extracted two main types of features:

- Traditional Radiomic Features: These are the classic quantitative measures derived from images – things like shape, size, intensity, and texture patterns. We got a whopping 975 of these from each sequence!

- Deep Radiomic Features: This is where the deep learning comes in. We used a pre-trained AI model (specifically, a ResNet50) to automatically extract another 1000 features from each sequence. These deep features are often less intuitive for humans to understand but can capture incredibly complex patterns the AI has learned.
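To give a feel for what a "traditional" feature is, here is a minimal sketch that computes three simple intensity-based (first-order) features from a handful of voxel values. The function name, the bin count, and the toy regions are assumptions for illustration; the study's 975 features per sequence would have come from a dedicated radiomics toolkit, which the article does not name.

```python
import math
from collections import Counter

def first_order_features(voxels, n_bins=4):
    """Compute a few first-order features from a flat list of ROI intensities."""
    n = len(voxels)
    mean = sum(voxels) / n
    variance = sum((v - mean) ** 2 for v in voxels) / n
    lo, hi = min(voxels), max(voxels)
    width = (hi - lo) / n_bins or 1.0  # avoid a zero bin width for flat regions
    # Discretise intensities into equal-width bins, then take Shannon entropy.
    bins = Counter(min(int((v - lo) / width), n_bins - 1) for v in voxels)
    entropy = -sum((c / n) * math.log2(c / n) for c in bins.values())
    return {"mean": mean, "variance": variance, "entropy": entropy}

# Toy regions of interest (hypothetical intensities, not real MRI data).
uniform_roi = [5.0] * 8
mixed_roi = [0.0, 0.0, 1.0, 1.0, 2.0, 2.0, 3.0, 3.0]

uniform_features = first_order_features(uniform_roi)
mixed_features = first_order_features(mixed_roi)
print(uniform_features["entropy"], mixed_features["entropy"])
```

A perfectly uniform region has zero entropy, while a region with evenly mixed intensities scores higher. That is the kind of number radiomics uses as a rough proxy for how varied a tumor looks.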

Because the data came from multiple centers, there could be slight variations due to different scanners or protocols. To make sure this didn’t mess up our results, we used a technique called ComBat correction. Think of it as a way to harmonize the data, making sure the features we extracted were truly related to the tumor and not just the hospital where the scan was done. It worked well, reducing those pesky technical variations.
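The basic idea behind harmonization can be sketched in a few lines. Note that this is a deliberately simplified stand-in, not ComBat itself: it just shifts and rescales each hospital's values for one feature so every site ends up with the pooled mean and spread. Real ComBat additionally uses empirical Bayes estimates and can protect variables of interest. The function name and the numbers are hypothetical.

```python
from statistics import mean, pstdev

def harmonize(values, sites):
    """Align each site's mean and spread of ONE feature to the pooled values."""
    pooled_mean, pooled_sd = mean(values), pstdev(values)
    out = [0.0] * len(values)
    for site in set(sites):
        idx = [i for i, s in enumerate(sites) if s == site]
        site_vals = [values[i] for i in idx]
        site_mean = mean(site_vals)
        site_sd = pstdev(site_vals) or 1.0  # guard against a constant feature
        for i in idx:
            # Standardise within the site, then map onto the pooled scale.
            out[i] = (values[i] - site_mean) / site_sd * pooled_sd + pooled_mean
    return out

# Hypothetical feature values: site B's scanner reads about 10 units higher.
values = [1.0, 2.0, 3.0, 11.0, 12.0, 13.0]
sites = ["A", "A", "A", "B", "B", "B"]
harmonized = harmonize(values, sites)
print(harmonized)
```

After the adjustment, both sites share the same average, so the scanner offset no longer looks like a tumor property. The flip side is that a naive version like this would also erase any genuine difference between the hospitals' patient populations.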

With all these features, we had a lot of data! Too much, in fact. Using too many features can make models overcomplicated and less reliable. So, we used a method called LASSO (Least Absolute Shrinkage and Selection Operator) to pick out the most important features – the ones that were best at predicting recurrence. LASSO helped us narrow down the list significantly.
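Here is a minimal from-scratch sketch of how LASSO prunes features, using coordinate descent with soft-thresholding. It assumes a plain least-squares LASSO on a tiny made-up dataset; the article does not say which LASSO variant or penalty strength the study used, and `alpha`, the data, and the function names are illustrative only.

```python
def soft_threshold(rho, alpha):
    """Shrink rho toward zero by alpha; values within [-alpha, alpha] become 0."""
    if rho > alpha:
        return rho - alpha
    if rho < -alpha:
        return rho + alpha
    return 0.0

def lasso(X, y, alpha, n_iter=200):
    """Minimise (1/2n)*||y - Xw||^2 + alpha*||w||_1 by coordinate descent."""
    n, p = len(X), len(X[0])
    w = [0.0] * p
    for _ in range(n_iter):
        for j in range(p):
            # Correlation of feature j with the residual that ignores feature j.
            rho = sum(
                X[i][j] * (y[i] - sum(X[i][k] * w[k] for k in range(p) if k != j))
                for i in range(n)
            ) / n
            z = sum(X[i][j] ** 2 for i in range(n)) / n
            w[j] = soft_threshold(rho, alpha) / z if z else 0.0
    return w

# Hypothetical data: y depends strongly on feature 0, barely on feature 1,
# and not at all on feature 2.
X = [[1, 1, 1], [1, -1, -1], [-1, 1, -1], [-1, -1, 1]]
y = [2.05, 1.95, -1.95, -2.05]
weights = lasso(X, y, alpha=0.1)
selected = [j for j, wj in enumerate(weights) if wj != 0.0]
print(weights, selected)
```

The penalty pushes weak coefficients all the way to exactly zero, so the features left with nonzero weights are the "selected" ones. That is how a list of nearly two thousand candidates can shrink to a handful.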

Building and Testing the Models

Once we had our selected features, we built three different types of predictive models:

- Model I: Used only the selected traditional radiomic features.

- Model II: Combined the selected traditional radiomic features with the selected deep radiomic features.

- Model III: Combined Model II’s features (traditional + deep radiomics) with some basic clinical features (age, gender, T-stage, N-stage). We were limited in the clinical data available from all centers, which is a common challenge in multi-center studies.

We then trained and tested these three models using five different machine learning classifiers (basically, different AI algorithms that learn to classify or predict). We evaluated how well each model performed using standard metrics like AUC (Area Under the ROC Curve), accuracy, sensitivity, and specificity. AUC is a good overall measure of how well a model distinguishes between patients who will recur and those who won’t.
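For readers who like to see the arithmetic, here is a minimal sketch of how these four metrics can be computed from predicted risk scores. The labels, scores, and the 0.5 decision threshold are made up for illustration and are not the study's data or settings.

```python
def auc(y_true, scores):
    """Probability that a random positive case outscores a random negative one."""
    pos = [s for s, y in zip(scores, y_true) if y == 1]
    neg = [s for s, y in zip(scores, y_true) if y == 0]
    # Count pairwise wins; ties count as half a win.
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def confusion_metrics(y_true, scores, threshold=0.5):
    """Accuracy, sensitivity and specificity at a fixed decision threshold."""
    pred = [1 if s >= threshold else 0 for s in scores]
    tp = sum(p == 1 and y == 1 for p, y in zip(pred, y_true))
    tn = sum(p == 0 and y == 0 for p, y in zip(pred, y_true))
    fp = sum(p == 1 and y == 0 for p, y in zip(pred, y_true))
    fn = sum(p == 0 and y == 1 for p, y in zip(pred, y_true))
    return {
        "accuracy": (tp + tn) / len(y_true),
        "sensitivity": tp / (tp + fn),  # share of true recurrences caught
        "specificity": tn / (tn + fp),  # share of non-recurrences cleared
    }

# Hypothetical patients: 1 = recurred, 0 = did not.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
scores = [0.9, 0.6, 0.3, 0.7, 0.4, 0.2, 0.1, 0.05]
model_auc = auc(y_true, scores)
metrics = confusion_metrics(y_true, scores)
print(model_auc, metrics)
```

AUC looks at the ranking of scores across all possible thresholds, while sensitivity and specificity depend on the one threshold you pick. A model can therefore rank patients well and still miss some recurrences at a given cut-off.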

What We Found: The Results Are In!

Okay, so what did the models tell us?

First off, the clinical characteristics (age, gender, stage) didn’t show significant differences between the recurrence and non-recurrence groups in our cohorts. This might explain why adding these limited clinical features in Model III didn’t always boost performance significantly.

After feature selection, we ended up with a smaller, more manageable set of features:

- From T1WI+C: 15 traditional radiomic features and 6 deep radiomic features.

- From T2WI: 9 traditional radiomic features and 6 deep radiomic features.

This shows that LASSO successfully identified key patterns from both types of features.

Now for the performance! We looked at the results in the validation cohort (the real test of how well the models generalize).

- In the T1WI+C sequence, Model III (combining everything) using the Naive Bayes classifier performed best, with an AUC of 0.76. Model I (traditional only) and Model II (traditional + deep) had slightly lower AUCs (around 0.71-0.75).

- But here’s where it gets really interesting: In the T2WI sequence, the models performed *overall better* than on T1WI+C. And the star performer was Model II (traditional + deep radiomics) using the Random Forest classifier, hitting an impressive AUC of 0.87 in the validation cohort! Model I (traditional only) on T2WI was good too (AUC around 0.80-0.84), but Model II clearly showed an improvement by adding those deep learning features. Model III on T2WI was also good (AUC around 0.82-0.85), but Model II edged it out.

This suggests that the T2WI sequence might capture information particularly relevant to recurrence prediction, and combining traditional radiomics with deep learning features from T2WI seems to be the winning combination in our study.

The traditional features selected often related to tumor shape and texture. The texture ones included NGTDM (neighbouring gray tone difference matrix) and GLSZM (gray level size zone matrix) features, which previous studies have linked to tumor heterogeneity, basically how varied the cells and structure are within the tumor. This heterogeneity is often associated with more aggressive cancers. The deep features are harder to visualize or explain in simple terms, but on T2WI their inclusion boosted the models’ predictive power.

Why This is a Big Deal

So, what does this all mean? It means that using AI to analyze standard MRI scans *after* initial treatment holds significant promise for predicting which patients with locally advanced NPC (LA-NPC) are at high risk of recurrence. This isn’t just a cool academic exercise; it has real-world implications.

If a doctor can use a tool like this to identify a patient as high-risk, they might recommend more frequent follow-up scans, consider additional or different therapies, or enroll the patient in clinical trials for new treatments. Conversely, identifying low-risk patients could potentially allow for less intensive follow-up, reducing anxiety and healthcare costs. It’s about moving towards more personalized, data-driven patient care.

The Road Ahead: Limitations and Future Fun

Now, no study is perfect, and ours has limitations, which are important to acknowledge and learn from.

- First, while we had data from four centers, the sample size from three of them was smaller. We combined the data, but getting even more external validation data from completely different hospitals is crucial to ensure these models work everywhere.

- Second, those deep radiomic features are powerful, but they’re like a black box. We know they help prediction, but *why*? Visualizing or understanding what patterns the AI is picking up is a challenge we need to tackle.

- Third, our clinical data was limited to age, gender, and stage. Future studies should definitely include more factors like specific treatment details (exact radiation dose, chemotherapy drugs), tumor biomarkers (like HPV status, which is relevant for NPC), and overall patient health status. More data points can potentially lead to even better predictions.

- Finally, we focused on MRI because it’s great for soft tissues like the nasopharynx. But combining data from other scans like CT or PET might provide even more comprehensive information and improve predictions further.

Also, we noticed that while our models had a good overall ability to distinguish between groups (high AUC), the sensitivity (the share of patients who actually recurred that the model correctly flagged) was sometimes a bit low. This might be a side effect of the ComBat correction we used to harmonize data across centers: it’s great for reducing technical noise, but it might smooth out some subtle biological differences that are key to catching recurrence cases. Finding the right balance between data harmonization and preserving predictive biological signals is an ongoing area of research.

Despite these limitations, the results are exciting. Combining traditional image analysis with the power of deep learning from standard MRI scans provides a valuable tool for predicting recurrence in LA-NPC patients after NACT.

Wrapping It Up

So, there you have it. We took a look at how AI, specifically deep learning applied to MRI radiomics, can help predict the future for patients battling locally advanced nasopharyngeal carcinoma after their initial treatment. By extracting hidden patterns from standard scans and combining them with smart algorithms, we built models that show real promise in identifying those at higher risk of recurrence.

This isn’t the final word, of course. There’s always more to learn, more data to analyze, and models to refine. But this study adds another piece to the puzzle, demonstrating that AI-driven analysis of medical images is a powerful tool that can assist doctors in making better, more informed decisions, ultimately leading to improved outcomes for patients. It’s a step forward in leveraging technology to fight cancer more effectively.

Source: Springer