Dancing with Algorithms: How AI is Revolutionizing Ethnic Dance Instruction

Hey there! Ever watched someone perform an amazing ethnic dance? You know, the kind with intricate steps, flowing movements, and a vibe that just screams culture and history? It’s absolutely captivating, isn’t it? These dances are like living museums, carrying stories and traditions through generations. But teaching these complex, culturally-rich movements? That’s a whole other ballgame. It’s challenging, takes ages to master, and sometimes, those subtle cultural nuances can get lost in translation.

The Problem with the Old Ways

So, picture this: traditional ethnic dance instruction often relies heavily on teachers demonstrating moves over and over. While there’s magic in that personal connection, it can be super time-consuming. Students might miss tiny details – the exact angle of a hand in Miao dance, or the specific footwork in Tibetan dance – because, let’s be honest, humans aren’t perfect playback machines! Plus, getting the *feeling* and the *cultural context* across is tough when you’re just focused on the steps. And personalized feedback for every student? In a big class, that’s almost impossible. Teachers are amazing, but they can only do so much. This is where adapting to modern tech becomes crucial. We need tools that can help make learning more effective, personalized, and maybe even more culturally immersive.

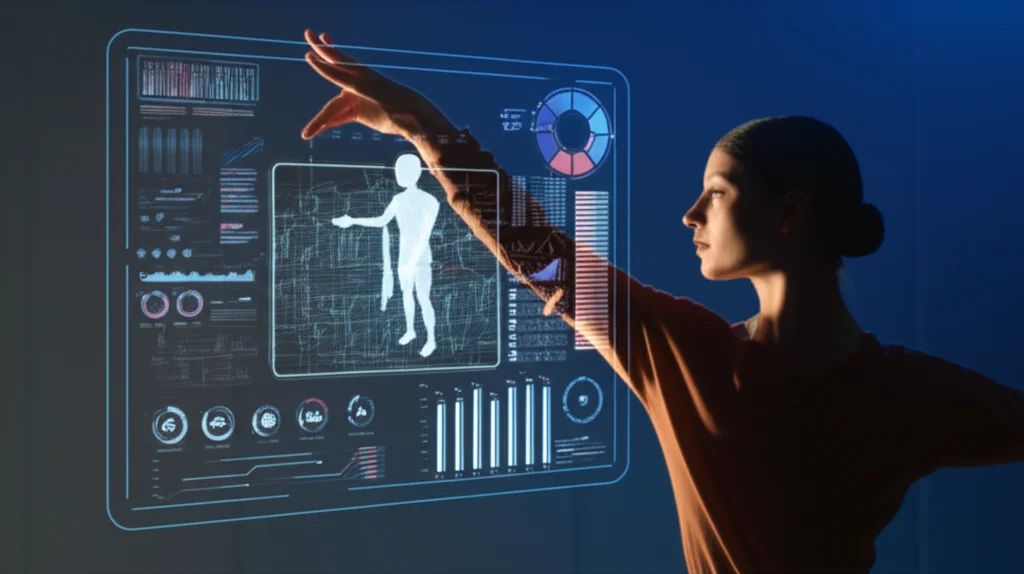

Enter AI and 3D Vision

This is where artificial intelligence steps onto the dance floor, and it’s pretty exciting! Researchers are looking at how AI, specifically a type called Deep Learning (DL), can help. Think of DL as teaching a computer to learn from tons of examples, just like a student learns from watching a teacher, but way faster and with incredible detail. Convolutional Neural Networks (CNNs) are particularly good at looking at images and videos. They can spot patterns, shapes, and movements.

Now, traditional CNNs are mostly 2D – they look at one flat image at a time. But dance isn’t a single snapshot, right? It happens in space, and it unfolds over *time*. That’s why this research focuses on *three-dimensional* CNNs (3D-CNNs), where the third dimension is time. These clever networks look at a stack of consecutive video frames all at once, capturing not just *where* a dancer is, but *how* they’re moving from one moment to the next. They can see the flow, the trajectory, the subtle shifts that make a dance unique.
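To make that "looking across frames" idea concrete, here’s a tiny sketch of a 3D convolution written with plain NumPy loops. It is not the researchers’ code. The toy clip, the `conv3d_valid` helper, and the hand-picked "motion" kernel are all made up for illustration; a real network learns its kernels from data.

```python
import numpy as np

def conv3d_valid(clip, kernel):
    """Naive 'valid' 3D cross-correlation over (time, height, width)."""
    T, H, W = clip.shape
    kt, kh, kw = kernel.shape
    out = np.zeros((T - kt + 1, H - kh + 1, W - kw + 1))
    for t in range(out.shape[0]):
        for y in range(out.shape[1]):
            for x in range(out.shape[2]):
                window = clip[t:t + kt, y:y + kh, x:x + kw]
                out[t, y, x] = np.sum(window * kernel)
    return out

# Toy clip: 4 frames of 5x5 pixels, one bright dot moving right each frame.
clip = np.zeros((4, 5, 5))
for t in range(4):
    clip[t, 2, t] = 1.0

# Hand-picked kernel spanning 2 frames: "next frame minus current frame".
motion_kernel = np.zeros((2, 1, 1))
motion_kernel[0, 0, 0] = -1.0
motion_kernel[1, 0, 0] = 1.0

response = conv3d_valid(clip, motion_kernel)                      # reacts to the moving dot
static_response = conv3d_valid(np.ones((4, 5, 5)), motion_kernel)  # nothing moves here
```

Because the kernel reaches across two frames, the moving dot produces non-zero responses while the unchanging clip produces none. A 2D filter looking at one frame at a time couldn’t tell those two cases apart.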

To make the AI even better at handling complex, deep information (like a long dance sequence), the researchers combined 3D-CNNs with something called a Residual Network, or ResNet. Think of ResNet as giving the AI shortcuts to remember things it learned earlier, preventing it from getting ‘tired’ or ‘forgetting’ details as it processes deeper layers of information. This combo, the 3D-ResNet model, is designed specifically to tackle the unique challenges of ethnic dance movements.
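Here’s a rough sketch of what one of those shortcuts looks like, using small matrix multiplications in place of real 3D convolutions. The `residual_block` function and its weights are hypothetical, and setting the weights to zero is just a stand-in for "this branch hasn’t learned anything useful".

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def plain_block(x, w1, w2):
    """Two layers with no shortcut: the input only survives through the weights."""
    return relu(relu(x @ w1) @ w2)

def residual_block(x, w1, w2):
    """Same two layers, but the input is added back in before the final ReLU."""
    fx = relu(x @ w1) @ w2
    return relu(x + fx)

rng = np.random.default_rng(0)
x = rng.random((4, 8))       # 4 non-negative feature vectors of size 8
zeros = np.zeros((8, 8))     # a branch that contributes nothing

deep = x
for _ in range(20):          # stack 20 residual blocks
    deep = residual_block(deep, zeros, zeros)

lost = plain_block(x, zeros, zeros)
```

With the shortcut, the input comes out of all 20 blocks unchanged (`deep` equals `x`), while the plain block wipes it out (`lost` is all zeros). That is the intuition behind the shortcut: a layer that has nothing to add can simply pass the signal along.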

Building the Brain: How the Model Works

Okay, how do you teach a computer to recognize a Miao dance step from a Mongolian one? It starts with data – lots of it! The researchers collected videos of six specific ethnic dances:

- Miao

- Dai

- Tibetan

- Uygur

- Mongolian

- Yi

They gathered performance videos and teaching materials, making sure they had a good mix of styles and dancers. To make it easier for the AI to understand the movements, they simplified the human body into a 3D skeleton model. Imagine a stick figure with 25 key points (joints) tracked in 3D space across each frame of the video. This skeleton data is great because it focuses purely on the movement, ignoring distracting backgrounds or clothing.
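If you’re wondering what that skeleton data might look like in code, one plausible layout is an array of shape (frames, 25 joints, 3 coordinates). The sketch below assumes that layout and assumes joint 0 sits at the hips. Neither detail comes from the paper, and the random numbers just stand in for real motion capture.

```python
import numpy as np

NUM_JOINTS = 25   # from the article
ROOT_JOINT = 0    # assumption: index 0 is a hip / spine-base joint

def center_on_root(skeleton, root=ROOT_JOINT):
    """Subtract the root joint's position in every frame, so where the
    dancer stands in the room doesn't change the data."""
    return skeleton - skeleton[:, root:root + 1, :]

rng = np.random.default_rng(0)
clip = rng.normal(size=(30, NUM_JOINTS, 3))   # 30 frames of fake joint positions
centered = center_on_root(clip)

# The same dance performed two steps to the side gives the same centered data.
shifted = center_on_root(clip + np.array([2.0, 0.0, 0.0]))
```

Centering like this is one common preprocessing choice for skeleton data, not necessarily the one used in the study.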

The 3D-ResNet model then takes these video segments (short clips) as input. It stacks 3D convolutional layers, pooling layers, and those helpful residual blocks. The convolutional layers are like filters that scan the video in 3D (width, height, and time) to find interesting features – a quick arm swing, a sudden jump, a specific posture. Pooling layers shrink the data down while keeping the strongest responses. The residual blocks let the network go deeper without losing performance. Finally, the features are passed to fully connected layers that classify the movement – “Ah, that’s a Tibetan ceremonial gesture!”
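The last two stages, pooling and classification, are easy to sketch in a few lines of NumPy. Everything here is illustrative: the feature map is random, the weights are untrained, and the helper names (`max_pool3d`, `classify`) are invented for this example. Only the six dance labels come from the article.

```python
import numpy as np

DANCES = ["Miao", "Dai", "Tibetan", "Uygur", "Mongolian", "Yi"]

def max_pool3d(x, size=2):
    """Non-overlapping max pooling over (time, height, width).
    Any leftover rows that don't fill a full window are dropped."""
    T, H, W = (d // size for d in x.shape)
    x = x[:T * size, :H * size, :W * size]
    x = x.reshape(T, size, H, size, W, size)
    return x.max(axis=(1, 3, 5))

def softmax(z):
    e = np.exp(z - z.max())   # subtract the max for numerical stability
    return e / e.sum()

def classify(features, weights, bias):
    """Fully connected layer plus softmax over the six dance labels."""
    probs = softmax(features.ravel() @ weights + bias)
    return DANCES[int(np.argmax(probs))], probs

rng = np.random.default_rng(0)
feature_map = rng.random((4, 4, 4))          # stand-in for conv-layer output
pooled = max_pool3d(feature_map)             # shape (2, 2, 2)
weights = rng.normal(size=(pooled.size, len(DANCES)))   # untrained, random
bias = np.zeros(len(DANCES))
label, probs = classify(pooled, weights, bias)
```

Since the weights are random, the predicted label here is meaningless. The point is just the shape of the pipeline: features get smaller, then get turned into one probability per dance.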


They also used techniques like Dropout during training, which is like randomly telling some parts of the network to take a break. This prevents the AI from becoming *too* specialized on the training data and helps it generalize better to new, unseen dance moves.
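Here’s roughly what that "take a break" trick looks like in code. This is the common "inverted dropout" formulation, and the drop rate of 0.5 is an assumption, since the article doesn’t say which rate the researchers used.

```python
import numpy as np

def dropout(x, p, rng, training=True):
    """Inverted dropout: zero each value with probability p and rescale
    the survivors so the expected value stays the same."""
    if not training or p == 0.0:
        return x
    keep = rng.random(x.shape) >= p
    return x * keep / (1.0 - p)

rng = np.random.default_rng(0)
activations = np.ones((1000, 100))

dropped = dropout(activations, p=0.5, rng=rng)                    # training time
untouched = dropout(activations, p=0.5, rng=rng, training=False)  # inference time
```

During training, about half the values become zero and the rest are doubled, so the average stays close to 1. At inference time nothing is dropped.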

Putting it to the Test: The Big Performance

So, did it work? The researchers tested their 3D-ResNet model on their own dataset of the six ethnic dances and also on a large public dataset called NTU-RGBD60, which has various human actions. The results were seriously impressive!

On their self-built dataset, the model achieved an accuracy of **96.70%**. On the NTU-RGBD60 database, it hit **95.41%**. That’s incredibly high accuracy for recognizing complex movements!

They also compared their 3D-ResNet model to other AI approaches like standard 3D-CNNs, ResNet alone, basic CNNs, and some other recent algorithms. Their model consistently outperformed the others, showing a significant improvement in accuracy (at least 3.85% better on their dataset). This means the combination of 3D convolution for spatio-temporal features and residual connections for depth really pays off for dance recognition.

They even looked at how well it recognized each of the six specific dances. The 3D-ResNet model was the most accurate across *all* dance types, even for dances like Miao and Yi, which had fewer samples initially. They used data augmentation (like slightly altering existing videos) to help with the sample imbalance for dances like Yi, boosting the model’s learning ability.
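The article doesn’t spell out which alterations were used, so treat the following as one plausible flavor of augmentation for skeleton data rather than the researchers’ recipe. It assumes the y axis points up, rotates the whole skeleton a few degrees around it, and adds a little noise. The function names and default values are invented for this sketch.

```python
import numpy as np

def rotate_about_vertical(skeleton, degrees):
    """Rotate every joint around the y axis (assumed to be vertical)."""
    a = np.radians(degrees)
    rot = np.array([[np.cos(a), 0.0, np.sin(a)],
                    [0.0, 1.0, 0.0],
                    [-np.sin(a), 0.0, np.cos(a)]])
    return skeleton @ rot.T

def augment(skeleton, rng, max_degrees=10.0, jitter_std=0.01):
    """Make a slightly different copy: small random turn plus small noise."""
    angle = rng.uniform(-max_degrees, max_degrees)
    noise = rng.normal(scale=jitter_std, size=skeleton.shape)
    return rotate_about_vertical(skeleton, angle) + noise

rng = np.random.default_rng(1)
yi_clip = rng.normal(size=(10, 25, 3))   # fake clip: 10 frames, 25 joints
extra_clips = [augment(yi_clip, rng) for _ in range(5)]
```

A rotation keeps limb lengths intact, so each copy is still a physically sensible pose seen from a slightly different angle. That is one way to give an under-represented class more examples to learn from.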

An interesting part was adding a Temporal Attention Module (TAM). This module helps the AI focus on the most important moments in a dance sequence. For example, in a fast spin, it might pay more attention to the frames where the arms are swinging most dramatically. Adding TAM slightly increased accuracy (e.g., Miao dance accuracy went from 92.86% to 95.13%), though it added a tiny bit to the processing time.
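The article doesn’t describe the internals of the TAM, but the general idea of temporal attention can be sketched like this: score each frame, turn the scores into weights with a softmax, and take a weighted average over time. The `score_vector` below is hypothetical. In a real model it would be learned, while here it is fixed by hand.

```python
import numpy as np

def temporal_attention(frame_features, score_vector):
    """Weight frames by a softmax over per-frame scores, then pool over time."""
    scores = frame_features @ score_vector        # one score per frame, shape (T,)
    scores = scores - scores.max()                # for numerical stability
    weights = np.exp(scores) / np.exp(scores).sum()
    pooled = weights @ frame_features             # weighted average, shape (D,)
    return pooled, weights

# 5 frames, 3 features each. Frame 2 has a big value in feature 0,
# standing in for "the arms are swinging most dramatically here".
frame_features = np.array([[0.1, 0.0, 0.0],
                           [0.2, 0.0, 0.0],
                           [3.0, 0.0, 0.0],
                           [0.2, 0.0, 0.0],
                           [0.1, 0.0, 0.0]])
score_vector = np.array([1.0, 0.0, 0.0])   # hand-picked, not learned

pooled, weights = temporal_attention(frame_features, score_vector)
```

The weights add up to 1, and the dramatic frame gets the largest share, so it dominates the pooled summary instead of being averaged away.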

They also did cross-dataset testing, mixing their data with the public one and adding another dataset (AIST++). While accuracy dropped a bit on the completely different style of AIST++ (Western contemporary dance), their 3D-ResNet still did much better than older methods like 2D-CNNs or GCNs in these cross-cultural tests. This shows it has better *generalization* capability across different dance styles, which is super important for a tool meant to help with diverse ethnic dances.

They also checked how efficient the model was. It can process a frame in about 18.2 milliseconds, which works out to roughly 55 frames per second. That comfortably clears the 30 frames per second or so that smooth real-time video needs. It’s also faster than some other complex models like GCNs or Transformers, while still being highly accurate.
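For the curious, the arithmetic behind that speed claim is quick to check. The 30 frames per second target is the usual rule of thumb for smooth video, not a number taken from the paper.

```python
def frames_per_second(ms_per_frame):
    """Convert a per-frame processing time in milliseconds to frames per second."""
    return 1000.0 / ms_per_frame

fps = frames_per_second(18.2)    # a bit under 55 frames per second
budget_ms = 1000.0 / 30          # about 33.3 ms available per frame at 30 fps
headroom_ms = budget_ms - 18.2   # time left over per frame
```

So at 18.2 milliseconds per frame, the model leaves around 15 milliseconds of slack per frame against a 30 fps target.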

More Than Just Moves: Cultural Sensitivity

Now, here’s a really crucial point: you can’t just build a tech tool for ethnic dance without thinking about the culture behind it. These dances are sacred to the communities they come from. The researchers stressed that AI tools in this space must be built with cultural sensitivity and awareness. They need to be conduits for *preserving* and *respecting* cultural diversity, not just technical gadgets.

They emphasized collaborating closely with cultural experts throughout the process. For instance, when annotating the movements (telling the AI what each move is), they worked with experts to understand the cultural meaning and significance, especially for complex details like finger arrangements in Miao dance or ritual actions in Tibetan dance. This helps avoid cultural bias in the data the AI learns from.

They also talked about making the AI tool’s user interface culturally adaptable – maybe offering different language options or designs that resonate with different cultural aesthetics. And importantly, they suggested building features that encourage cultural dialogue among users, like forums where students and teachers can share their understanding and feelings about the dances. It’s about fostering mutual respect and understanding.

They acknowledge that avoiding cultural bias is an ongoing challenge, especially when annotating movements that have deep symbolic meaning in one culture but not another. It requires continuous iteration and refinement based on feedback from cultural experts and users.

The Big Picture and What’s Next

So, what does this all mean for ethnic dance education? It means we’re getting powerful new tools!

- Improved Teaching: AI can provide accurate, real-time feedback on movements, helping students grasp complex steps faster. Imagine an AI spotting that your arm angle is slightly off in a Dai dance move and giving you instant pointers!

- Cultural Preservation: By accurately recording and analyzing these dances, AI can help create digital archives, preserving endangered forms for future generations.

- Wider Access: AI-powered systems could potentially make high-quality ethnic dance instruction more accessible, even in areas without many expert teachers.

- Innovation: It opens doors for new ways of learning, maybe even interactive experiences using VR.

It’s not without its challenges, though. As mentioned, data is key, and getting enough diverse data for *all* the world’s ethnic dances is a huge task. The current model recognizes the *form* of the movement but doesn’t understand the deep *cultural meaning* behind it (like the ritual significance of a gesture). Also, scaling this up for a large online class would require more computational power.

But the researchers are already thinking about the future. They want to expand the dataset to include even more ethnic dance styles from different regions. They’re exploring ways to make the model more adaptable, perhaps allowing users to upload videos of local dances so the AI can learn from them. And crucially, they plan to work with instructors and cultural scholars to build a “movement-culture” knowledge base, helping the AI (and the users) understand the *why* behind the moves, not just the *how*.

Ultimately, this research is a fantastic step forward. It shows that AI isn’t just for self-driving cars or recommending movies; it can be a powerful ally in preserving and teaching beautiful, culturally significant art forms like ethnic dance. It’s about blending cutting-edge technology with ancient traditions, helping these incredible dances continue to thrive in the digital age.

Source: Springer